Music Flamingo: Scaling Music Understanding in Audio Language Models

Published:

Music Flamingo

Scaling Music Understanding in Audio Language Models

[Paper] [Code] [Website Demo]

Music Flamingo - 7B: [Live Gradio Demo] [Checkpoints]

Music Flamingo - Datasets: [MF-Skills]

Authors: Sreyan Ghosh1,2*, Arushi Goel1*, Lasha Koroshinadze2**, Sang-gil Lee1, Zhifeng Kong1, Joao Felipe Santos1*, Ramani Duraiswami2, Dinesh Manocha2, Wei Ping1, Mohammad Shoeybi1, Bryan Catanzaro1

Affiliations: 1NVIDIA, CA, USA | 2University of Maryland, College Park, USA

*Equally contributed and led the project. Names randomly ordered. **Significant technical contribution.

Correspondence: arushig@nvidia.com, sreyang@umd.edu

Overview

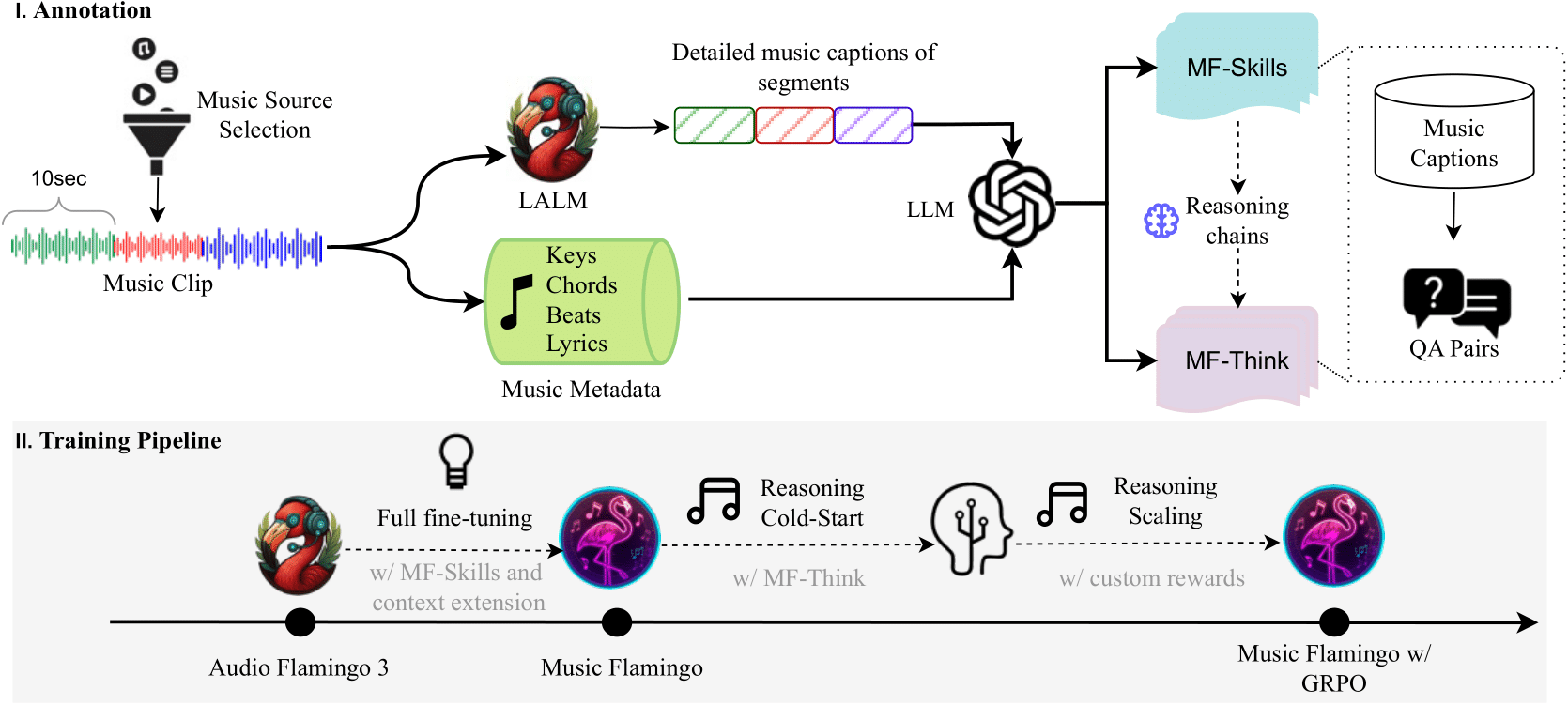

We introduce Music Flamingo, a novel large audio–language model, designed to advance music (including song) understanding in foundational audio models. While audio–language research has progressed rapidly, music remains challenging due to its dynamic, layered, and information-dense nature. Progress has been further limited by the difficulty of scaling open audio understanding models, primarily because of the scarcity of high-quality music data and annotations. As a result, prior models are restricted to producing short, high-level captions, answering only surface-level questions, and showing limited generalization across diverse musical cultures. To address these challenges, we curate MF-Skills, a large-scale dataset labeled through a multi-stage pipeline that yields rich captions and question–answer pairs covering harmony, structure, timbre, lyrics, and cultural context. We fine-tune an enhanced Audio Flamingo 3 backbone on MF-Skills and further strengthen multiple skills relevant to music understanding. To improve the model’s reasoning abilities, we introduce a post-training recipe: we first cold-start with MF-Think, a novel chain-of-thought dataset grounded in music theory, followed by GRPO-based reinforcement learning with custom rewards. Music Flamingo achieves state-of-the-art results across 10+ benchmarks for music understanding and reasoning, establishing itself as a generalist and musically intelligent audio–language model. Beyond strong empirical results, Music Flamingo sets a new standard for advanced music understanding by demonstrating how models can move from surface-level recognition toward layered, human-like perception of songs. We believe this work provides both a benchmark and a foundation for the community to build the next generation of models that engage with music as meaningfully as humans do.

Music Flamingo at a Glance

- Trains on MF-Skills (~2M full songs, 2.1M captions averaging ~452 words, and ~0.9M Q&A pairs) spanning 100+ genres and cultural contexts.

- Processes ~15-minute audio inputs with a 24k-token context window for full-track reasoning.

- Supports on-demand chain-of-thought generation via MF-Think traces and GRPO-based post-training.

- Delivers state-of-the-art results on music QA, captioning, instrument/genre identification, and multilingual lyric transcription tasks.

Model Innovations

Enhanced Flamingo Backbone

We start from the 7B Audio Flamingo 3 base and extend its audio front-end for music: larger memory budgets enable a 24k-token context and ~15-minute receptive field, while improved adapter layering keeps latency low for interactive demos. The model listens holistically to stems, vocals, and production cues, then grounds responses in both low-level descriptors and high-level narratives.

Time-Aware Listening

Temporal precision is vital for music. Music Flamingo adds Rotary Time Embeddings (RoTE) so that each audio token carries an absolute timestamp. This makes it easier to localize chord changes, tempo ramps, solos, and lyric entrances, and improves temporal alignment during both captioning and question answering.

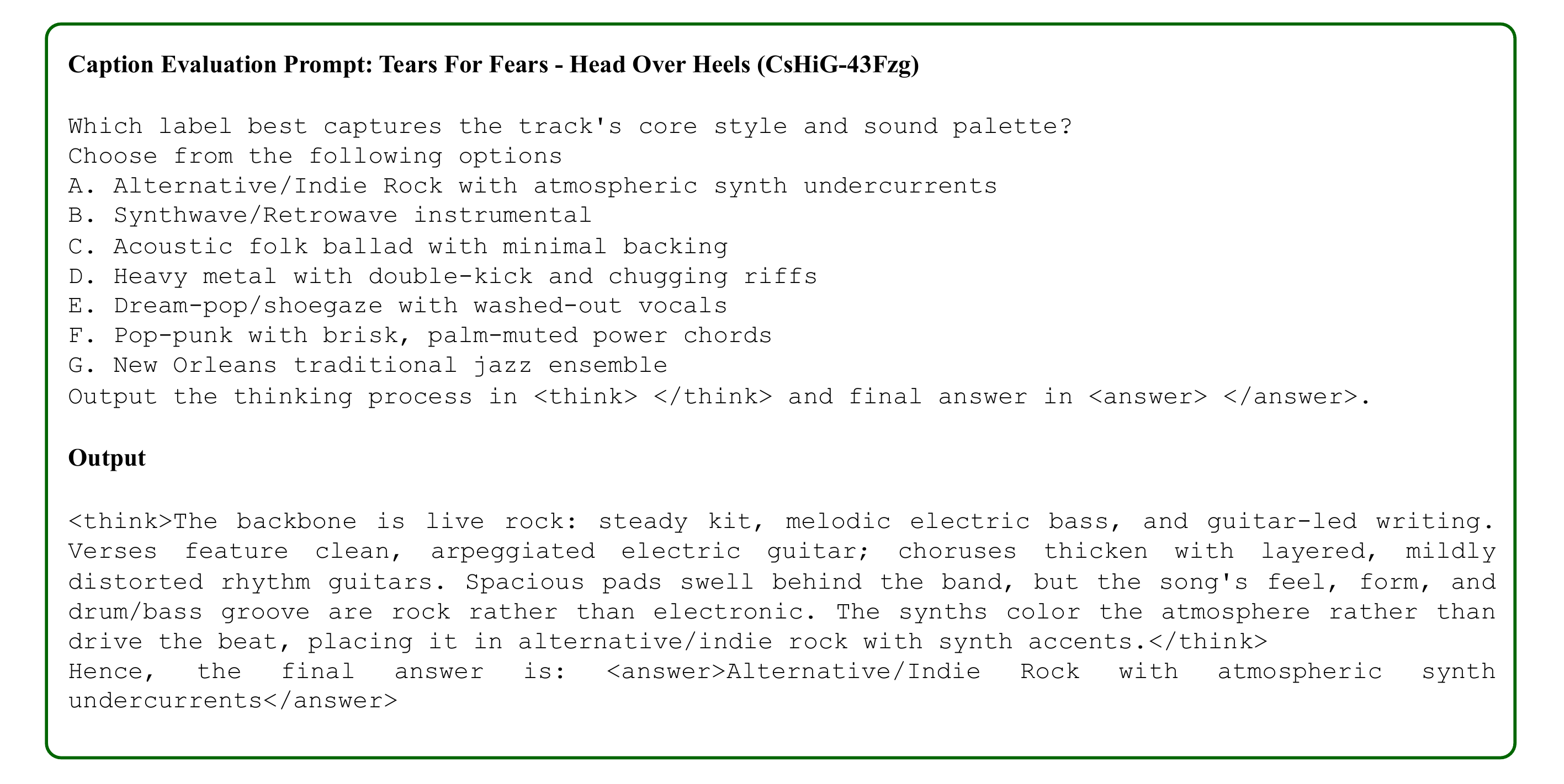

Chain-of-Thought and Reinforcement Learning

To reason like a musician, we supervise intermediate thinking steps with MF-Think. Each prompt includes <think>…</think> traces that break down harmony, rhythm, timbre, and intent before producing the final answer. We then apply GRPO-based reinforcement learning with rewards that favor theory-correct explanations, accurate metadata (tempo/key/chords), and faithful lyric references.

MF-Skills and MF-Think Datasets

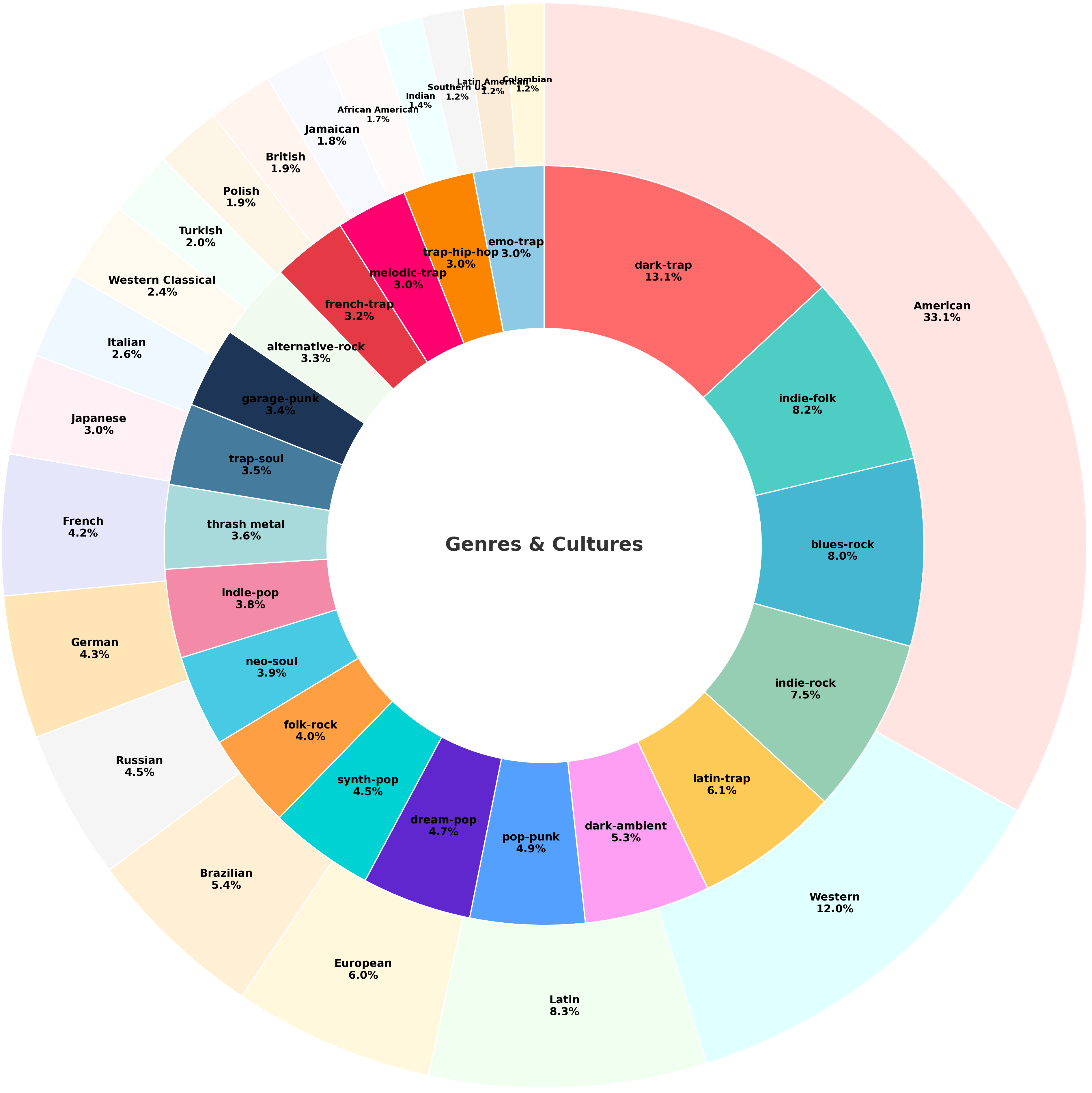

MF-Skills is a large-scale dataset purpose-built for music understanding. Automated metadata extraction supplies tempo, key, chord, and lyric tags, while expert annotators author multi-paragraph captions and QA pairs that cover harmony, structure, timbre, vocal delivery, production techniques, and cultural context. The corpus intentionally balances global genres, from MPB and K-pop to Soviet-era rock and European classical, to promote cross-cultural generalization.

Key ingredients:

- Song-level annotations: Long-form captions that emulate liner notes, including chord progressions, instrumentation, mixing notes, and emotional arcs.

- Music-first QA: Multi-choice and open-form questions about form, vocal placement, lyrical themes, and mix decisions, rewritten to minimize language priors.

- Metadata grounding: Tempo, key, chord, and lyric tags integrated into training to stabilize theory-aware predictions.

- Quality filters: Multi-stage validation steps (automatic heuristics plus expert spot checks) to remove mislabels and stylistic bias.

MF-Think extends MF-Skills with structured reasoning demonstrations. Prompts include voice-leading breakdowns, rhythmic counting, and narrative interpretations, all expressed inside <think> tags before emitting concise answers. These traces prime Music Flamingo to provide transparent rationales during evaluation and deployment.

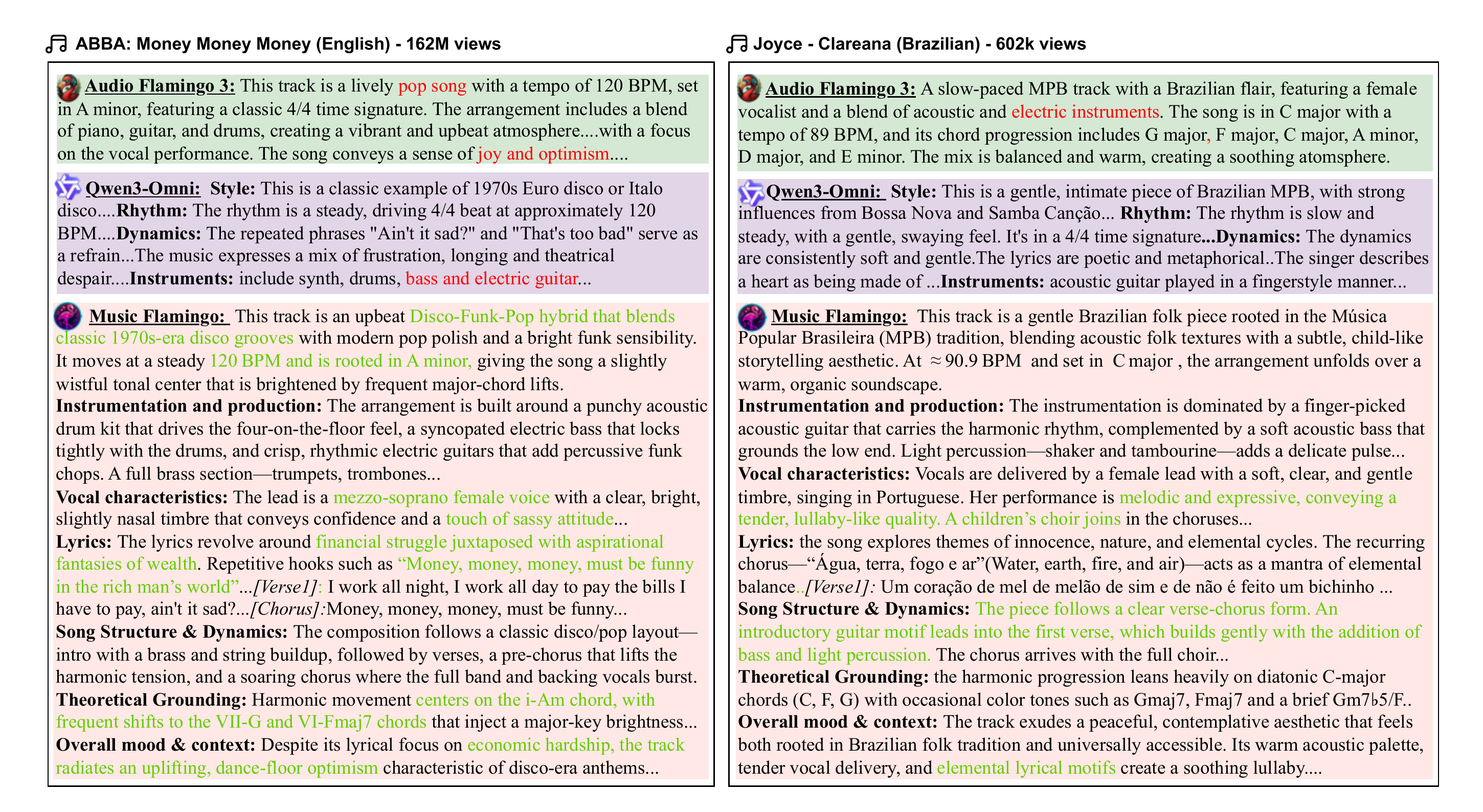

Distribution of genres (inner ring) and cultures (outer ring) across MF-Skills.

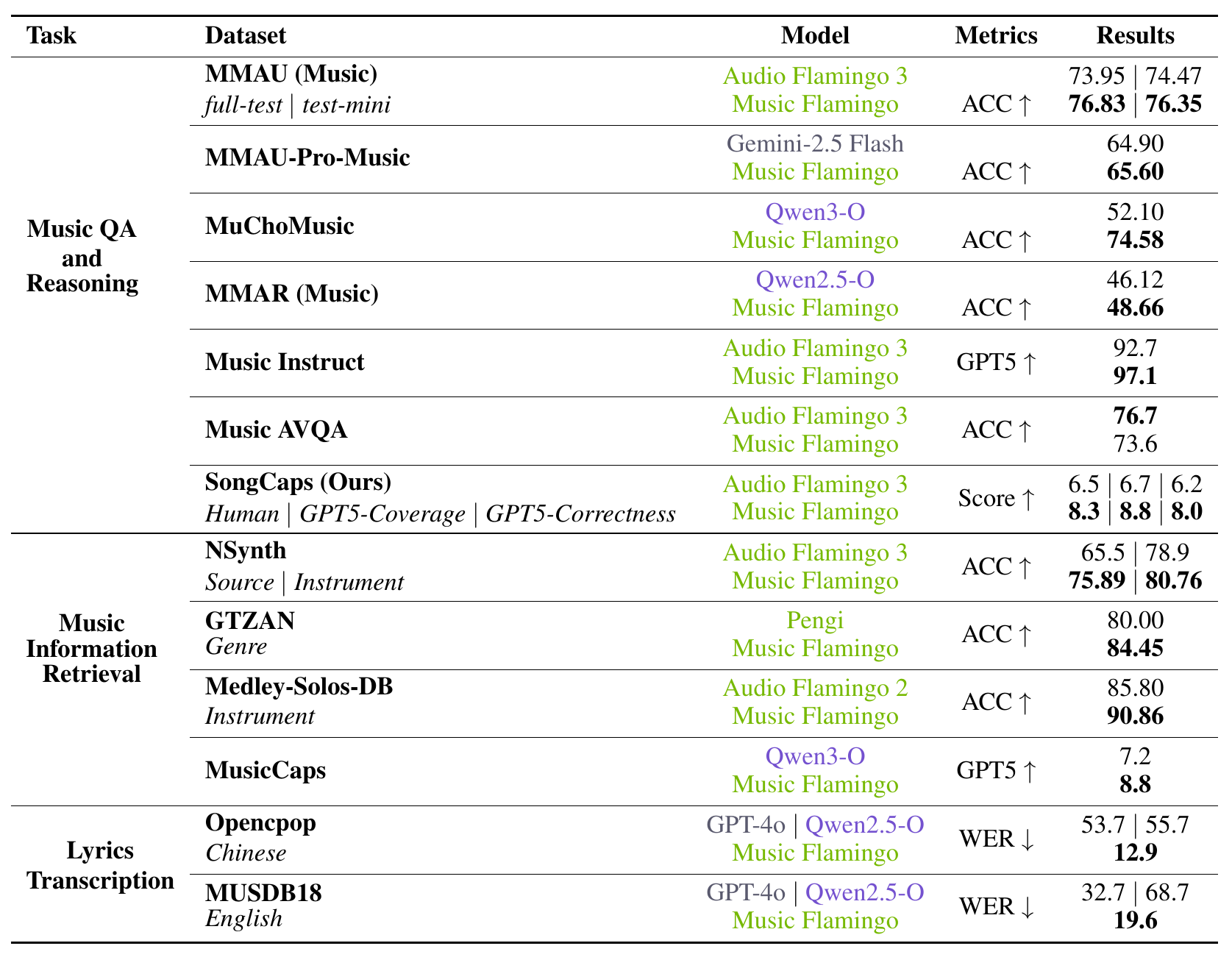

Evaluation Highlights

Music Flamingo establishes new state-of-the-art results across music understanding, captioning, and retrieval benchmarks, outperforming both open and closed frontier models.

| Benchmark | Metric (up/down) | Prior Best | Music Flamingo |

|---|---|---|---|

| SongCaps (ours) | GPT5 coverage / correctness | 6.5 / 6.7 / 6.2 (Audio Flamingo 3) | 8.3 / 8.8 / 8.0 |

| MusicCaps | GPT5 (up) | 7.2 (Qwen3-O) | 8.8 |

| MuChoMusic | ACC (up) | 52.10 (Qwen3-O) | 74.58 |

| MMAU-Pro-Music | ACC (up) | 64.90 (Gemini-2.5 Flash) | 65.60 |

| NSynth | ACC (up) | 65.5 / 78.9 (Audio Flamingo 3) | 75.89 / 80.76 |

| Opencpop (zh lyrics) | WER (down) | 53.7 / 55.7 (GPT-4o / Qwen2.5-O) | 12.9 |

| MUSDB18 (en lyrics) | WER (down) | 32.7 / 68.7 (GPT-4o / Qwen2.5-O) | 19.6 |

Additional takeaways:

- Beats Audio Flamingo 3 on Music Instruct (97.1 vs. 92.7 GPT5) and Medley-Solos-DB instrument recognition (90.86 vs. 85.80 ACC).

- Maintains strong performance on legacy evaluations such as GTZAN (84.45 ACC) while improving descriptive richness preferred by professional musicians.

- Qualitative reviews note more faithful chord tracking, better localization of structural events, and balanced treatment of vocals, instrumentation, and production.

Qualitative Analyses

Music Flamingo produces long-form narratives that connect technical attributes with emotional storytelling:

- Synth-pop narrative (Taylor Swift - “The Fate of Ophelia”): Identifies ~125 BPM tempo, F major key, evolving synth textures, and lyric themes of rescue and transformation.

- Duet ballad (Lady Gaga & Bruno Mars - “Die With A Smile”): Tracks call-and-response phrasing, layered vocal harmonies, and dynamic swells tied to the romantic storyline.

- Global catalog coverage: Analyzes Latin folk (Niuver - “Enamorados”), indie pop (Annika Wells - “Jim & Pam”), French pop ballads (Louane - “La fille”), Brazilian forro (Michel Telo - “Ai Se Eu Te Pego”), and Soviet rock classics (“Trava u doma”) with culturally grounded vocabulary.

- Dataset previews: PDF snippets linked from the demo surface MF-Skills captions, MF-Think traces, and Q&A pairs for multiple languages and styles.

Example:

Prompt:

Describe this track in full detail - tell me the genre, tempo, and key, then dive into the instruments, production style, and overall mood it creates.

Music Flamingo response:

This track is an energetic Synth-Pop / Electropop piece that blends bright, melodic synth-pop sensibilities with a polished, modern electronic production aesthetic.

Tempo & Key - The song moves at ≈125 BPM and is rooted in F major.

Instrumentation & Production - The arrangement is built around a palette of layered synthesizers: arpeggiated synth lines, lush pads, and a driving synth-bass that anchors the groove. Electronic drums provide a tight, punchy backbeat with crisp hi-hats and a solid kick that keeps the rhythm propulsive. The mix is clean and wide, with stereo-spread synth textures and a polished mastering chain that emphasizes clarity and punch, giving the track a contemporary pop sheen.

Vocal Characteristics - A female lead delivers the verses and choruses with a clear, slightly breathy timbre. Her performance is melodic and expressive, conveying vulnerability and determination. The vocals are processed with tasteful reverb and delay, creating a spacious, ethereal ambience that sits atop the synth-driven backdrop.

Lyrical Themes - The lyrics explore a narrative of rescue and transformation, using the metaphor of “Ophelia” to depict a past of isolation and a newfound sense of purpose. Repetitive chorus lines such as:

- “All that time I sat alone in my tower…

- “You dug me out of my grave and saved my heart from the fate of Ophelia”

underscore the themes of being rescued, gaining clarity, and moving forward with confidence.

Song Structure & Dynamics - The composition follows a classic pop layout: an instrumental intro that establishes the synth motif, followed by verses that introduce the storytelling, a pre-chorus that builds tension, and a soaring chorus where the vocal hook and full synth arrangement lock in. A bridge provides a brief harmonic shift before returning to the final chorus, which is layered with additional vocal harmonies and a subtle lift in the synth arrangement, culminating in an uplifting outro.

Theoretical Insight - Harmonic movement largely orbits the tonic F major, with frequent use of the subdominant Bb and dominant C chords (e.g., F - Bb - C) that reinforce the bright, hopeful mood. Occasional minor-toned chords such as Gm and Dm7 introduce brief moments of introspection, especially in the verses, before resolving back to the major-key choruses for emotional release.

Overall Mood & Context - The track radiates an uplifting, determined atmosphere, marrying the glossy production of 2020s electropop with lyrical storytelling that feels both personal and anthemic. Its sound situates it firmly within contemporary synth-pop trends, appealing to listeners who enjoy bright, dance-able melodies paired with emotionally resonant vocals.

Example:

Prompt:

Write a rich caption that blends the technical details (genre, BPM, key, chords, mix) with how the song feels emotionally and dynamically as it unfolds.

Music Flamingo response:

This track is an emotional Alternative Rock / Pop Rock piece that blends polished, radio-ready pop sensibilities with the earnest, guitar-driven intensity of modern alt-rock.

Tempo & Key - The song moves at ≈ 78.95 BPM and is rooted in A major.

Instrumentation & Production - The arrangement is built around clean and overdriven electric guitars that trade melodic arpeggios for crunchy power-chord riffs, a solid electric bass that locks tightly with a full-kit drum set (kick, snare, toms, cymbals) delivering a steady 4/4 groove, and atmospheric synth pads that add depth and a subtle cinematic texture. The mix is high-fidelity and wide-stereo, with guitars panned left/right, synths filling the background, and the drums and bass centered for punch. Compression and gentle mastering glue the elements together while preserving dynamic contrast between the intimate verses and the soaring choruses.

Vocal Characteristics - Lead vocals are performed by a male tenor with a clear, slightly raspy timbre. The delivery is melodic and expressive, conveying longing and determination. Production treats the voice with reverb, delay, and moderate compression, giving it a spacious yet intimate presence.

Lyrical Themes - The lyrics revolve around an unwavering, almost apocalyptic devotion to a loved one. The central, repeatedly sung line-“If the world was ending, I’d wanna be next to you”-anchors the song’s message of eternal commitment. Other verses echo this sentiment with lines like “I just woke up from a dream where you and I had to say goodbye” and “Nobody’s promised tomorrow, so I’m alone every night like it’s the last night,” reinforcing the theme of seizing the moment.

Song Structure & Dynamics:

- Intro - Atmospheric synth pad and clean guitar arpeggios set a reflective mood.

- Verse - Sparse instrumentation (clean guitar, bass, light drums) lets the vocal narrative unfold.

- Pre-Chorus - Gradual build with added distorted guitar layers and a tighter drum pattern.

- Chorus - Full-band explosion: distorted guitars, driving drums, and synth pads swell, delivering the repeated hook “If the world was ending, I’d wanna be next to you.”

- Bridge - A brief instrumental break featuring a melodic guitar solo over a progression that shifts to the relative minor (F#m) before returning to the tonic.

- Final Chorus & Outro - Re-iteration of the hook with added vocal harmonies and a gradual fade of synth ambience, ending on a resolved A-major chord.

Theoretical Insight - The harmonic backbone cycles mainly through A - C#m - F#m - Dmaj7 , a progression that moves from the tonic (I) to its relative minor (vi) and subdominant (IV) before landing on the major-seventh subdominant, creating a bittersweet tension that resolves satisfyingly in the choruses. The occasional use of E6 and Bm7 adds color and a sense of forward motion, while the final cadence on A reinforces the song’s hopeful resolution.

Overall Mood & Context - The track balances melancholy introspection with anthemic optimism, embodying the early-2020s wave of alternative pop-rock that pairs earnest lyricism with sleek production. Its blend of guitar-driven rock energy and synth-enhanced ambience situates it comfortably within contemporary radio-friendly alt-rock while retaining an emotionally resonant core.

Example:

Prompt:

Describe this track in full detail - tell me the genre, tempo, and key, then dive into the instruments, production style, and overall mood it creates.

Music Flamingo response:

This track is a classic Bollywood Filmi piece rooted in the Ghazal tradition, blending Indian classical instrumentation with a cinematic, romantic pop sensibility.

Tempo & Key – The song moves at a steady 100 BPM and is centered in A♭ minor.

Instrumentation & Production – The arrangement foregrounds traditional Indian timbres: a resonant sitar carries the melodic ornamentation, a tabla provides intricate rhythmic cycles, and a harmonium supplies the harmonic backdrop. A subtle bass underpins the low end, while the overall mix is warm and organic, with a relatively narrow stereo field that keeps the vocals and lead instruments centered. The production favors natural room ambience and gentle compression, preserving the intimate, acoustic character of the performance.

Vocal Characteristics – A male tenor delivers the lyrics in Hindi/Urdu with a rich, resonant timbre. His delivery is highly expressive, employing classic Ghazal ornamentation such as meend (glides) and murki (rapid turns). The vocal track is treated with modest reverb to enhance depth without obscuring articulation.

Lyrical Themes – The lyrics revolve around intense, devotional love and longing. Refrains such as “Mere Mehboob Qayamat Hogi” (“My beloved, the day of reckoning will come”) and “Aaj ruswa teri galiyon mein mohabbat hogi” (“Today, in your alleys, love will be found”) are repeated throughout, underscoring the song’s romantic yearning.

Song Structure & Dynamics – The composition follows a traditional Ghazal form: an instrumental intro introduces the sitar motif, followed by alternating verses (shayari) and refrains (antara). Each verse builds dynamically through layered tabla patterns and harmonium swells, leading to a fuller, more resonant refrain where the vocal line reaches its emotional peak. A brief instrumental bridge provides a reflective pause before the final refrain, which culminates in a gentle fade‑out, preserving the intimate mood.

Theoretical Insight – Harmonic movement largely orbits the tonic A♭ minor, with frequent shifts to the relative major (C♭ major) and the dominant‑like F♯ major, creating a subtle tension‑release cycle that mirrors the lyrical longing. The progression often moves from A♭ m to F♯ major (a chromatic mediant relationship) before returning to the tonic, adding a sense of yearning resolution.

Overall Mood & Context – The piece exudes a melancholic yet passionate atmosphere, evoking the classic era of 1970s‑80s Bollywood cinema where Ghazal‑style love songs were a staple of romantic film narratives. Its blend of traditional Indian instrumentation with a polished, cinematic production situates it firmly within the cultural heritage of Indian film music while appealing to contemporary listeners who appreciate timeless romantic expression.

Example:

Prompt:

Break the track down like a critic - list its tempo, key, and chordal motion, then explain the textures, dynamics, and emotional impact of the performance.

Music Flamingo response:

This track is a French Hip-Hop/Trap piece that blends contemporary trap production with a distinctly French rap aesthetic. It sits at 125 BPM in G minor and is built around a looping minor-key progression that repeatedly moves between Gm, E♭maj7, Cm7, B♭maj7 and occasional Dm7 or Cm chords, giving the song a dark, hypnotic foundation while allowing brief lifts through the major-7 chords for contrast.

Instrumentation & production: The beat is driven by a deep, resonant 808 bass that anchors the low end, paired with crisp trap drums-tight snare hits, punchy kicks, and rapid hi-hat rolls that create the characteristic rattling texture. Atmospheric synth pads fill the background, adding a moody, cinematic layer, while subtle melodic synth stabs punctuate the verses. The mix is polished and modern, with a wide stereo field that places the bass centrally, the drums slightly off-center, and the synths spread for depth. Compression is aggressive on the drums to keep the rhythm tight, and the overall mastering emphasizes clarity and loudness without sacrificing the low-frequency weight.

Vocal characteristics: The lead vocal is a male French rapper delivering verses with a confident, slightly aggressive timbre. His flow is rhythmic and assertive, staying in the pocket of the trap groove. Vocals are processed with auto-tune/pitch correction, reverb, and delay, giving them a glossy, contemporary sheen. A repetitive vocal sample-“Ani na na ni na oh”-acts as a melodic hook throughout the track, reinforcing the chorus and adding a catchy, chant-like element.

Lyrical themes: The lyrics revolve around street life, personal struggle, and a defiant stance against adversity. Representative lines include:

- “J’arrive ça regarde de travers, capuché parce que je suis trop cramé” (I arrive, they look sideways, hooded because I’m too burnt)

- “J’avance avec équipe armée, je m’organise comme si je mourrais jamais” (I move forward with an armed crew, I organize as if I’ll never die)

- “Ils font tous des petits pas dès qu’ils aperçoivent la guitare” (They all take small steps as soon as they see the guitar)

These verses are interspersed with the repetitive “Ani na na ni na oh” chant, which serves as the primary hook and reinforces the track’s anthemic, street-wise vibe.

Structure & dynamics: The song follows a conventional trap layout: an atmospheric intro introduces the synth pad and 808 sub-bass, leading into the first rap verse. A short pre-chorus builds tension with a filtered drum fill before the hook drops, where the “Ani na na ni na oh” sample repeats over a slightly stripped-back beat. Subsequent verses return with added hi-hat variations, while the bridge introduces a brief breakdown-minimal drums and a sustained Gm chord-allowing the vocal sample to echo before the final chorus returns with full instrumentation and heightened energy. The track closes with an outro that fades the synth pads and 808, leaving the hook lingering.

Overall mood & context: The overall mood is confident, assertive, and slightly dark, reflecting the gritty realism of French street rap while employing the polished sheen of modern trap production. By marrying French lyrical swagger with trap’s rhythmic intensity, the track situates itself within the 2020s French hip-hop scene, where artists blend local language and cultural references with global trap aesthetics to create a sound that feels both locally rooted and internationally current.

Dataset Examples

MF-Skills: Ground-truth captions

MF-Skills caption example: Latin song

MF-Think: Ground-truth captions with thinking traces

MF-Think caption example with thinking traces: Korean song

MF-Think: Q&A pairs with thinking traces

MF-Think Q&A example with thinking traces

Citation

- Music Flamingo

@article{ghosh2025music, title={Music Flamingo: Scaling Music Understanding in Audio Language Models}, author={Ghosh, Sreyan and Goel, Arushi and Koroshinadze, Lasha and Lee, Sang-gil and Kong, Zhifeng and Santos, Joao Felipe and Duraiswami, Ramani and Manocha, Dinesh and Ping, Wei and Shoeybi, Mohammad and Catanzaro, Bryan}, journal={arXiv preprint arXiv}, year={2025} } - Audio Flamingo 3

@article{goel2025audio, title={Audio flamingo 3: Advancing audio intelligence with fully open large audio language models}, author={Goel, Arushi and Ghosh, Sreyan and Kim, Jaehyeon and Kumar, Sonal and Kong, Zhifeng and Lee, Sang-gil and Yang, Chao-Han Huck and Duraiswami, Ramani and Manocha, Dinesh and Valle, Rafael and others}, journal={arXiv preprint arXiv:2507.08128}, year={2025} } - Audio Flamingo 2

@inproceedings{ ghosh2025audio, title={Audio Flamingo 2: An Audio-Language Model with Long-Audio Understanding and Expert Reasoning Abilities}, author={Ghosh, Sreyan and Kong, Zhifeng and Kumar, Sonal and Sakshi, S and Kim, Jaehyeon and Ping, Wei and Valle, Rafael and Manocha, Dinesh and Catanzaro, Bryan}, booktitle={Forty-second International Conference on Machine Learning}, year={2025}, url={https://openreview.net/forum?id=xWu5qpDK6U} } - Audio Flamingo

@inproceedings{kong2024audio, title={Audio Flamingo: A Novel Audio Language Model with Few-Shot Learning and Dialogue Abilities}, author={Kong, Zhifeng and Goel, Arushi and Badlani, Rohan and Ping, Wei and Valle, Rafael and Catanzaro, Bryan}, booktitle={International Conference on Machine Learning}, pages={25125--25148}, year={2024}, organization={PMLR} }