Human-Robot Handovers

Handovers

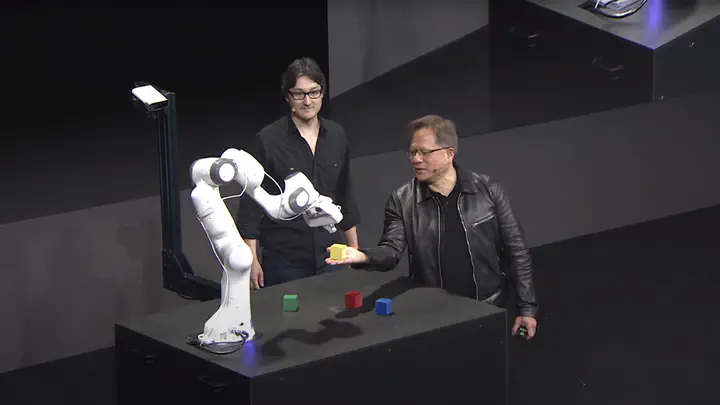

In 2019, NVIDIA’s CEO Jensen Huang presented an example robotics application, Leonardo, in his keynote speech at the GPU Technology Conference in Suzhou, China. In the demo, the robot took a block directly from Jensen’s hand.

Human Grasp Classification for Handovers

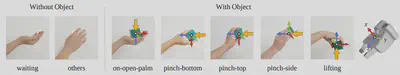

To continue building robots that can collaborate safely and effectively with humans in warehouses and in the home, we developed a human-to-robot handover method in which the robot meets the human halfway: it classifies how the human hand is holding the object into one of a set of grasp categories defined for the handover task.

“If the hand is grasping a block, then the hand pose can be categorized as on-open-palm, pinch-bottom, pinch-top, pinch-side, or lifting,” as explained in our IROS 2020 paper, Human Grasp Classification for Reactive Human-to-Robot Handovers. “If the hand is not holding anything, it could be either waiting for the robot to handover an object or just doing nothing specific.” See this NVIDIA technical blog for more details.
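The categories in the quote can be made concrete with a small Python sketch. It assumes some per-frame classifier (not shown) that emits one label per camera frame, and it smooths those labels with a majority vote over a short sliding window so that a single noisy frame does not make the robot change its behavior. The `HumanGrasp` enum simply mirrors the quoted categories; the `GraspLabelSmoother` class, the window size, and the `is_holding` helper are hypothetical illustrations, not code from the paper.

```python
from collections import Counter, deque
from enum import Enum


class HumanGrasp(Enum):
    """Hand-pose categories from the quote above (label names are hypothetical)."""
    ON_OPEN_PALM = "on-open-palm"
    PINCH_BOTTOM = "pinch-bottom"
    PINCH_TOP = "pinch-top"
    PINCH_SIDE = "pinch-side"
    LIFTING = "lifting"
    WAITING = "waiting"   # empty hand, waiting for the robot to hand over an object
    NOTHING = "nothing"   # empty hand, doing nothing specific


def is_holding(label: HumanGrasp) -> bool:
    """True for the five categories that describe a hand grasping a block."""
    return label not in (HumanGrasp.WAITING, HumanGrasp.NOTHING)


class GraspLabelSmoother:
    """Hypothetical helper: majority vote over the most recent per-frame labels."""

    def __init__(self, window: int = 5) -> None:
        # deque with maxlen drops the oldest label once the window is full
        self.history: deque = deque(maxlen=window)

    def update(self, label: HumanGrasp) -> HumanGrasp:
        self.history.append(label)
        # most_common(1) returns [(label, count)]; ties go to the label seen first
        return Counter(self.history).most_common(1)[0][0]


if __name__ == "__main__":
    smoother = GraspLabelSmoother(window=5)
    frames = [HumanGrasp.PINCH_TOP, HumanGrasp.PINCH_TOP, HumanGrasp.PINCH_SIDE,
              HumanGrasp.PINCH_TOP, HumanGrasp.NOTHING]
    for frame in frames:
        stable = smoother.update(frame)
    print(stable, is_holding(stable))
```

A majority vote trades a few frames of latency for stability; a shorter window makes the robot react sooner to genuine changes in the human's grasp.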

Handovers of Arbitrary Objects

In our follow-up work, we present a human-to-robot handover system that generalizes to diverse unknown objects by combining accurate hand and object segmentation with temporally consistent grasp generation. The system places no hard constraints on how naive users present an object to the robot, as long as they hold it in a way the robot can grasp. It adapts to user preferences, reacts to the user’s movements, and generates grasps and motions that are smooth and safe.
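One simple way to picture temporally consistent grasp generation is to re-score the candidate grasps on every frame while penalizing candidates that lie far from the grasp chosen on the previous frame, so the robot's target does not jump around as the user moves the object. The sketch below assumes each grasp is reduced to a 3D gripper position plus a quality score from some grasp generator; the `Grasp` type, `select_grasp`, and the `switch_penalty` weight are hypothetical, and the system described above is considerably more involved.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class Grasp:
    position: Tuple[float, float, float]  # gripper position in meters (orientation omitted)
    quality: float                         # grasp-generator score, higher is better


def select_grasp(candidates: Sequence[Grasp],
                 previous: Optional[Grasp],
                 switch_penalty: float = 2.0) -> Grasp:
    """Pick the candidate with the best quality minus a penalty on distance
    from the previously selected grasp (hypothetical scoring rule)."""
    if not candidates:
        raise ValueError("no grasp candidates")

    def score(g: Grasp) -> float:
        if previous is None:
            # first frame: nothing to stay consistent with
            return g.quality
        return g.quality - switch_penalty * math.dist(g.position, previous.position)

    return max(candidates, key=score)


if __name__ == "__main__":
    prev = Grasp((0.0, 0.0, 0.0), 0.8)
    near = Grasp((0.01, 0.0, 0.0), 0.8)
    far = Grasp((0.5, 0.0, 0.0), 0.9)
    print(select_grasp([near, far], prev))  # stays close to the previous grasp
    print(select_grasp([near, far], None))  # no history: highest quality wins
```

Raising `switch_penalty` keeps the target steadier while the user moves the object; setting it to zero recovers independent per-frame selection.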

This work won the Best Paper Award on Human-Robot Interaction (HRI) at ICRA 2021.

Model Predictive Control for Fluid Handovers

Previous work did not consider human comfort when planning the robot’s motions during a human-to-robot handover. In our recent work, we propose to generate smooth motions with an efficient model-predictive control (MPC) framework that integrates perception and complex domain-specific constraints into the optimization problem. We introduce a learning-based grasp reachability model to select candidate grasps that maximize the robot’s manipulability, giving it more freedom to satisfy these constraints. Finally, we integrate a neural-network force/torque classifier that detects contact events from noisy data. The paper has been accepted to ICRA 2022.
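The work above learns a grasp reachability model rather than computing manipulability analytically. To illustrate what "maximizing manipulability" means, the sketch below swaps in a direct calculation: it ranks candidate end-effector targets for a hypothetical planar two-link arm by Yoshikawa's manipulability measure, w = sqrt(det(J Jᵀ)), which drops to zero at singular configurations such as a fully stretched arm. The link lengths, the inverse-kinematics helper, and the candidate targets are all illustrative assumptions.

```python
import math
from typing import List, Optional, Sequence, Tuple

LINK1, LINK2 = 0.4, 0.3  # hypothetical link lengths in meters


def inverse_kinematics(x: float, y: float) -> Optional[Tuple[float, float]]:
    """One of the two IK solutions for a planar 2-link arm; None if unreachable."""
    c2 = (x * x + y * y - LINK1 ** 2 - LINK2 ** 2) / (2 * LINK1 * LINK2)
    if abs(c2) > 1.0:
        return None
    q2 = math.acos(c2)
    q1 = math.atan2(y, x) - math.atan2(LINK2 * math.sin(q2), LINK1 + LINK2 * math.cos(q2))
    return q1, q2


def jacobian(q1: float, q2: float) -> Tuple[Tuple[float, float], Tuple[float, float]]:
    """2x2 Jacobian of the end-effector position with respect to the joint angles."""
    s1, c1 = math.sin(q1), math.cos(q1)
    s12, c12 = math.sin(q1 + q2), math.cos(q1 + q2)
    return ((-LINK1 * s1 - LINK2 * s12, -LINK2 * s12),
            (LINK1 * c1 + LINK2 * c12, LINK2 * c12))


def manipulability(q1: float, q2: float) -> float:
    (a, b), (c, d) = jacobian(q1, q2)
    # For a square Jacobian, sqrt(det(J J^T)) equals |det(J)|.
    return abs(a * d - b * c)


def rank_targets(targets: Sequence[Tuple[float, float]]) -> List[Tuple[Tuple[float, float], float]]:
    """Drop unreachable targets and sort the rest by manipulability, best first."""
    scored = []
    for x, y in targets:
        q = inverse_kinematics(x, y)
        if q is not None:
            scored.append(((x, y), manipulability(*q)))
    return sorted(scored, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for target, w in rank_targets([(0.69, 0.0), (0.5, 0.0), (1.0, 0.0)]):
        print(target, round(w, 3))
```

In this toy model, a target near the edge of the workspace (arm almost fully stretched) scores lower than one reached with a bent elbow, and an out-of-reach target is discarded entirely.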

References

- Model Predictive Control for Fluid Human-to-Robot Handovers. Wei Yang*, Balakumar Sundaralingam*, Chris Paxton*, Iretiayo Akinola, Yu-Wei Chao, Maya Cakmak, Dieter Fox. International Conference on Robotics and Automation (ICRA), Philadelphia, USA, 2022.

- Reactive Human-to-Robot Handovers of Arbitrary Objects. Wei Yang, Chris Paxton, Arsalan Mousavian, Yu-Wei Chao, Maya Cakmak, Dieter Fox. International Conference on Robotics and Automation (ICRA), Xi’an, China, 2021.

- Goal-Auxiliary Actor-Critic for 6D Robotic Grasping with Point Clouds. Lirui Wang, Yu Xiang, Wei Yang, Arsalan Mousavian, Dieter Fox. Conference on Robot Learning (CoRL), London, UK, 2021.

- Human Grasp Classification for Reactive Human-to-Robot Handovers. Wei Yang, Chris Paxton, Maya Cakmak, Dieter Fox. International Conference on Intelligent Robots and Systems (IROS), On-Demand, 2020.

- Collaborative Interaction Models for Optimized Human-Robot Teamwork. Adam Fishman, Chris Paxton, Wei Yang, Nathan Ratliff, Byron Boots, Dieter Fox. International Conference on Intelligent Robots and Systems (IROS), On-Demand, 2020.