
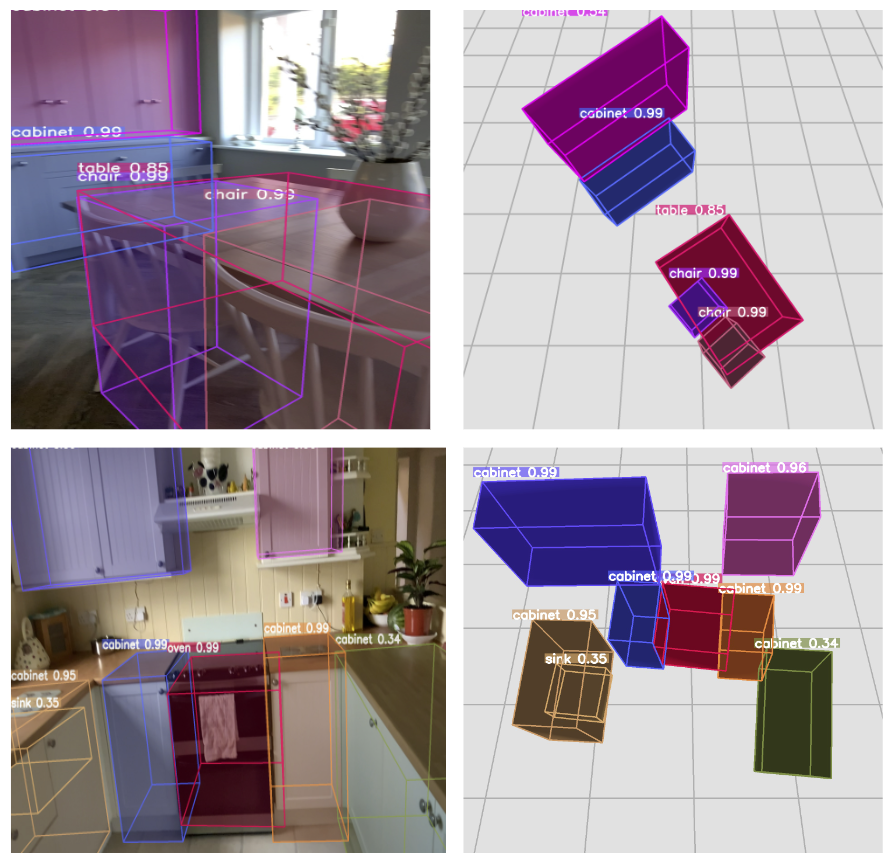

We present 3DiffTection, a cutting-edge method for 3D detection from single

images, grounded in features from a 3D-aware diffusion model. Annotating

large-scale image data for 3D object detection is both resource-intensive and

time-consuming. Recently, large image diffusion models have gained traction as

potent feature extractors for 2D perception tasks. However, since these features,

originally trained on paired text and image data, are not directly adaptable to 3D

tasks and often misalign with target data, our approach bridges these gaps through

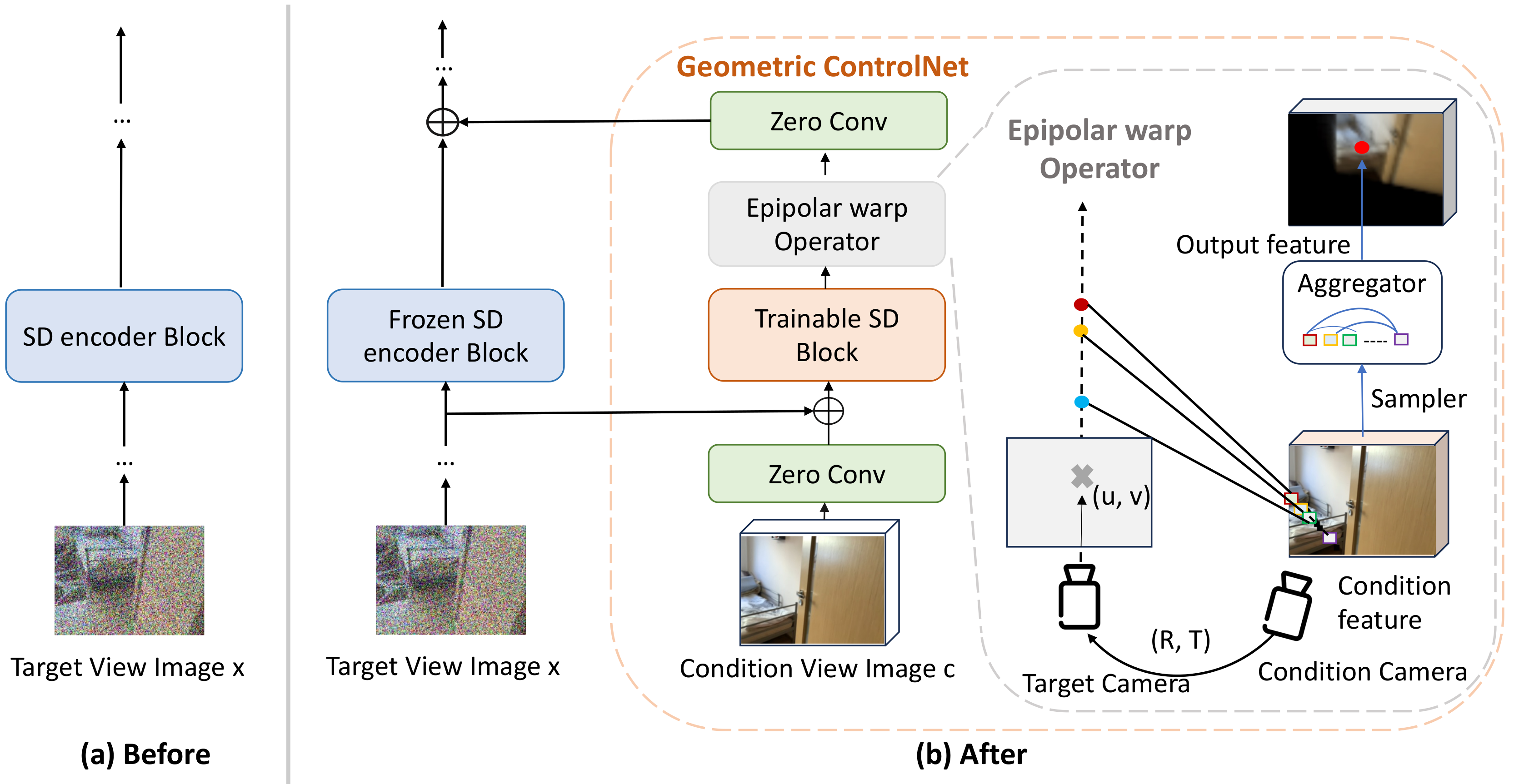

two specialized tuning strategies: geometric and semantic. For geometric tuning,

we refine a diffusion model on a view synthesis task, introducing a novel epipolar

warp operator. This task meets two pivotal criteria: the necessity for 3D awareness and reliance solely on posed image data, which are readily available (e.g.,

from videos). For semantic refinement, we further train the model on target data

using box supervision. Both tuning phases employ a ControlNet to preserve the

integrity of the original feature capabilities. In the final step, we harness these

capabilities to conduct a test-time prediction ensemble across multiple virtual viewpoints. Through this methodology, we derive 3D-aware features tailored for 3D

detection and excel in identifying cross-view point correspondences.
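
The abstract does not spell out the epipolar warp operator, so the following is only background on the two-view constraint that such an operator presumably builds on, not the authors' implementation. Given posed images, a pixel in the source view can only correspond to points on a single line (the epipolar line) in the target view. The sketch below computes that line, assuming a zero-skew pinhole camera and a relative pose convention of `X_tgt = R X_src + t`; all function names and numeric values are hypothetical and chosen for illustration.

```python
def matmul(a, b):
    """Multiply two matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a):
    """Transpose a matrix given as nested lists."""
    return [list(row) for row in zip(*a)]


def skew(t):
    """Cross-product matrix [t]_x, so that [t]_x v equals t x v."""
    tx, ty, tz = t
    return [[0.0, -tz, ty], [tz, 0.0, -tx], [-ty, tx, 0.0]]


def inv_intrinsics(fx, fy, cx, cy):
    """Closed-form inverse of a zero-skew pinhole intrinsics matrix (assumed camera model)."""
    return [[1.0 / fx, 0.0, -cx / fx],
            [0.0, 1.0 / fy, -cy / fy],
            [0.0, 0.0, 1.0]]


def fundamental_matrix(k_inv_src, k_inv_tgt, R, t):
    """F = K_tgt^-T [t]_x R K_src^-1, assuming X_tgt = R X_src + t."""
    essential = matmul(skew(t), R)
    return matmul(matmul(transpose(k_inv_tgt), essential), k_inv_src)


def epipolar_line(F, pixel):
    """Return (a, b, c) such that a*u + b*v + c = 0 in the target image."""
    u, v = pixel
    column = matmul(F, [[u], [v], [1.0]])
    return tuple(row[0] for row in column)


# Illustrative values only: identical cameras, pure sideways translation.
K_INV = inv_intrinsics(500.0, 500.0, 320.0, 240.0)
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
F = fundamental_matrix(K_INV, K_INV, IDENTITY, (0.1, 0.0, 0.0))
line = epipolar_line(F, (400.0, 300.0))
```

With a purely horizontal camera shift, the resulting line is the image row of the source pixel, so a matching search in the target view can be restricted to that row. How 3DiffTection aggregates features along such lines is not described in this section.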

