DIB-R++: Learning to Predict Lighting and Material with a Hybrid Differentiable Renderer


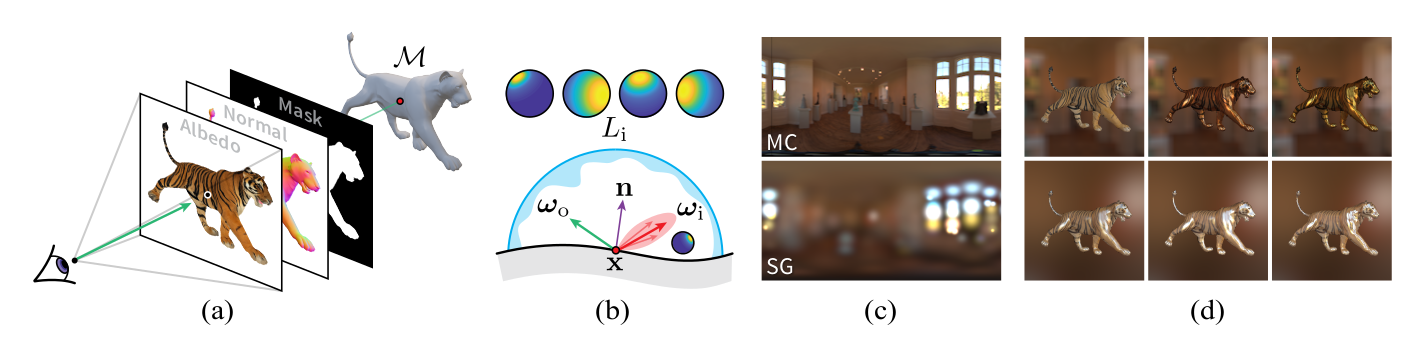

We consider the challenging problem of predicting intrinsic object properties from a single image by exploiting differentiable renderers. Many previous learning-based approaches for inverse graphics adopt rasterization-based renderers and assume naive lighting and material models, which often fail to account for the non-Lambertian, specular reflections commonly observed in the wild. In this work, we propose DIB-R++, a hybrid differentiable renderer that supports these photorealistic effects by combining rasterization and ray tracing, taking advantage of their respective strengths: speed and realism. Our renderer incorporates environmental lighting and spatially-varying material models to efficiently approximate light transport, either through direct estimation or via spherical basis functions. Compared to more advanced physics-based differentiable renderers that rely on path tracing, DIB-R++ is highly performant thanks to its compact and expressive shading model. This enables easy integration with learning frameworks for predicting geometry, reflectance and lighting from a single image without requiring any ground truth. We experimentally demonstrate that our approach achieves better material and lighting disentanglement on synthetic and real data than existing rasterization-based approaches, and we showcase several artistic applications, including material editing and relighting.
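To illustrate the hybrid idea, the sketch below assumes a rasterizer has already produced per-pixel surface normals (a G-buffer); the shading stage then "traces" one mirror-reflected direction per pixel, along which specular lighting could be looked up. This is a minimal NumPy sketch under our own assumptions (view directions point from the surface toward the camera; `reflect_dirs` and its inputs are hypothetical names), not the DIB-R++ implementation.

```python
import numpy as np


def normalize(v: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Normalize vectors along the last axis (guarding against zero length)."""
    return v / np.maximum(np.linalg.norm(v, axis=-1, keepdims=True), eps)


def reflect_dirs(normals: np.ndarray, view_dirs: np.ndarray) -> np.ndarray:
    """Mirror-reflect per-pixel view directions about per-pixel normals.

    normals:   (H, W, 3) normals, e.g. from a rasterized G-buffer (hypothetical input).
    view_dirs: (H, W, 3) directions from the surface point toward the camera.
    Returns (H, W, 3) unit reflection directions r = 2 (n . v) n - v.
    """
    n = normalize(normals)
    v = normalize(view_dirs)
    n_dot_v = np.sum(n * v, axis=-1, keepdims=True)
    return normalize(2.0 * n_dot_v * n - v)


if __name__ == "__main__":
    # A 1x2 "image": one camera-facing pixel and one pixel tilted by 45 degrees.
    normals = np.array([[[0.0, 0.0, 1.0], [0.0, 1.0, 1.0]]])
    view = np.broadcast_to(np.array([0.0, 0.0, 1.0]), normals.shape)
    print(reflect_dirs(normals, view))
```

The camera-facing pixel reflects straight back toward the camera, while the tilted pixel reflects the view ray upward; in a hybrid renderer, these directions would index the lighting model instead of launching full path-traced rays.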


Jointly Predicting Geometry, Texture, Light and Material

Rows (top to bottom): GT, DIB-R++, DIB-R

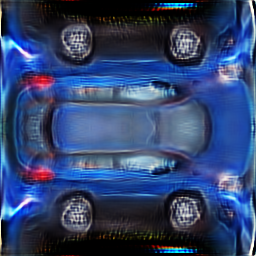

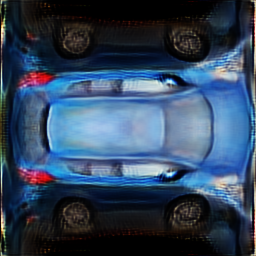

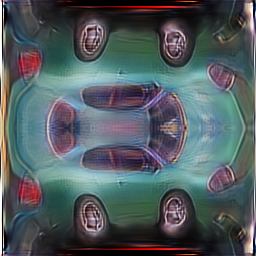

3D Reconstruction on Glossy Surfaces with Spherical Gaussian Shading: Given an input image (1st row, 1st column), we predict 3D geometry, texture (1st column), spherical Gaussian lighting (4th column) and material, and render the result with and without lighting effects (2nd and 3rd columns). We compare our model with the ground truth (GT, 1st row) and with our previous work, DIB-R (3rd row).
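Spherical Gaussian (SG) lighting is commonly written as a sum of lobes G(v; ξ, λ, μ) = μ exp(λ(v·ξ − 1)), with unit axis ξ, sharpness λ and amplitude μ. The sketch below assumes that standard parameterization (the lobe count and exact parameterization DIB-R++ uses are not given here). It evaluates an SG environment along query directions and gives the closed-form integral of one lobe over the sphere, 2πμ/λ · (1 − e^(−2λ)). Closed forms like this are what make SG shading cheap compared with sampling.

```python
import numpy as np


def sg_eval(dirs, axes, sharpness, amplitude):
    """Evaluate a mixture of spherical Gaussians along query directions.

    dirs:      (N, 3) unit query directions v.
    axes:      (K, 3) unit lobe axes xi.
    sharpness: (K,)   lobe sharpness lambda > 0.
    amplitude: (K, C) lobe amplitude mu per color channel.
    Returns (N, C) radiance: sum_k mu_k * exp(lambda_k * (v . xi_k - 1)).
    """
    cos = dirs @ axes.T                                   # (N, K) cosines v . xi_k
    weights = np.exp(sharpness[None, :] * (cos - 1.0))    # (N, K) lobe falloff
    return weights @ amplitude                            # (N, C) summed radiance


def sg_integral(sharpness, amplitude):
    """Closed-form integral of each lobe over the unit sphere.

    Returns (K, C) values 2*pi*mu/lambda * (1 - exp(-2*lambda)).
    """
    factor = 2.0 * np.pi / sharpness * (1.0 - np.exp(-2.0 * sharpness))  # (K,)
    return factor[:, None] * amplitude


if __name__ == "__main__":
    # One warm-colored lobe pointing along +z.
    axes = np.array([[0.0, 0.0, 1.0]])
    sharpness = np.array([10.0])
    amplitude = np.array([[1.0, 0.8, 0.6]])
    dirs = np.array([[0.0, 0.0, 1.0], [1.0, 0.0, 0.0]])
    print(sg_eval(dirs, axes, sharpness, amplitude))   # full amplitude on-axis, ~0 at 90 degrees
    print(sg_integral(sharpness, amplitude))
```

Because each lobe is smooth and analytic, its parameters (axis, sharpness, amplitude) can be optimized directly by gradient descent, which is one reason spherical basis functions pair well with a learned lighting predictor.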

Rows (top to bottom): GT, DIB-R++, DIB-R

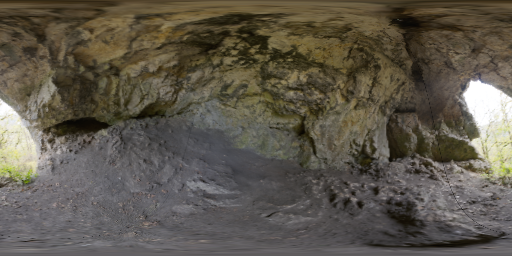

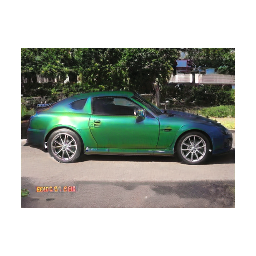

3D Reconstruction on Metallic Surfaces with Monte Carlo Shading: Given an input image (1st row, 1st column), we predict 3D geometry, texture (1st column) and environment map lighting (4th column), and render the result with and without lighting effects (2nd and 3rd columns). We compare our model with the ground truth (GT, 1st row) and with our previous work, DIB-R (3rd row).
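Monte Carlo shading estimates the lighting integral by sampling directions and looking up an environment map. As an illustration only, the sketch below shades a Lambertian point under an equirectangular (lat-long) environment map using cosine-weighted hemisphere sampling. The lat-long convention, the function names, the choice of cosine-weighted sampling (rather than whatever importance-sampling scheme DIB-R++ uses, particularly for its specular term), and the omission of visibility are all our assumptions.

```python
import numpy as np


def latlong_lookup(env, dirs):
    """Nearest-neighbour lookup in an equirectangular environment map.

    env:  (H, W, C) radiance map. Assumed convention: +y is up, row 0 is the zenith.
    dirs: (N, 3) unit directions. Returns (N, C) radiance values.
    """
    H, W, _ = env.shape
    theta = np.arccos(np.clip(dirs[:, 1], -1.0, 1.0))    # polar angle from +y, in [0, pi]
    phi = np.arctan2(dirs[:, 0], -dirs[:, 2])             # azimuth, in [-pi, pi]
    rows = np.minimum((theta / np.pi * H).astype(int), H - 1)
    cols = np.minimum(((phi + np.pi) / (2.0 * np.pi) * W).astype(int), W - 1)
    return env[rows, cols]


def cosine_sample_hemisphere(normal, num_samples, rng):
    """Draw cosine-weighted unit directions (pdf = cos(theta) / pi) around a unit normal."""
    u1 = rng.random(num_samples)
    u2 = rng.random(num_samples)
    r = np.sqrt(u1)                       # uniform disk sample, projected up (Malley's method)
    phi = 2.0 * np.pi * u2
    local = np.stack([r * np.cos(phi), r * np.sin(phi), np.sqrt(1.0 - u1)], axis=1)
    # Orthonormal basis (t, b, normal) with t x b = normal.
    helper = np.array([1.0, 0.0, 0.0]) if abs(normal[0]) < 0.9 else np.array([0.0, 1.0, 0.0])
    t = np.cross(helper, normal)
    t /= np.linalg.norm(t)
    b = np.cross(normal, t)
    return local[:, :1] * t + local[:, 1:2] * b + local[:, 2:3] * normal


def shade_diffuse_mc(albedo, normal, env, num_samples=256, seed=0):
    """Monte Carlo estimate of Lambertian outgoing radiance under an environment map.

    L_o = albedo / pi * E. With cosine-weighted samples the irradiance estimator is
    E ~= (pi / N) * sum_i L(w_i), so L_o ~= albedo * mean_i L(w_i).
    Visibility (self-shadowing) is ignored in this sketch.
    """
    rng = np.random.default_rng(seed)
    n = normal / np.linalg.norm(normal)
    dirs = cosine_sample_hemisphere(n, num_samples, rng)
    radiance = latlong_lookup(env, dirs)          # (N, C)
    return albedo * radiance.mean(axis=0)


if __name__ == "__main__":
    env = np.ones((16, 32, 3))                    # constant white environment
    albedo = np.array([0.5, 0.25, 1.0])
    print(shade_diffuse_mc(albedo, np.array([0.0, 1.0, 0.0]), env))
```

With a constant environment of radiance 1, every sample returns 1, so the estimate equals the albedo exactly; this is a convenient sanity check. For glossy or metallic materials, the same loop would sample around the reflection direction instead, at the cost of more samples per pixel.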


Real Imagery Reconstruction

Rows (top to bottom): GT, DIB-R++, DIB-R

3D Reconstruction on a GAN-generated Dataset with Spherical Gaussian Shading: We further evaluate our model on a realistic image dataset generated by StyleGAN. DIB-R++ recovers a meaningful decomposition of texture and lighting, as shown by the cleaner texture maps and the directional highlights in the re-rendered videos. In contrast, DIB-R fails to capture specular lighting effects because of its naive shading model.


3D Reconstruction on the LSUN Dataset with Spherical Gaussian Shading: Lastly, we evaluate our model on real images. DIB-R++, trained on the StyleGAN dataset, generalizes well to real images because the two data distributions are similar. It also predicts correct directions for strong specular lighting and produces clean, usable textures.