Abstract

Realistic simulators are critical for training and verifying robotics systems. While most contemporary simulators are hand-crafted, a scalable alternative is to use machine learning to learn, directly from data, how the environment behaves in response to an action. In this work, we aim to learn to simulate a dynamic environment directly in pixel space by watching unannotated sequences of frames and their associated actions. We introduce a novel high-quality neural simulator, DriveGAN, that achieves controllability by disentangling different components without supervision. In addition to steering controls, it includes controls for sampling features of a scene, such as the weather and the location of non-player objects. Since DriveGAN is a fully differentiable simulator, it also allows re-simulation of a given video sequence, letting an agent drive through a recorded scene again, possibly taking different actions. We train DriveGAN on multiple datasets, including 160 hours of real-world driving data. We show that our approach greatly surpasses the performance of previous data-driven simulators and enables new features not explored before.
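Re-simulation relies on the simulator being differentiable: the error between a simulated and a recorded sequence can be back-propagated to the simulator's inputs. The sketch below illustrates only that general idea on a hypothetical one-dimensional toy simulator (`simulate`, `recover_actions`, and the `decay` parameter are illustrative names, not DriveGAN's interface). It recovers an action sequence that reproduces an observed trajectory by gradient descent, after which the trajectory can be replayed with edited actions.

```python
from typing import List


def simulate(s0: float, actions: List[float], decay: float = 0.9) -> List[float]:
    """Roll out a hypothetical toy simulator s_{t+1} = decay * s_t + u_t."""
    states = [s0]
    for u in actions:
        states.append(decay * states[-1] + u)
    return states


def recover_actions(observed: List[float], decay: float = 0.9,
                    lr: float = 0.02, steps: int = 2000) -> List[float]:
    """Fit actions by gradient descent so the rollout from observed[0] matches observed."""
    n = len(observed) - 1
    actions = [0.0] * n
    for _ in range(steps):
        states = simulate(observed[0], actions, decay)
        # residuals[t - 1] = s_t - o_t for t = 1..n
        residuals = [states[t] - observed[t] for t in range(1, n + 1)]
        # Gradient of sum_t r_t^2 w.r.t. u_k: u_k reaches s_t (t > k) with weight decay^(t-k-1)
        grads = [
            sum(2.0 * residuals[t - 1] * decay ** (t - k - 1) for t in range(k + 1, n + 1))
            for k in range(n)
        ]
        actions = [u - lr * g for u, g in zip(actions, grads)]
    return actions


# Record a trajectory, recover its actions, then replay it with different later actions.
true_actions = [0.5, -0.2, 0.1, 0.0, 0.3]
observed = simulate(1.0, true_actions)
recovered = recover_actions(observed)
replayed = simulate(observed[0], recovered[:2] + [1.0, 1.0, 1.0])
```

Because this toy is linear, the fit could be solved in closed form; gradient descent is used here only because it is the mechanism that carries over to a nonlinear, differentiable neural simulator.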

Our objective is to learn a high-quality controllable neural simulator by watching sequences of video frames and their associated actions.

We aim to achieve controllability in two aspects:

1) We assume there is an egocentric agent that can be controlled by a given action.

2) We want to control different aspects of the current scene, for example, by modifying an object or changing the background color.

Generating high-quality temporally-consistent image sequences is a challenging problem.

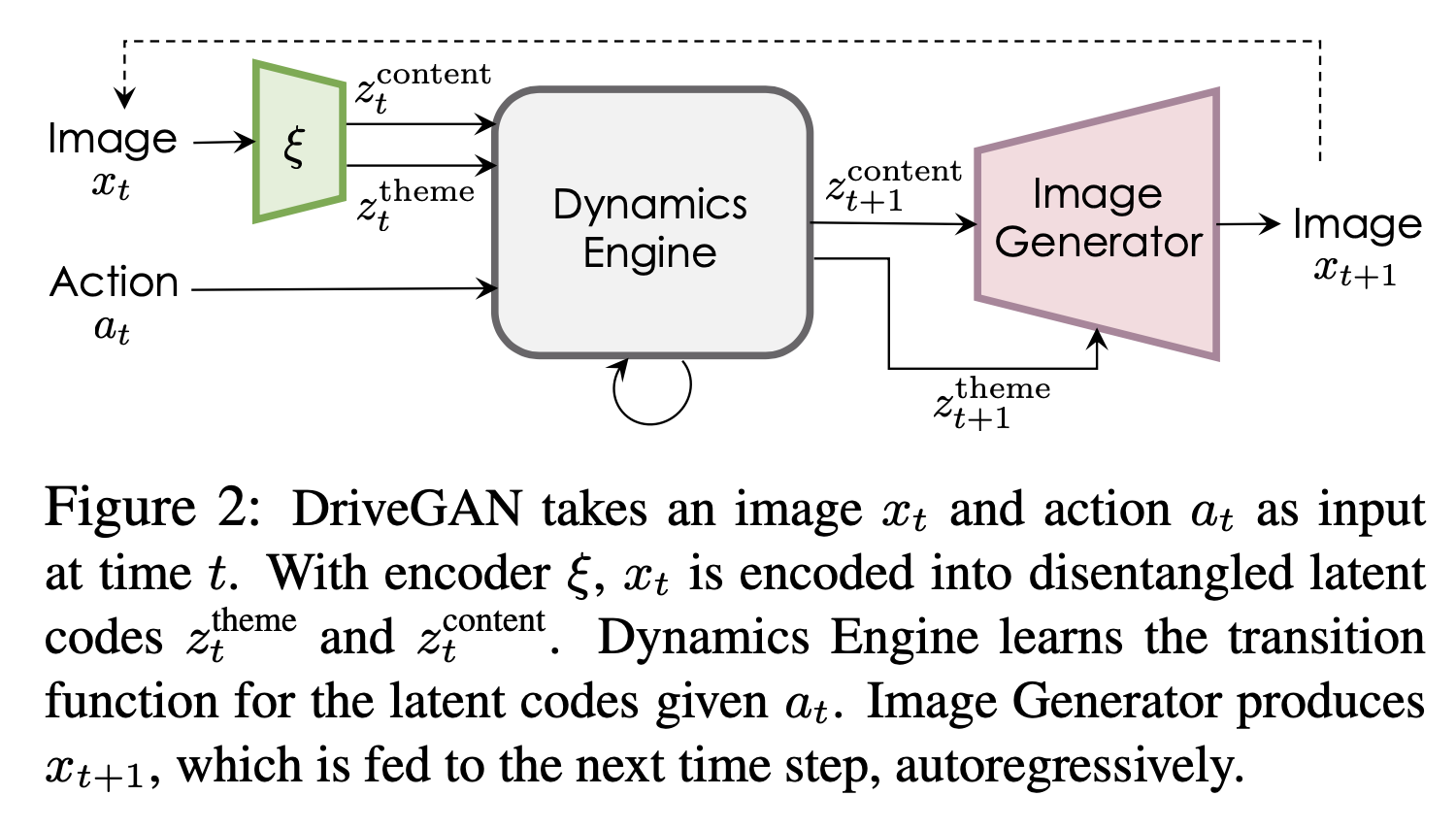

Rather than generating a sequence of frames directly, we split the learning process into two steps, motivated by World Models [3].

First, we pre-train an encoder-decoder architecture that produces a latent space for images.

It disentangles themes and content while achieving high-quality generation by combining a Variational Auto-Encoder (VAE) with a Generative Adversarial Network (GAN).
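The VAE side of the encoder can be illustrated with the standard reparameterization trick and the KL term against a unit Gaussian prior. The sketch below assumes, hypothetically, that the encoder outputs a separate mean and log-variance for a theme code and for a content code; the function names are illustrative, and the reconstruction and adversarial (GAN) losses the full model would also use are not shown.

```python
import math
import random
from typing import List, Tuple

Stats = Tuple[List[float], List[float]]  # (mu, logvar) for one latent code


def reparameterize(mu: List[float], logvar: List[float], rng: random.Random) -> List[float]:
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), so z stays differentiable in mu, logvar."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]


def kl_to_standard_normal(mu: List[float], logvar: List[float]) -> float:
    """KL(N(mu, diag(exp(logvar))) || N(0, I)), summed over dimensions."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, logvar))


def sample_theme_and_content(theme: Stats, content: Stats, rng: random.Random):
    """Hypothetical: sample separate theme and content codes and return their total KL."""
    z_theme = reparameterize(*theme, rng)
    z_content = reparameterize(*content, rng)
    kl = kl_to_standard_normal(*theme) + kl_to_standard_normal(*content)
    return z_theme, z_content, kl


rng = random.Random(0)
z_theme, z_content, kl = sample_theme_and_content(([0.0, 0.0], [0.0, 0.0]), ([1.0], [0.0]), rng)
```

Keeping theme and content as separate codes is what makes it possible, in principle, to decode a new theme code together with an unchanged content code.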

A Dynamics Engine then learns the dynamics in this latent space.

It further disentangles action-dependent and action-independent content.
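One way to picture the split between action-dependent and action-independent content is a latent state with two parts, where only one part receives the action. The toy update below is a hypothetical sketch (linear decay, an assumed (steering, speed) action, illustrative names), not the Dynamics Engine's actual architecture, which this summary does not describe.

```python
from dataclasses import dataclass
from typing import List, Tuple

Action = Tuple[float, float]  # hypothetical (steering, speed) action


@dataclass
class LatentState:
    action_dependent: List[float]    # part that should respond to the ego agent's action
    action_independent: List[float]  # part that evolves regardless of the action


def dynamics_step(z: LatentState, action: Action, decay: float = 0.9) -> LatentState:
    """Toy latent transition: only the action-dependent part is conditioned on the action."""
    dep = [decay * v + a for v, a in zip(z.action_dependent, action)]
    indep = [decay * v for v in z.action_independent]
    return LatentState(dep, indep)


z0 = LatentState(action_dependent=[0.0, 0.0], action_independent=[1.0, -1.0])
turn_left = dynamics_step(z0, (-0.5, 1.0))
turn_right = dynamics_step(z0, (0.5, 1.0))
```

In a learned model the split is not hard-coded like this; the example only shows the property being sought: changing the action leaves the action-independent part unchanged.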

We built an interactive user interface for playing with DriveGAN. The steering wheel and speed can be controlled with the keyboard. Users can randomize different components, or pick from a predefined list of themes and objects to make specific changes.

For comparison, all models are given the initial frame of the ground-truth video and generate frames autoregressively using the ground-truth action sequence.
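This protocol amounts to an open-loop autoregressive rollout: each model starts from the ground-truth first frame, is fed the ground-truth actions, and predicts each new frame from its own previous output. A minimal sketch, assuming each model is exposed as a hypothetical `step(frame, action) -> frame` function (the names `rollout` and `compare_models` are illustrative):

```python
from typing import Callable, Dict, List, Sequence, TypeVar

Frame = TypeVar("Frame")
Act = TypeVar("Act")


def rollout(step: Callable[[Frame, Act], Frame], first_frame: Frame,
            actions: Sequence[Act]) -> List[Frame]:
    """Generate frames autoregressively: each new frame is predicted from the previous prediction."""
    frames = [first_frame]
    for action in actions:
        frames.append(step(frames[-1], action))
    return frames


def compare_models(models: Dict[str, Callable[[Frame, Act], Frame]],
                   gt_frames: Sequence[Frame], actions: Sequence[Act]) -> Dict[str, List[Frame]]:
    """Give every model the same initial ground-truth frame and the same action sequence."""
    return {name: rollout(step, gt_frames[0], actions) for name, step in models.items()}


# Toy numeric "frames" and two placeholder models.
models = {"toy_a": lambda f, a: f + a, "toy_b": lambda f, a: f}
outputs = compare_models(models, [0, 1, 3, 6], [1, 2, 3])
```

Because errors compound over the rollout, this setup tests long-horizon consistency rather than single-step prediction.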

Video comparison (columns): Ground Truth, Action-RNN [1], SAVP [2], World Model [3], GameGAN [4], DriveGAN (Ours)

[1] Chiappa, Silvia, Sébastien Racaniere, Daan Wierstra, and Shakir Mohamed. "Recurrent environment simulators." arXiv preprint arXiv:1704.02254 (2017).

[2] Lee, Alex X., Richard Zhang, Frederik Ebert, Pieter Abbeel, Chelsea Finn, and Sergey Levine. "Stochastic adversarial video prediction." arXiv preprint arXiv:1804.01523 (2018).

[3] Ha, David, and Jürgen Schmidhuber. "World models." arXiv preprint arXiv:1803.10122 (2018).

[4] Kim, Seung Wook, Yuhao Zhou, Jonah Philion, Antonio Torralba, and Sanja Fidler. "Learning to simulate dynamic environments with GameGAN." In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 1231-1240. 2020.