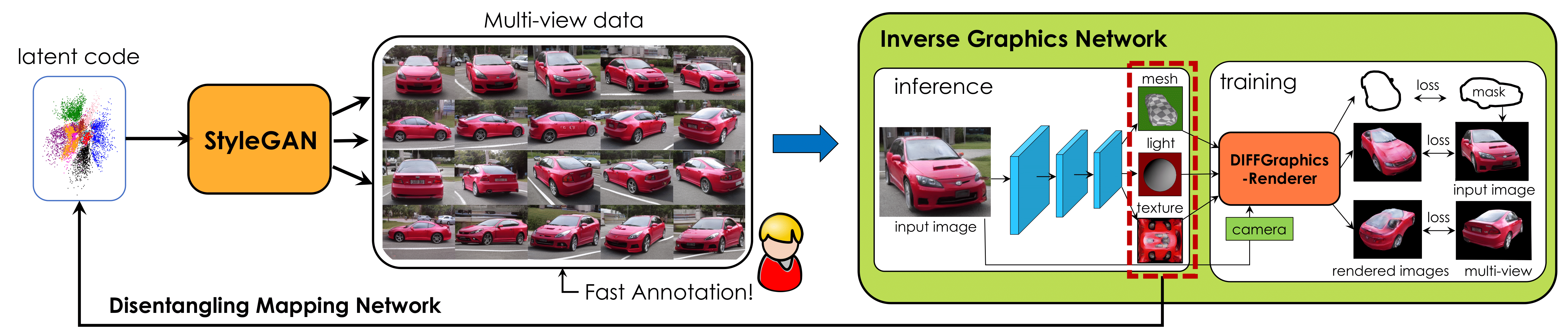

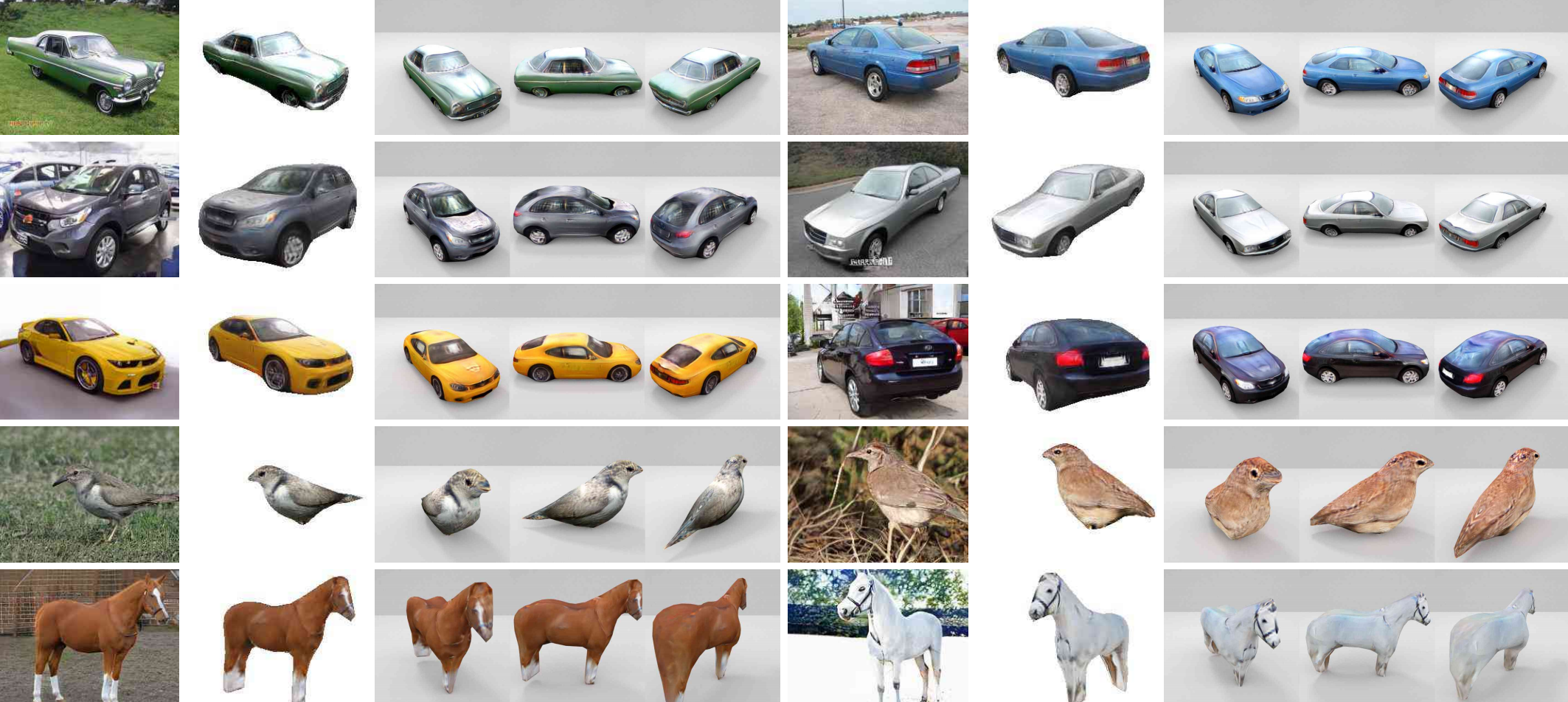

Differentiable rendering has paved the way to training neural networks to perform "inverse graphics" tasks such as predicting 3D geometry from monocular photographs. To train high-performing models, most current approaches rely on multi-view imagery, which is not readily available in practice. Recent Generative Adversarial Networks (GANs) that synthesize images, in contrast, seem to acquire 3D knowledge implicitly during training: object viewpoints can be changed simply by manipulating the latent codes. However, these latent codes often lack further physical interpretation, and thus GANs cannot easily be inverted to perform explicit 3D reasoning. In this paper, we aim to extract and disentangle the 3D knowledge learned by generative models by utilizing differentiable renderers. Key to our approach is to exploit GANs as a multi-view data generator to train an inverse graphics network using an off-the-shelf differentiable renderer, and to use the trained inverse graphics network as a teacher to disentangle the GAN's latent code into interpretable 3D properties. The entire architecture is trained iteratively using cycle consistency losses. We show that our approach significantly outperforms state-of-the-art inverse graphics networks trained on existing datasets, both quantitatively and via user studies. We further showcase the disentangled GAN as a controllable 3D "neural renderer", complementing traditional graphics renderers.

3D Reconstruction from Single-view Images

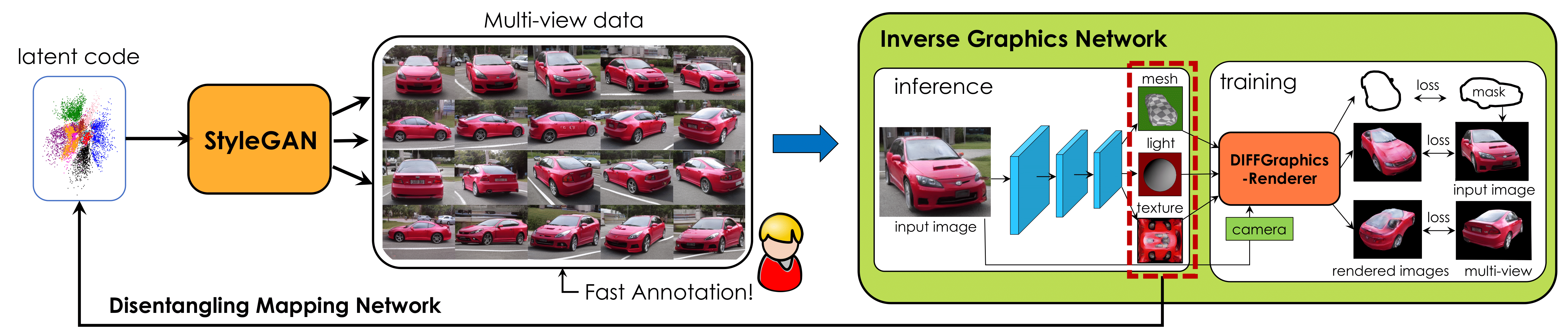

3D Reconstruction Result: Given input images (1st column), we predict 3D shape and texture and render them from the same viewpoint (2nd column). The remaining columns show renderings from 3 other views to showcase 3D quality. Our model is able to reconstruct cars with various shapes, textures, and viewpoints. We also apply the same approach to harder (articulated) objects, i.e., birds and horses.

More Examples: We provide more examples to show the 3D reconstruction quality.

StyleGAN Disentanglement via Interpretable 3D Properties

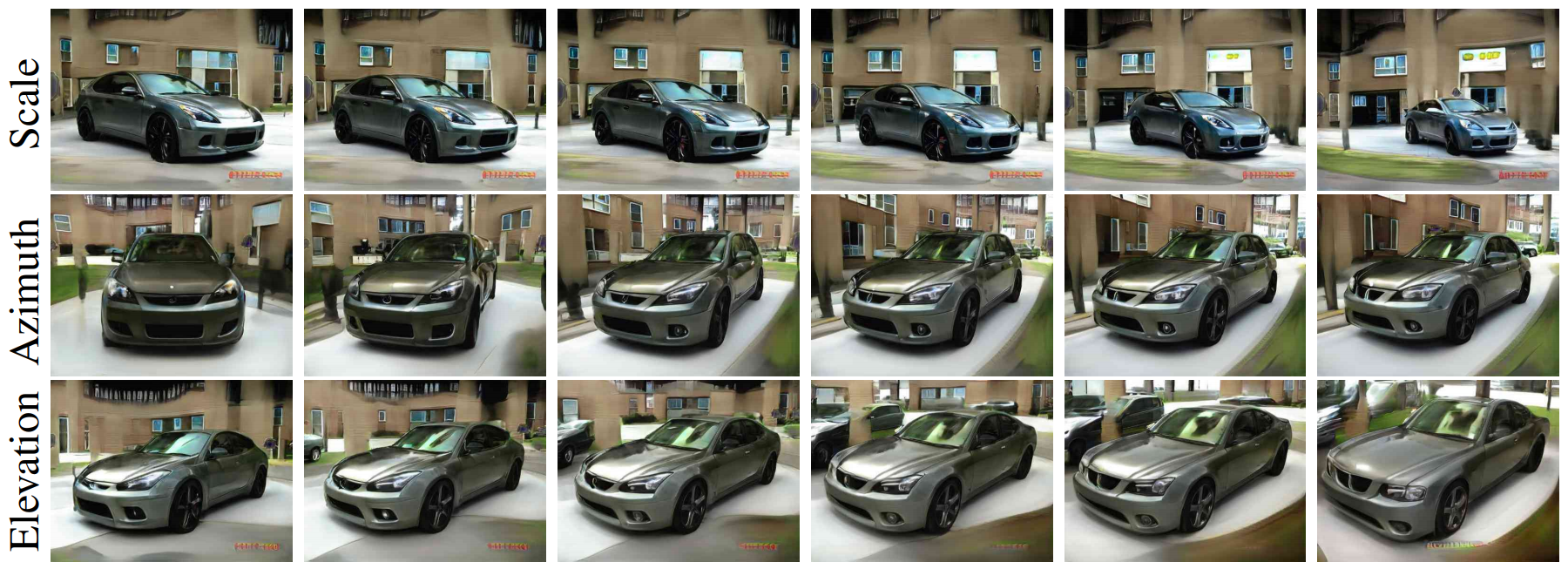

Camera Controller: We manipulate the azimuth, scale, and elevation parameters with StyleGAN-R to synthesize images from new viewpoints while keeping the content code fixed.
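To make the camera parameters concrete, here is a minimal sketch of how an azimuth/elevation pair can be turned into a camera position on a sphere around the object. It is an illustration only, not the code behind StyleGAN-R: the function names `camera_position` and `sweep_azimuth`, the y-up axis convention, and the choice to model scale as a camera distance are all assumptions made for this example.

```python
import math

def camera_position(azimuth_deg, elevation_deg, distance):
    """Return (x, y, z) of a camera on a sphere centered at the origin.

    Assumed convention (not from the paper): y is up, azimuth 0 looks
    down the +z axis, and `distance` stands in for the scale parameter.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = distance * math.cos(el) * math.sin(az)
    y = distance * math.sin(el)
    z = distance * math.cos(el) * math.cos(az)
    return (x, y, z)

def sweep_azimuth(n_views, elevation_deg=15.0, distance=2.5):
    """Evenly spaced viewpoints around the object at a fixed elevation.

    Only the camera changes between views; any content description
    (shape, texture, background) would be held fixed by the caller.
    """
    step = 360.0 / n_views
    return [camera_position(i * step, elevation_deg, distance)
            for i in range(n_views)]

# Illustrative default values, chosen arbitrarily for this sketch.
views = sweep_azimuth(8)
```

Each entry of `views` lies at the same distance from the origin, so sweeping the azimuth changes only the viewing direction, which mirrors the idea of varying the viewpoint while the content stays fixed.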

3D Manipulation: We sample 3 cars in column 1. In the 2nd column, we replace the shape of all cars with the shape of Car 1 (red box). In the 3rd column, we transfer the texture of Car 2 (green box) to the other cars. In the last column, we paste the background of Car 3 (blue box) onto the other cars. Examples indicated with boxes are unchanged.
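The swaps described in this caption can be sketched as simple operations on a disentangled code. This is a toy illustration under stated assumptions, not the actual model: the `DisentangledCode` container, its three fields, the `transfer` helper, and the numeric values are all hypothetical, and a real code would be a high-dimensional latent vector fed to the generator.

```python
from dataclasses import dataclass, replace
from typing import List, Tuple

@dataclass(frozen=True)
class DisentangledCode:
    """Hypothetical container for one object's disentangled properties."""
    shape: Tuple[float, ...]
    texture: Tuple[float, ...]
    background: Tuple[float, ...]

def transfer(codes: List[DisentangledCode], source_index: int,
             attribute: str) -> List[DisentangledCode]:
    """Copy one attribute from the source object to every object.

    The source object already holds that attribute, so it comes back
    unchanged, matching the boxed examples in the figure.
    """
    if attribute not in ("shape", "texture", "background"):
        raise ValueError(f"unknown attribute: {attribute}")
    donor = getattr(codes[source_index], attribute)
    return [replace(code, **{attribute: donor}) for code in codes]

# Made-up placeholder values, for illustration only.
cars = [
    DisentangledCode(shape=(0.1, 0.2), texture=(1.0,), background=(5.0,)),
    DisentangledCode(shape=(0.3, 0.4), texture=(2.0,), background=(6.0,)),
    DisentangledCode(shape=(0.5, 0.6), texture=(3.0,), background=(7.0,)),
]

same_shape = transfer(cars, 0, "shape")          # column 2: shape of Car 1
same_texture = transfer(cars, 1, "texture")      # column 3: texture of Car 2
same_background = transfer(cars, 2, "background")  # last column: background of Car 3
```

Because each property lives in its own field, replacing one leaves the other two untouched, which is the behavior the figure is meant to demonstrate.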

Multi-view Synthesis

Objects from Different Views: We show an example of car images synthesized from different viewpoints by controlling the latent code of StyleGAN using our proposed technique.

More Examples: We show more examples with diverse shapes and textures. Notice how well aligned the cars are in each column.