\* Equal contribution

Neural radiance fields achieve unprecedented quality for novel view synthesis, but their volumetric formulation remains expensive, requiring a huge number of samples to render high-resolution images. Volumetric encodings are essential to represent fuzzy geometry such as foliage and hair, and they are well-suited for stochastic optimization. Yet, many scenes ultimately consist largely of solid surfaces, which can be accurately rendered with a single sample per pixel. Based on this insight, we propose a neural radiance formulation that smoothly transitions between volumetric and surface-based rendering, greatly accelerating rendering and even improving visual fidelity. Our method constructs an explicit mesh envelope that spatially bounds a neural volumetric representation. In solid regions, the envelope nearly converges to a surface and can often be rendered with a single sample. To obtain this envelope, we generalize the NeuS formulation with a learned, spatially varying kernel size that encodes the spread of the density, fitting a wide kernel to volume-like regions and a tight kernel to surface-like regions. We then extract an explicit mesh of a narrow band around the surface, with width determined by the kernel size, and fine-tune the radiance field within this band. At inference time, we cast rays against the mesh and evaluate the radiance field only within the enclosed region, greatly reducing the number of samples required. Experiments show that our approach enables efficient rendering at very high fidelity. We also demonstrate that the extracted envelope enables downstream applications such as animation and simulation.
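The rendering idea above can be illustrated on a single ray. The sketch below is a minimal toy under stated assumptions, not the authors' implementation. It uses the NeuS logistic CDF Φ_s(f) = 1/(1 + e^(-s·f)) and the NeuS discrete opacity, where s is the sharpness (the inverse of the kernel size, so a tight kernel means a large s). Unlike the method, which learns a spatially varying s(x), it holds s constant along the ray. It also assumes a hypothetical band criterion that keeps points where δ ≤ Φ_s(f) ≤ 1 − δ, i.e. |f| ≤ ln((1 − δ)/δ)/s. In the method, the entry and exit distances come from casting the ray against the extracted mesh; here they are computed analytically for a plane. The names `logistic_cdf`, `band_half_width`, and `render_shell_interval` are illustrative.

```python
import math


def logistic_cdf(f: float, s: float) -> float:
    """NeuS logistic CDF Phi_s(f) = 1 / (1 + exp(-s * f)), computed stably."""
    z = s * f
    if z >= 0.0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def band_half_width(s: float, delta: float = 1e-3) -> float:
    """Hypothetical shell criterion: keep |f| <= ln((1 - delta) / delta) / s.

    This is the region where delta <= Phi_s(f) <= 1 - delta; it narrows as 1/s.
    """
    return math.log((1.0 - delta) / delta) / s


def render_shell_interval(sdf, radiance, s, t_enter, t_exit, n_segments=1):
    """Alpha-composite only inside [t_enter, t_exit], the shell interval.

    sdf(t) and radiance(t) are callables along the ray; s is the NeuS
    sharpness, held constant here instead of a learned s(x).
    Returns (color, opacity) for a single-channel radiance.
    """
    ts = [t_enter + (t_exit - t_enter) * i / n_segments
          for i in range(n_segments + 1)]
    transmittance, color = 1.0, 0.0
    for t0, t1 in zip(ts[:-1], ts[1:]):
        p0 = logistic_cdf(sdf(t0), s)
        p1 = logistic_cdf(sdf(t1), s)
        # NeuS discrete opacity for the segment [t0, t1]
        alpha = max((p0 - p1) / max(p0, 1e-12), 0.0)
        color += transmittance * alpha * radiance(0.5 * (t0 + t1))
        transmittance *= 1.0 - alpha
    return color, 1.0 - transmittance


# Toy usage: a ray hitting the plane f = 0 head-on at t = 2.
t_surf, s = 2.0, 1000.0   # large s: tight, surface-like kernel
w = band_half_width(s)     # about 0.0069


def sdf(t: float) -> float:
    return t_surf - t      # positive in front of the surface, negative behind


def radiance(t: float) -> float:
    return 0.8             # constant toy radiance


color1, opacity1 = render_shell_interval(sdf, radiance, s, t_surf - w, t_surf + w, 1)
color64, opacity64 = render_shell_interval(sdf, radiance, s, t_surf - w, t_surf + w, 64)
print(opacity1, opacity64)  # both about 0.999
```

In this toy setting the NeuS opacities telescope, so the accumulated opacity over the band is 1 − Φ_s(−w)/Φ_s(w) regardless of the segment count: for a tight kernel, one segment already matches dense sampling. With a small s (a wide, volume-like kernel) the band widens in proportion to 1/s, and more samples would be needed to resolve radiance that varies within it.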

Overview of the proposed approach.

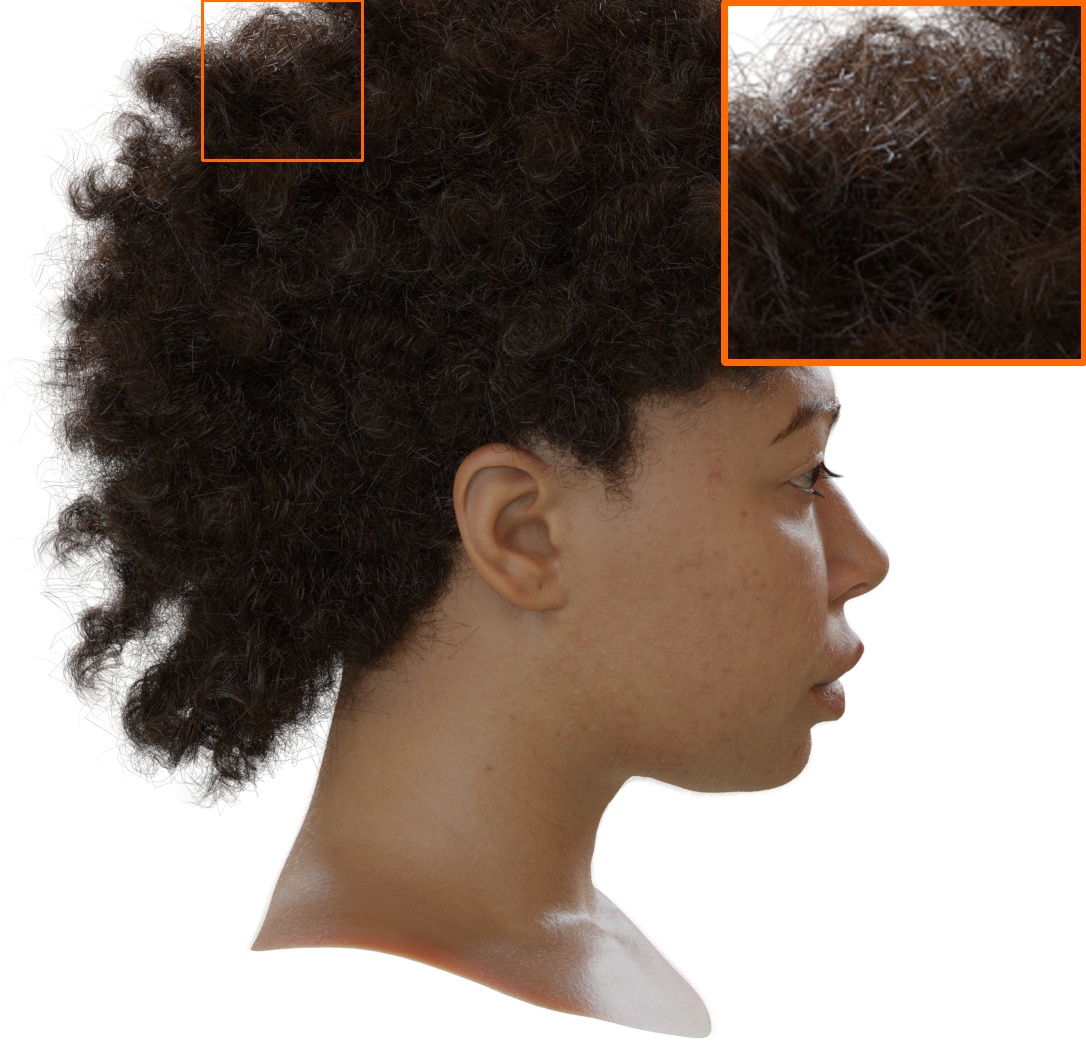

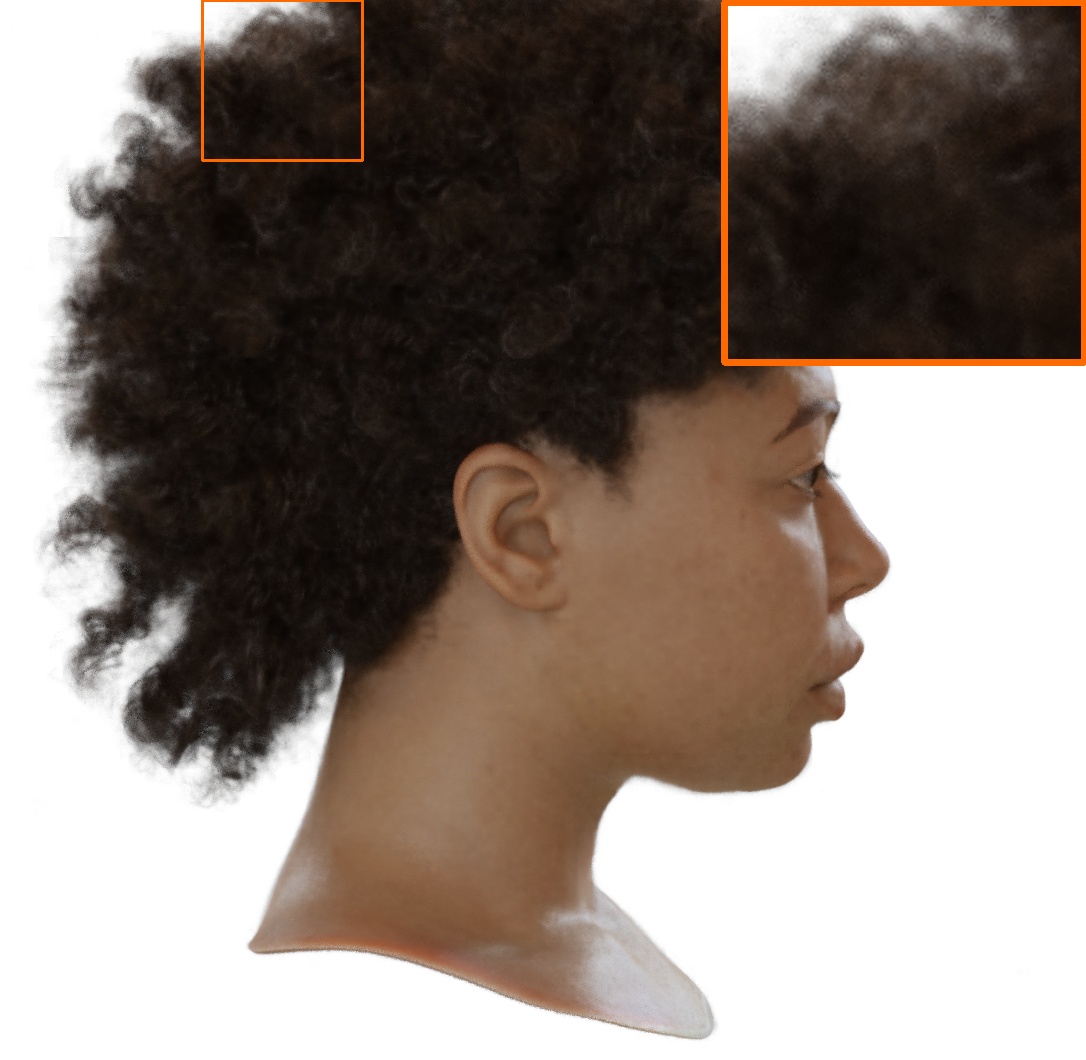

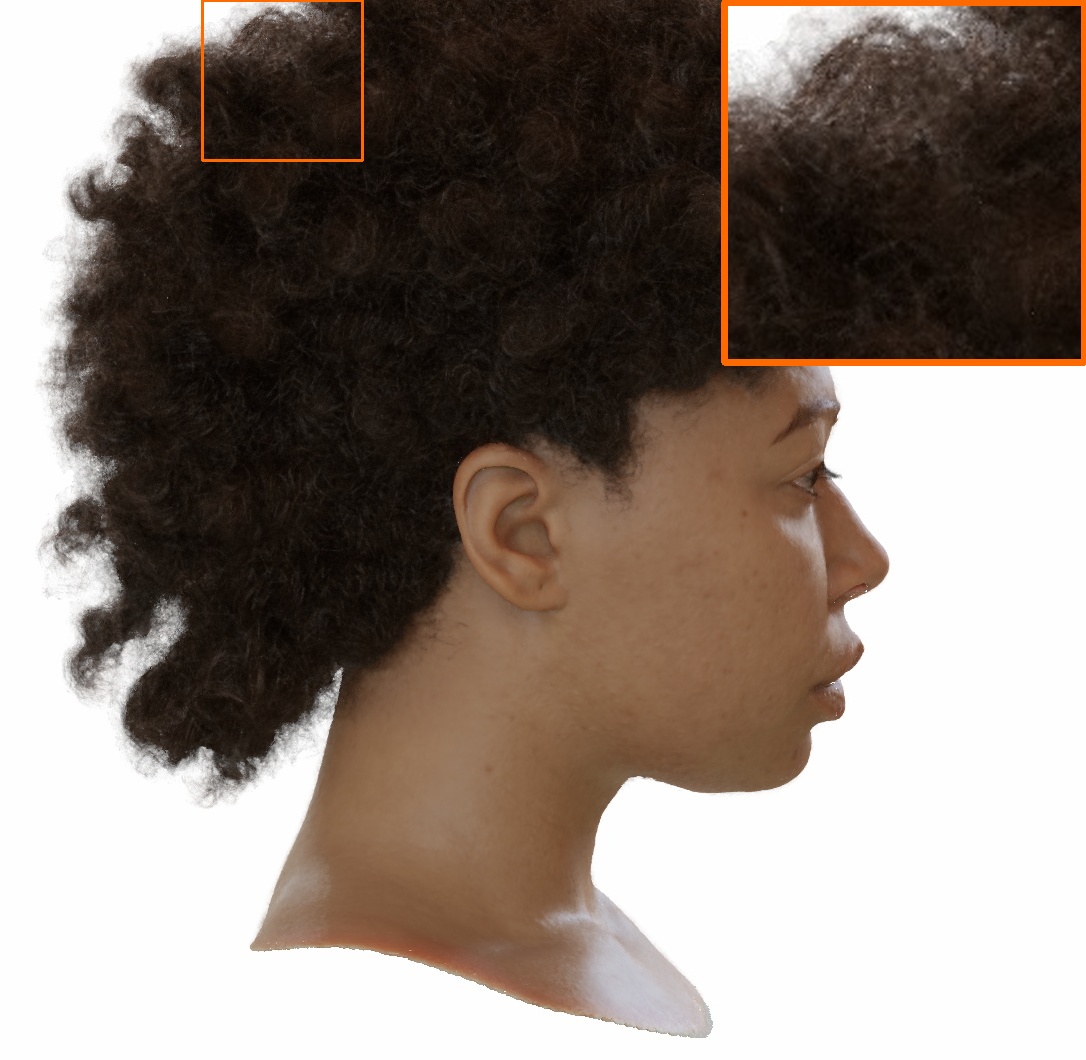

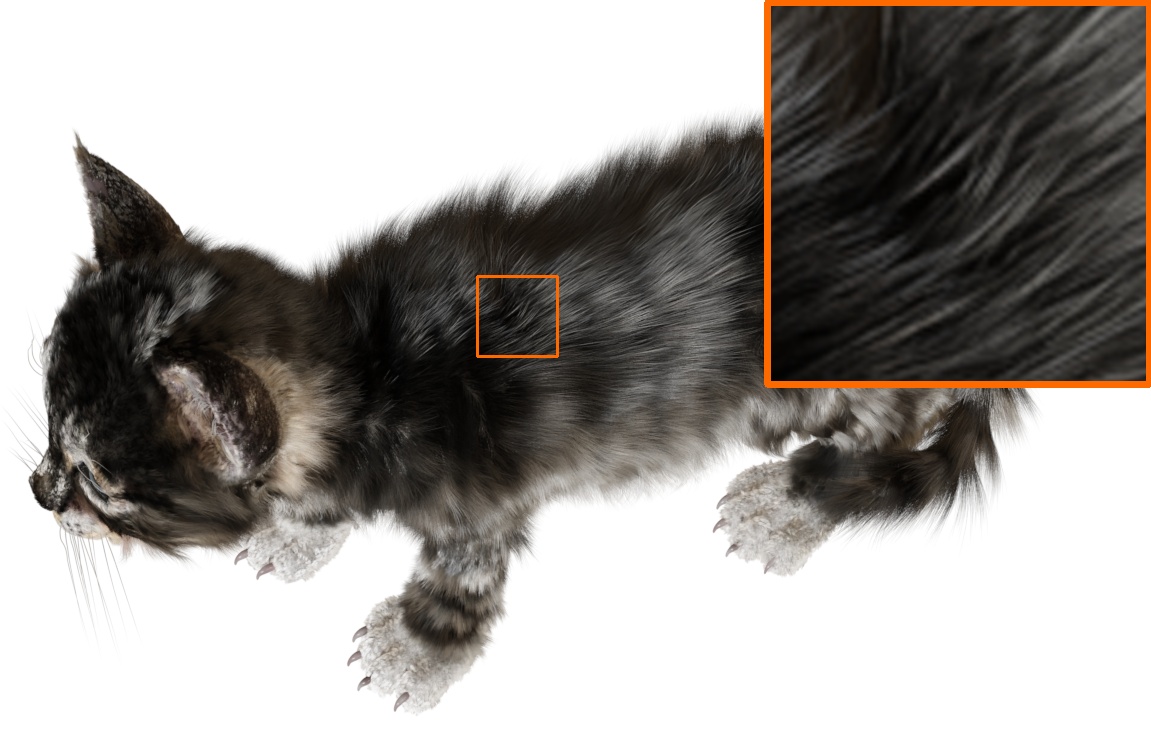

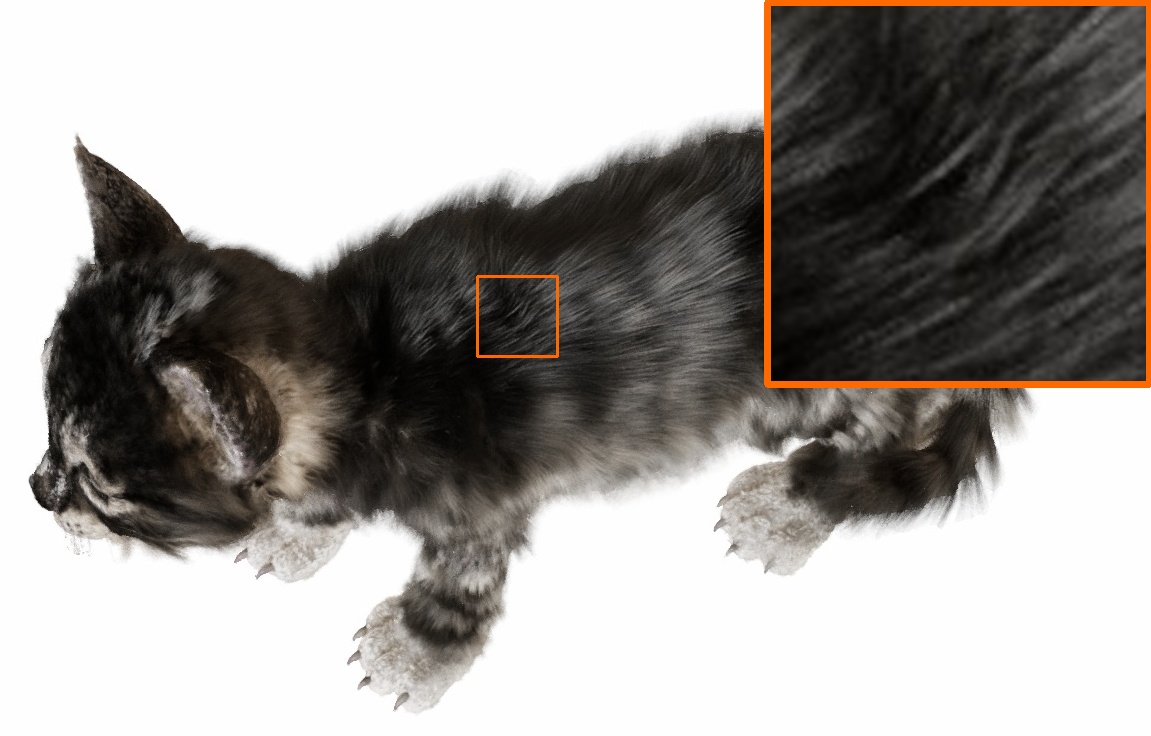

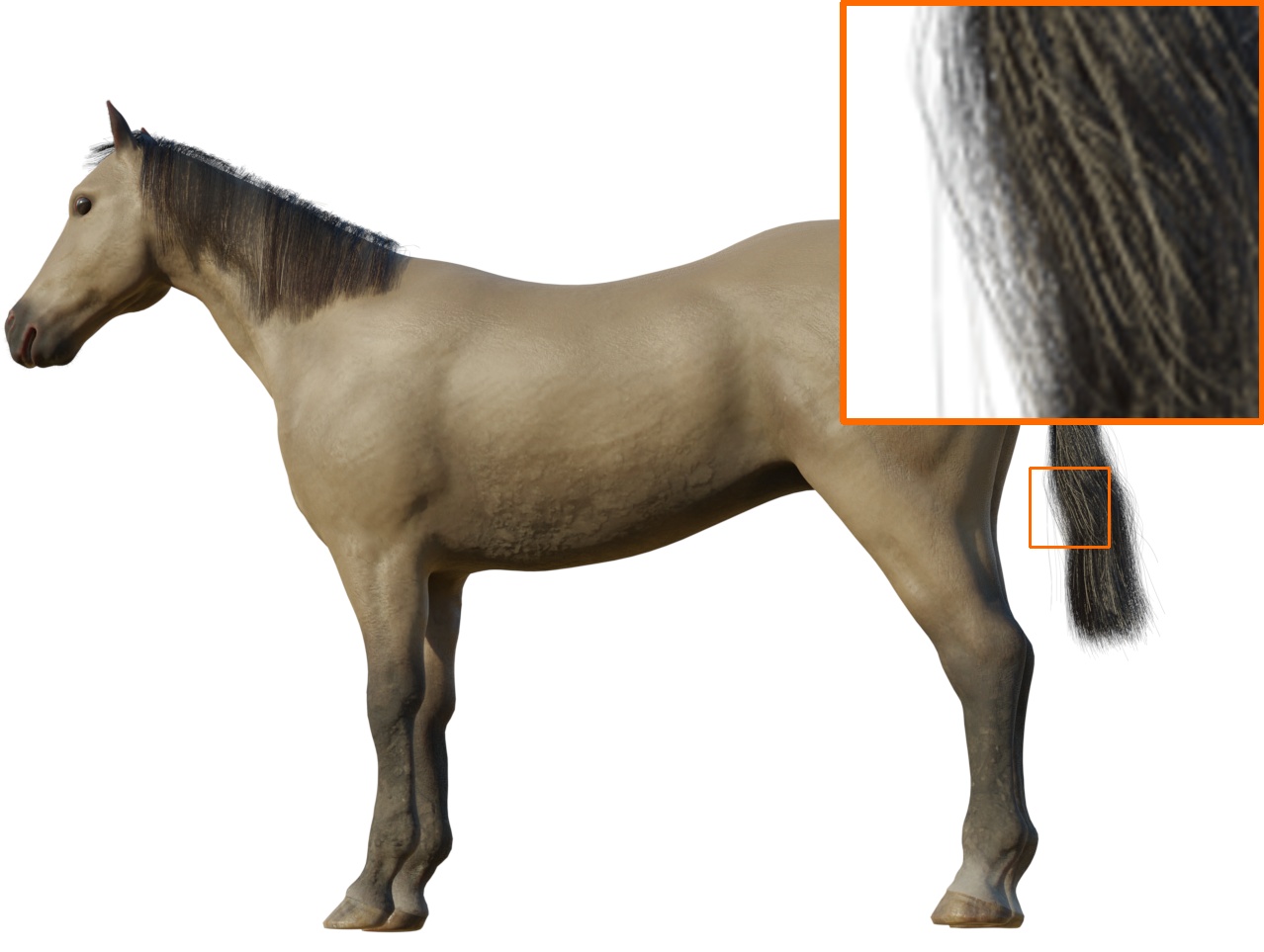

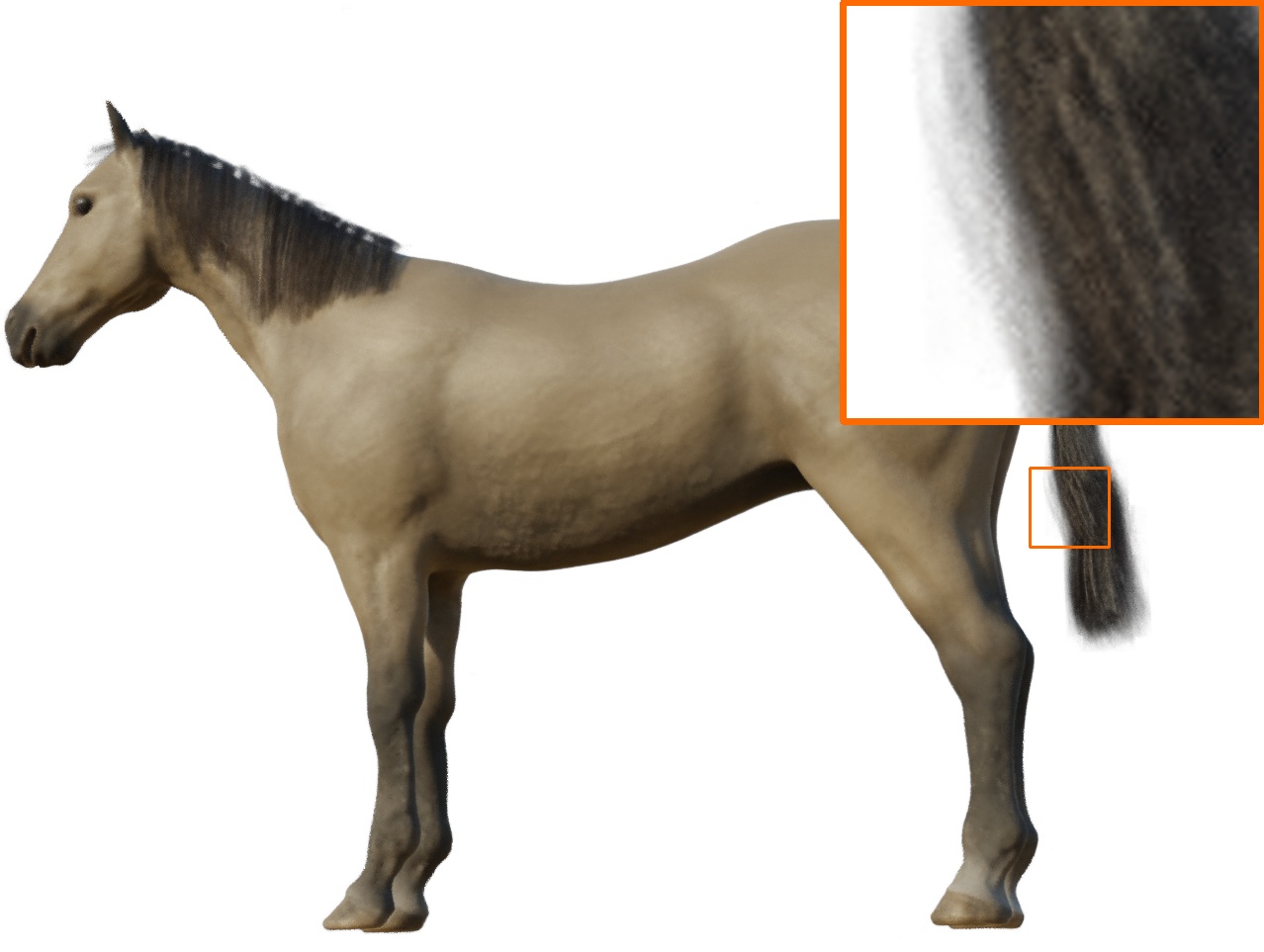

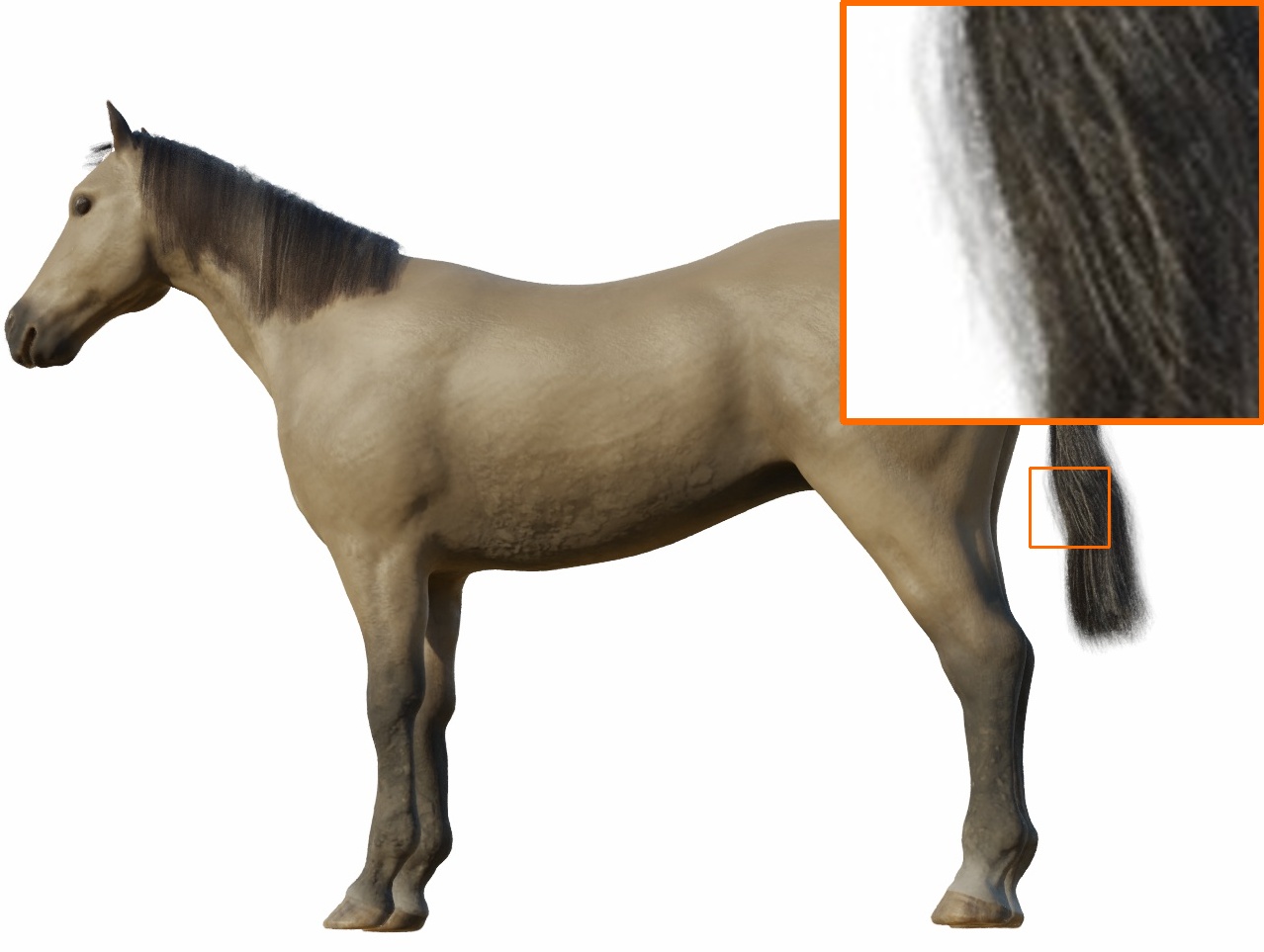

We introduce a new synthetic dataset, which we name Shelly. It covers a wider variety of appearance, including fuzzy surfaces such as hair, fur, and foliage. Here we show a gallery of results on the test views of our Shelly dataset. Our method significantly outperforms prior methods.

Real-time rendering in an interactive GUI.

Visualization of the number of queries per ray (brighter colors indicate more samples).

On the challenging unbounded 360° scenes, our method runs at real-time rates and achieves image quality comparable to other interactive methods.

We show two examples of applying physical simulation and animation to the reconstructed objects. The basic idea is to construct a coarse tetrahedral cage around the neural volume, deform the cage, and use it to render the deformed appearance of the underlying volume. Even in the presence of deformations, rendering still benefits from our adaptive shell representation and samples the underlying neural volume efficiently.
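One common way to realize this cage-based deformation (an assumption; the page does not spell out the mapping) is to map each sample point in the deformed scene back to the rest pose using its barycentric coordinates in the deformed tetrahedron that contains it, then query the neural volume at the rest-pose position. The sketch below shows only that mapping. `barycentric`, `deformed_to_rest`, and the linear scan over tetrahedra are illustrative; a practical renderer would need a spatial acceleration structure to find the containing tetrahedron.

```python
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float, float]
Tet = Tuple[Point, Point, Point, Point]


def _sub(p: Point, q: Point) -> Point:
    return (p[0] - q[0], p[1] - q[1], p[2] - q[2])


def _triple(a: Point, b: Point, c: Point) -> float:
    """Scalar triple product a . (b x c), i.e. det of the matrix [a b c]."""
    return (a[0] * (b[1] * c[2] - b[2] * c[1])
            + a[1] * (b[2] * c[0] - b[0] * c[2])
            + a[2] * (b[0] * c[1] - b[1] * c[0]))


def barycentric(p: Point, tet: Tet) -> Tuple[float, float, float, float]:
    """Barycentric coordinates of p with respect to tet, via Cramer's rule."""
    v0, v1, v2, v3 = tet
    e1, e2, e3, d = _sub(v1, v0), _sub(v2, v0), _sub(v3, v0), _sub(p, v0)
    vol = _triple(e1, e2, e3)  # 6x signed volume; nonzero for a valid tet
    b1 = _triple(d, e2, e3) / vol
    b2 = _triple(e1, d, e3) / vol
    b3 = _triple(e1, e2, d) / vol
    return (1.0 - b1 - b2 - b3, b1, b2, b3)


def deformed_to_rest(p: Point, rest_tets: Sequence[Tet],
                     deformed_tets: Sequence[Tet],
                     eps: float = 1e-9) -> Optional[Point]:
    """Map a point inside the deformed cage back to the rest-pose cage.

    Returns None if p lies outside every deformed tetrahedron (empty space).
    """
    for rest_tet, deformed_tet in zip(rest_tets, deformed_tets):
        w = barycentric(p, deformed_tet)
        if min(w) >= -eps:  # inside (or on the boundary of) this tetrahedron
            # apply the same barycentric weights to the rest-pose vertices
            return tuple(sum(w[k] * rest_tet[k][i] for k in range(4))
                         for i in range(3))
    return None


rest = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
deformed = tuple(tuple(2.0 * x for x in v) for v in rest)  # cage scaled by 2
print(deformed_to_rest((0.5, 0.5, 0.5), [rest], [deformed]))  # (0.25, 0.25, 0.25)
print(deformed_to_rest((3.0, 3.0, 3.0), [rest], [deformed]))  # None
```

In this example the point (0.5, 0.5, 0.5) in the doubled cage maps to (0.25, 0.25, 0.25) in the rest pose, which is where the undeformed neural volume would then be queried.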

@article{adaptiveshells2023,
  author = {Zian Wang and Tianchang Shen and Merlin Nimier-David and Nicholas Sharp and Jun Gao and Alexander Keller and Sanja Fidler and Thomas M\"uller and Zan Gojcic},
  title = {Adaptive Shells for Efficient Neural Radiance Field Rendering},
  journal = {ACM Trans. Graph.},
  issue_date = {December 2023},
  volume = {42},
  number = {6},
  year = {2023},
  articleno = {259},
  numpages = {15},
  url = {https://doi.org/10.1145/3618390},
  doi = {10.1145/3618390},
  publisher = {ACM},
  address = {New York, NY, USA},
  keywords = {neural radiance fields, fast rendering, level set methods, novel view synthesis}
}

Adaptive Shells for Efficient Neural Radiance Field Rendering

Zian Wang*, Tianchang Shen*, Merlin Nimier-David*, Nicholas Sharp, Jun Gao, Alexander Keller, Sanja Fidler, Thomas Müller, Zan Gojcic

The authors are grateful to Alex Evans for the insightful brainstorming sessions and his explorations in the early stages of this project. We also appreciate the feedback received from Jacob Munkberg, Jon Hasselgren, Chen-Hsuan Lin, and Wenzheng Chen during the project. We would like to thank Lior Yariv for providing the results of BakedSDF. Finally, we are grateful to the original artists of assets appearing in this work: TEXTURING.XYZ, JHON MAYCON, Pierre-Louis Baril, ABDOUBOUAM, CKAT609, and the BlenderKit team.
