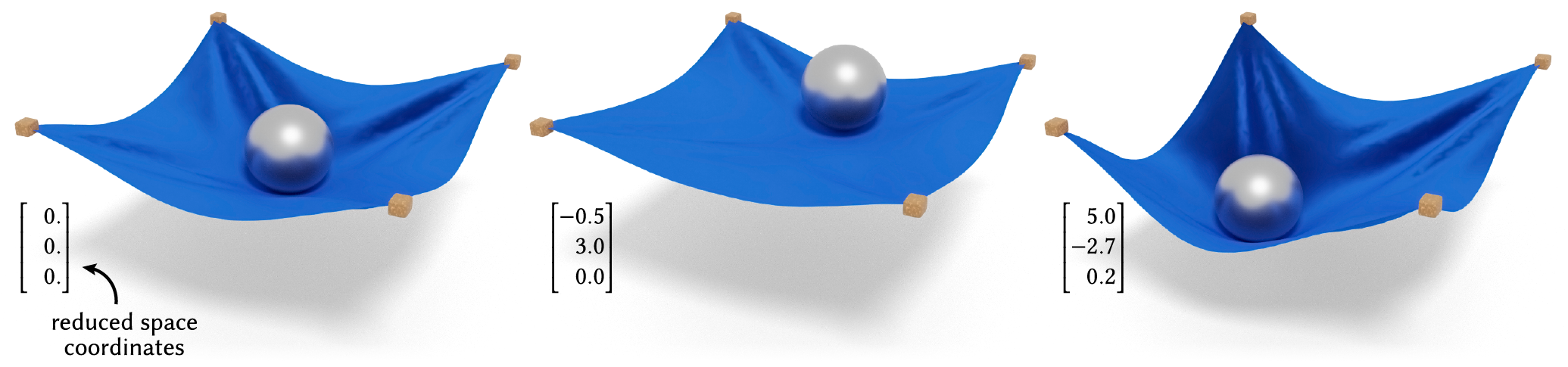

Physical systems ranging from elastic bodies to kinematic linkages are defined on high-dimensional configuration spaces, yet their typical low-energy configurations are concentrated on much lower-dimensional subspaces. This work addresses the challenge of identifying such subspaces automatically: given as input an energy function for a high-dimensional system, we produce a low-dimensional map whose image parameterizes a diverse yet low-energy submanifold of configurations. The only additional input needed is a single seed configuration for the system to initialize our procedure; no dataset of trajectories is required. We represent subspaces as neural networks that map a low-dimensional latent vector to the full configuration space, and propose a training scheme to fit network parameters to any system of interest. This formulation is effective across a very general range of physical systems; our experiments demonstrate not only nonlinear and very low-dimensional elastic body and cloth subspaces, but also more general systems like colliding rigid bodies and linkages. We briefly explore applications built on this formulation, including manipulation, latent interpolation, and sampling.
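To make the formulation above concrete, here is a minimal sketch of the kind of objective such a training scheme might evaluate. It is not the authors' implementation. The toy spring-chain energy, the one-hidden-layer architecture, the log-ratio spread penalty, and every name and parameter (`energy`, `decode`, `objective`, `sigma`, `lam`) are assumptions introduced for illustration; the text specifies only that the inputs are an energy function and a single seed configuration, and that the learned map should be low-energy yet diverse.

```python
import math
import random

N_POINTS = 3     # chain of 3 free 2D points: a 6-dimensional configuration
LATENT_DIM = 1   # dimension of the latent vector z
HIDDEN = 8       # hidden width (hypothetical choice)

def energy(q):
    """Toy potential: unit-rest-length springs along a chain pinned at the origin."""
    pts = [(0.0, 0.0)] + [(q[2 * i], q[2 * i + 1]) for i in range(N_POINTS)]
    total = 0.0
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        stretch = math.hypot(x1 - x0, y1 - y0) - 1.0
        total += 0.5 * stretch * stretch
    return total

def init_params(rng):
    """Random weights for a one-hidden-layer network (assumed architecture)."""
    w1 = [[rng.gauss(0, 1) for _ in range(LATENT_DIM)] for _ in range(HIDDEN)]
    b1 = [0.0] * HIDDEN
    w2 = [[rng.gauss(0, 0.1) for _ in range(HIDDEN)] for _ in range(2 * N_POINTS)]
    return w1, b1, w2

def decode(params, seed, z):
    """Map a latent vector z to a full configuration, as an offset from the seed."""
    w1, b1, w2 = params
    h = [math.tanh(sum(w * zi for w, zi in zip(row, z)) + b)
         for row, b in zip(w1, b1)]
    return [s + sum(w * hi for w, hi in zip(row, h)) for s, row in zip(seed, w2)]

def objective(params, seed, latents, sigma=1.0, lam=1.0):
    """Mean energy over sampled latents plus an assumed spread penalty.

    The penalty asks configuration-space distances to track sigma times
    latent-space distances, which discourages collapse onto the seed.
    """
    configs = [decode(params, seed, z) for z in latents]
    mean_energy = sum(energy(q) for q in configs) / len(configs)
    spread, pairs = 0.0, 0
    for i in range(len(latents)):
        for j in range(i + 1, len(latents)):
            dq = math.dist(configs[i], configs[j])
            dz = math.dist(latents[i], latents[j])
            spread += math.log((dq + 1e-12) / (sigma * dz + 1e-12)) ** 2
            pairs += 1
    return mean_energy + lam * spread / pairs

rng = random.Random(0)
seed = [1.0, 0.0, 2.0, 0.0, 3.0, 0.0]  # straight chain: zero-energy seed
params = init_params(rng)
latents = [[rng.uniform(-1.0, 1.0)] for _ in range(16)]
loss = objective(params, seed, latents)
```

In this sketch the energy term alone would be minimized by mapping every latent vector to the seed, so some spread term is needed to keep the image of the map diverse. In practice one would minimize an objective like this over the network parameters with an automatic-differentiation framework rather than evaluate it once.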

@inproceedings{sharp2023data,
  title={Data-Free Learning of Reduced-Order Kinematics},
  author={Sharp, Nicholas and Romero, Cristian and Jacobson, Alec and Vouga, Etienne and Kry, Paul G and Levin, David IW and Solomon, Justin},
  booktitle={ACM SIGGRAPH 2023 Conference Proceedings},
  year={2023}
}

Data-Free Learning of Reduced-Order Kinematics

Nicholas Sharp, Cristian Romero, Alec Jacobson, Etienne Vouga, Paul G. Kry, David I.W. Levin, Justin Solomon

The Bellairs Workshop on Computer Animation was instrumental in the conception of the research presented in this paper. The authors acknowledge the support of the Natural Sciences and Engineering Research Council of Canada (NSERC), the Fields Institute for Mathematics, and the Vector Institute for AI. This research is funded in part by the National Science Foundation (HCC2212048), NSERC Discovery (RGPIN-2022-04680), the Ontario Early Research Award program, the Canada Research Chairs Program, a Sloan Research Fellowship, the DSI Catalyst Grant program, and gifts from Adobe Systems. The MIT Geometric Data Processing group acknowledges generous support from Army Research Office grants W911NF2010168 and W911NF2110293, Air Force Office of Scientific Research award FA9550-19-1-031, National Science Foundation grants IIS-1838071 and CHS-1955697, the CSAIL Systems that Learn program, the MIT-IBM Watson AI Laboratory, the Toyota-CSAIL Joint Research Center, a gift from Adobe Systems, and a Google Research Scholar award.
