We present Neural Kernel Fields: a novel method for reconstructing implicit 3D shapes based on a learned kernel ridge regression. Our technique achieves state-of-the-art results when reconstructing 3D objects and large scenes from sparse oriented points, and can reconstruct shape categories outside the training set with almost no drop in accuracy. The core insight of our approach is that kernel methods are extremely effective for reconstructing shapes when the chosen kernel has an appropriate inductive bias. We thus factor the problem of shape reconstruction into two parts: (1) a backbone neural network which learns kernel parameters from data, and (2) a kernel ridge regression that fits the input points on-the-fly by solving a simple positive definite linear system using the learned kernel. As a result of this factorization, our reconstruction gains the benefits of data-driven methods under sparse point density while maintaining interpolatory behavior, which converges to the ground truth shape as input sampling density increases. Our experiments demonstrate a strong generalization capability to objects outside the train-set category and scanned scenes.
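For reference, a standard kernel ridge regression can be written as follows. The notation is assumed for illustration and is not taken from the text above: \(x_i\) are the input constraint locations, \(y_i\) their target values, \(\lambda > 0\) a regularization weight, and \(k_\theta\) a kernel whose parameters \(\theta\) are predicted by the backbone network.

\[
\boldsymbol{\alpha} = (\mathbf{K} + \lambda \mathbf{I})^{-1} \mathbf{y}, \qquad \mathbf{K}_{ij} = k_\theta(x_i, x_j), \qquad f(x) = \sum_{i} \alpha_i \, k_\theta(x, x_i)
\]

Because \(\mathbf{K}\) is positive semidefinite for any valid kernel, \(\mathbf{K} + \lambda \mathbf{I}\) is positive definite whenever \(\lambda > 0\), so the system can be solved with, for example, a Cholesky factorization.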

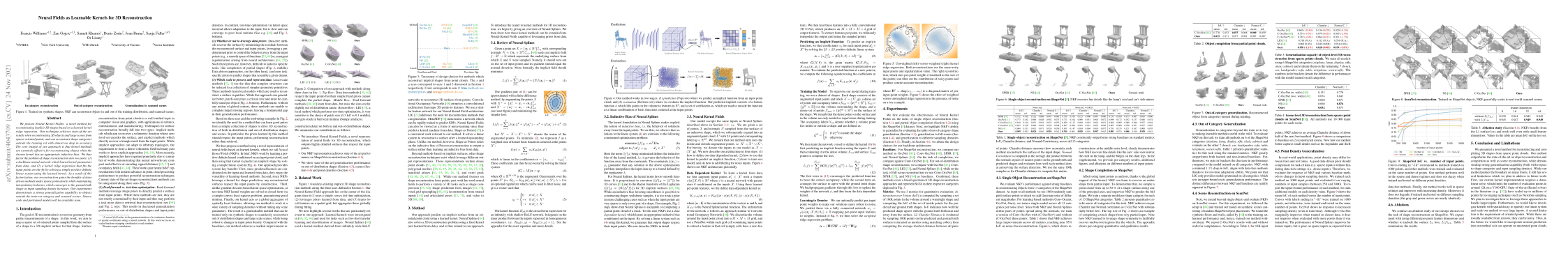

Our method works in two stages: (1) prediction (top row), where we predict an implicit function from an input point cloud, and (2) evaluation (bottom row), where we evaluate the implicit function. Our predicted implicit function consists of a feature function \(\phi\), which lifts points in the volume to features in \(\mathbb{R}^d\), and a set of coefficients \(\boldsymbol{\alpha}\), which are used to encode the function as a linear combination of basis functions centered at the input points.
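The two stages described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: `phi` is a hypothetical fixed stand-in for the learned feature function, the Gaussian kernel on features is an assumed choice, and the construction of target values from oriented points (zero on the surface, `±eps` at points offset along the normal) is a common convention assumed here. The names `fit`, `evaluate`, `lam`, and `eps` are likewise illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)

def phi(x):
    """Hypothetical stand-in for the learned feature function (a network in the source)."""
    return np.concatenate([x, np.sin(2.0 * x)], axis=-1)

def kernel(a, b, sigma=0.5):
    """Gaussian kernel on features; an assumed kernel, chosen only for illustration."""
    fa, fb = phi(a), phi(b)
    sq_dist = ((fa[:, None, :] - fb[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-sq_dist / (2.0 * sigma**2))

def fit(centers, values, lam=1e-4):
    """Prediction stage: solve (K + lam * I) alpha = values with a Cholesky factorization."""
    gram = kernel(centers, centers) + lam * np.eye(len(centers))
    chol = np.linalg.cholesky(gram)  # valid because gram is symmetric positive definite
    return np.linalg.solve(chol.T, np.linalg.solve(chol, values))

def evaluate(queries, centers, alpha):
    """Evaluation stage: f(q) = sum_i alpha_i * k(q, x_i)."""
    return kernel(queries, centers) @ alpha

# Toy input: sparse oriented points on the unit sphere (normal equals position).
n, eps = 64, 0.05
points = rng.normal(size=(n, 3))
points /= np.linalg.norm(points, axis=1, keepdims=True)
normals = points.copy()

# Assumed constraints: 0 on the surface, +eps outside, -eps inside.
centers = np.concatenate([points, points + eps * normals, points - eps * normals])
values = np.concatenate([np.zeros(n), np.full(n, eps), np.full(n, -eps)])

alpha = fit(centers, values)                 # one coefficient per basis function
pred = evaluate(centers, centers, alpha)     # implicit function at the constraint points
```

In this sketch the only per-shape computation is the linear solve in `fit`; everything shape-independent lives in `phi` and `kernel`, which is where learned parameters would enter.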

In-category single shape reconstruction.

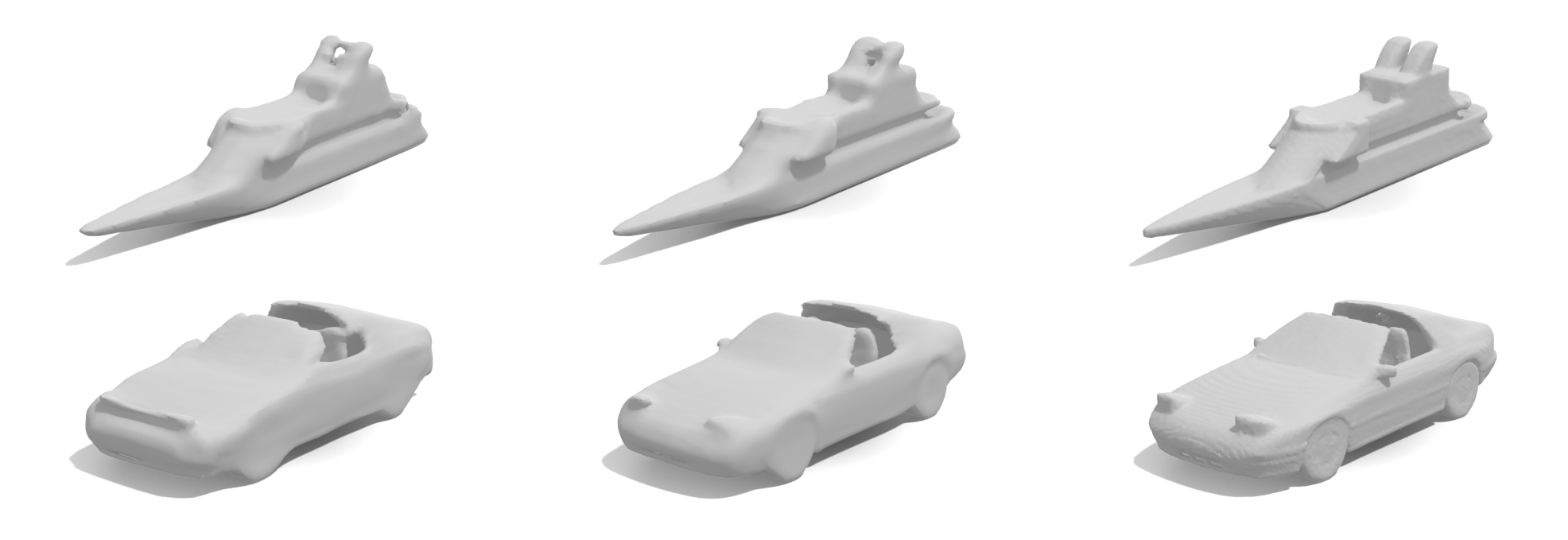

Qualitative side-by-side comparison of our method (gray) to the baselines (violet) on scene-level reconstruction. Note that our method is trained only on synthetic shapes from the ShapeNet dataset.

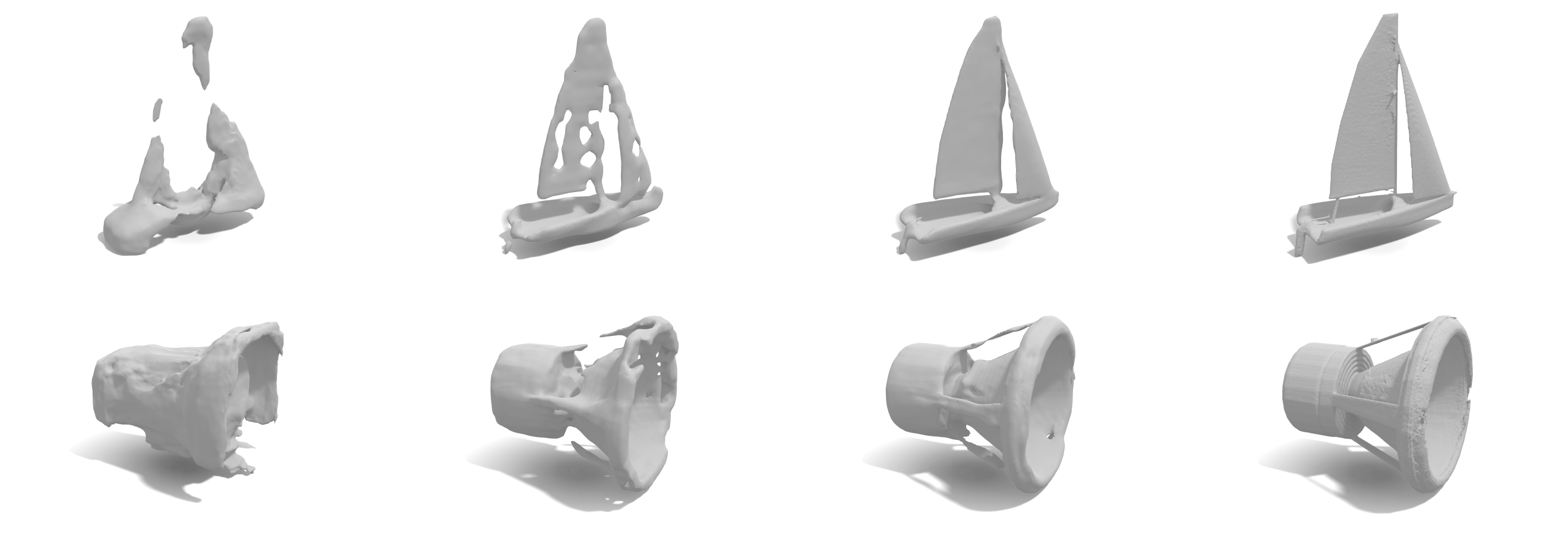

Out-of-category generalization. Models trained on the airplane, lamp, display, rifle, chair, and cabinet categories are used to reconstruct shapes from the other seven ShapeNet categories.

Our model trained only on chairs (left) generalizes to the other 12 ShapeNet categories, performing only slightly worse than the model trained on all categories (middle).

@misc{williams2021nkf,
  title={Neural Fields as Learnable Kernels for 3D Reconstruction},
  author={Francis Williams and Zan Gojcic and Sameh Khamis and Denis Zorin and Joan Bruna and Sanja Fidler and Or Litany},
  year={2021},
  eprint={2111.13674},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}