Human motion synthesis is an important problem with applications in graphics, gaming, and simulation environments for robotics. Existing methods require accurate motion capture data for training, which is costly to obtain. Instead, we propose a framework for training generative models of physically plausible human motion directly from monocular RGB videos, which are far more widely available. At the core of our method is a novel optimization formulation that corrects imperfect image-based pose estimates by enforcing physics constraints and reasons about contacts in a differentiable way. This optimization yields corrected 3D poses and motions, as well as their corresponding contact forces. Results show that our physically corrected motions significantly outperform prior work on pose estimation. We then use these motions to train a generative model that synthesizes future motion. Samples from the generative model can optionally be further refined with the same physics-correction optimization. We demonstrate, both qualitatively and quantitatively, that our method significantly improves motion estimation, synthesis quality, and physical plausibility on the large-scale Human3.6M dataset~\cite{h36m_pami} compared to prior kinematic and physics-based methods. By enabling motion synthesis to be learned from video, our method paves the way for large-scale, realistic, and diverse motion synthesis.

Kevin Xie, Tingwu Wang, Umar Iqbal, Yunrong Guo, Sanja Fidler, Florian Shkurti. Physics-based Human Motion Estimation and Synthesis from Videos. ICCV 2021.

More Recovered Motions Combined

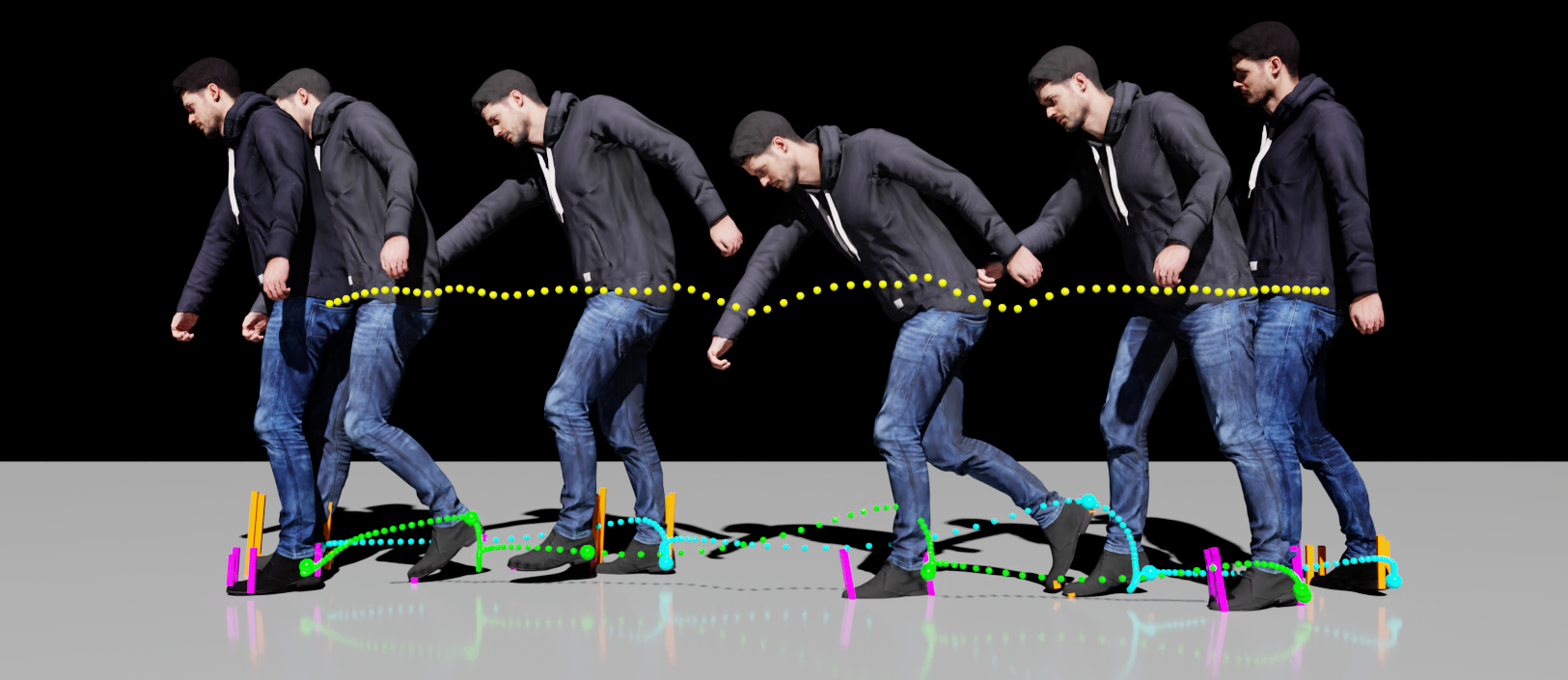

We recover high-quality motions from video input alone. Above, we show a composite of motions from the Human3.6M validation set recovered using our method.

More Motions from In-the-Wild Videos

For in-the-wild videos, we do not assume access to ground-truth camera parameters. Instead, we optimize the parameters of the ground plane jointly with the motion. These parameters are initialized so that the initial character motion is, on average, upright with respect to the ground plane, with gravity assumed to be perpendicular to the plane.
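
One way such an initialization could look is sketched below. It is a hypothetical illustration under stated assumptions, not the authors' code: it takes the plane normal to be the average torso "up" direction (pelvis to neck) over all frames, places the plane at the lowest foot point along that normal, and points gravity opposite the normal. The function name `init_ground_plane`, the joint indices, and the choice of pelvis/neck/feet as reference joints are all assumptions.

```python
import numpy as np


def init_ground_plane(joints, pelvis_idx, neck_idx, foot_idxs):
    """Hypothetical ground-plane initializer (a sketch, not the authors' code).

    joints:    (T, J, 3) array of per-frame 3D joint estimates.
    pelvis_idx, neck_idx: joint indices defining the torso "up" axis (assumed).
    foot_idxs: list of foot joint indices used to place the plane (assumed).

    Returns (normal, offset, gravity) for the plane {x : normal . x = offset}.
    """
    joints = np.asarray(joints, dtype=float)
    up = joints[:, neck_idx] - joints[:, pelvis_idx]        # (T, 3) torso axes
    up = up / np.linalg.norm(up, axis=1, keepdims=True)     # unit vector per frame
    normal = up.mean(axis=0)
    normal = normal / np.linalg.norm(normal)                # average "up" direction
    # Place the plane at the lowest foot point, measured along the normal.
    foot_heights = joints[:, foot_idxs] @ normal            # (T, len(foot_idxs))
    offset = float(foot_heights.min())
    gravity = -9.81 * normal                                # perpendicular to the plane
    return normal, offset, gravity


# Toy two-frame skeleton standing along +y: pelvis, neck, left foot, right foot.
frames = np.array([
    [[0.0, 1.0, 3.0], [0.0, 1.5, 3.0], [0.1, 0.0, 3.0], [-0.1, 0.0, 3.0]],
    [[0.2, 1.0, 3.0], [0.2, 1.5, 3.0], [0.3, 0.05, 3.0], [0.1, 0.0, 3.0]],
])
normal, offset, gravity = init_ground_plane(frames, 0, 1, [2, 3])
```

Because the normal is averaged over the whole clip rather than taken from a single frame, a few frames with leaning or noisy torso estimates only tilt the initial plane slightly; the joint optimization is then left to refine it.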

More Motion Comparisons

Failure Cases

We show frames selected from among those with the highest error. Failure cases are generally caused by inaccuracies in the underlying pose estimator due to occlusion or depth ambiguity. Even in these cases, the recovered motion remains physically correct.
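
Selecting the highest-error frames amounts to ranking frames by a per-frame error and keeping the top few. The sketch below assumes MPJPE (mean per-joint position error) as that error, which the page does not state; the function name `highest_error_frames` is hypothetical.

```python
import numpy as np


def highest_error_frames(pred, gt, k):
    """Return indices of the k frames with the largest per-frame error.

    pred, gt: (T, J, 3) predicted and ground-truth joint positions.
    The per-frame error is MPJPE (mean Euclidean joint error), an assumed
    metric chosen for illustration.
    """
    per_joint = np.linalg.norm(pred - gt, axis=-1)   # (T, J) joint errors
    per_frame = per_joint.mean(axis=1)               # (T,) MPJPE per frame
    order = np.argsort(per_frame)[::-1]              # frames by descending error
    return order[:k], per_frame


# Three frames with 0, 2 and 1 units of error on every joint.
pred = np.zeros((3, 2, 3))
gt = np.zeros((3, 2, 3))
gt[1] += [2.0, 0.0, 0.0]
gt[2] += [1.0, 0.0, 0.0]
worst, errors = highest_error_frames(pred, gt, 2)
```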
