How to Stop Epidemics: Controlling Graph Dynamics with Reinforcement Learning and Graph Neural Networks

Abstract

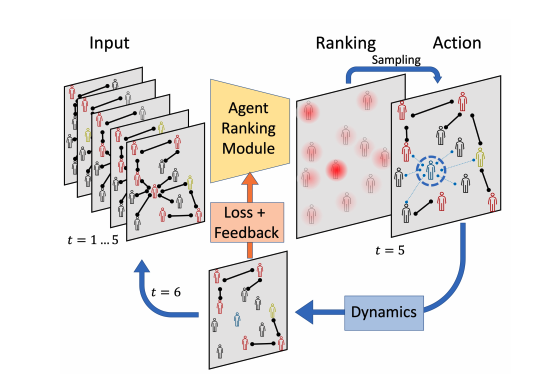

We consider the problem of monitoring and controlling a partially observed dynamic process that spreads over a graph. This problem arises naturally in contexts such as scheduling virus tests or quarantining individuals to curb a spreading epidemic; detecting fake news spreading on online networks by manually inspecting posted articles; and targeted marketing, where the objective is to encourage the spread of a product. Curbing the spread and constraining the fraction of the population that is infected become challenging when only a fraction of the population can be tested or quarantined. To address this challenge, we formulate this setup as a sequential decision problem over a graph. In the face of an exponential state space, a combinatorial action space, and partial observability, we design RLGN, a novel tractable Reinforcement Learning (RL) scheme that prioritizes which nodes should be tested, using Graph Neural Networks (GNNs) to rank the graph nodes. We evaluate this approach on three types of social networks: community-structured networks, preferential-attachment networks, and networks based on statistics from real cellular tracking. RLGN consistently outperforms all baselines in our experiments. Our results suggest that prioritizing tests using RL on temporal graphs can increase the number of healthy people by 25% and contain the epidemic 30% more often than supervised approaches and 2.5× more often than non-learned baselines using the same resources.