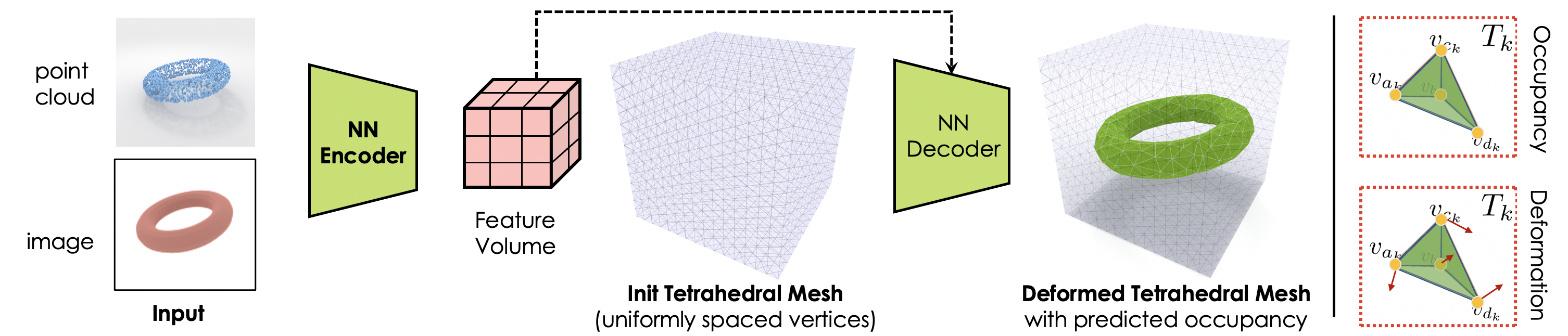

Designing 3D shape representations that accommodate learning-based 3D reconstruction is an open problem in machine learning and computer graphics. Previous work on neural 3D reconstruction demonstrated the benefits, but also the limitations, of point cloud, voxel, surface mesh, and implicit function representations. We introduce Deformable Tetrahedral Meshes (DefTet), a parameterization that uses volumetric tetrahedral meshes for the reconstruction problem. Unlike existing volumetric approaches, DefTet optimizes for both vertex placement and occupancy, and is differentiable with respect to standard 3D reconstruction loss functions. It is thus simultaneously high-precision, volumetric, and amenable to learning-based neural architectures. We show that it can represent arbitrary, complex topology, is both memory and computationally efficient, and can produce high-fidelity reconstructions with a significantly smaller grid size than alternative volumetric approaches. The predicted surfaces are also inherently defined as tetrahedral meshes and thus do not require post-processing. We demonstrate that DefTet matches or exceeds both the quality of the previous best approaches and the performance of the fastest ones. Our approach obtains high-quality tetrahedral meshes computed directly from noisy point clouds, and is the first to showcase high-quality 3D tet-mesh results using only a single image as input.
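To make the "vertex placement and occupancy" parameterization concrete, the following is a minimal sketch of how a surface could be read off a tetrahedral grid: vertices are moved by per-vertex offsets, and the surface consists of the triangular faces that separate an occupied tetrahedron from an unoccupied one (or from the outside of the grid). The function names `deform` and `boundary_faces`, and the plain-list data layout, are assumptions made for illustration and are not taken from the DefTet code.

```python
from collections import defaultdict


def deform(vertices, offsets):
    """Move every grid vertex by its (hypothetical) predicted offset."""
    return [tuple(p + d for p, d in zip(v, o)) for v, o in zip(vertices, offsets)]


def boundary_faces(tets, occupancy):
    """Return the triangular faces that separate occupied from empty space.

    tets: list of 4-tuples of vertex indices.
    occupancy: list of bools, one per tetrahedron.
    A face is on the surface when exactly one of the tetrahedra that share it
    is occupied; a face on the outer border of the grid has only one owner.
    """
    face_owners = defaultdict(list)
    for tet_id, tet in enumerate(tets):
        for skip in range(4):
            # Drop one vertex at a time to enumerate the four faces of the tet.
            face = tuple(sorted(tet[:skip] + tet[skip + 1:]))
            face_owners[face].append(tet_id)

    surface = []
    for face, owners in face_owners.items():
        occupied_count = sum(1 for tet_id in owners if occupancy[tet_id])
        if occupied_count == 1:
            surface.append(face)
    return sorted(surface)


if __name__ == "__main__":
    # Two tetrahedra sharing the face (1, 2, 3); only the first is occupied.
    tets = [(0, 1, 2, 3), (1, 2, 3, 4)]
    print(boundary_faces(tets, [True, False]))
    # [(0, 1, 2), (0, 1, 3), (0, 2, 3), (1, 2, 3)]
```

Because the surface is defined by the tetrahedra themselves, changing an occupancy value or moving a vertex changes the surface directly, with no separate surface-extraction step such as marching cubes.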

Jun Gao, Wenzheng Chen, Tommy Xiang, Clement Fuji Tsang, Alec Jacobson, Morgan McGuire, Sanja Fidler. "Learning Deformable Tetrahedral Meshes for 3D Reconstruction." NeurIPS 2020.

Point Cloud 3D Reconstruction using 3D Supervision

We reconstruct 3D shapes from point clouds using 3D supervision. The input is a noisy point cloud (top-left corner of each subfigure), and we show each reconstructed shape rotated through 360 degrees.

Single Image 3D Reconstruction using 3D Supervision

We reconstruct 3D shapes from a single image using 3D supervision. The input is a single 64x64 image (top-left corner of each subfigure), and we show each reconstructed shape rotated through 360 degrees.

Single Image 3D Reconstruction using 2D Supervision

We reconstruct 3D shapes from a single image using 2D supervision. The input is a single 64x64 image (top-left corner of each subfigure), and we use differentiable rendering to train the network to predict 3D shapes without 3D supervision. We show each reconstructed shape rotated through 360 degrees.

Tetrahedral Meshing

| TetGen | TetWild | Quartet | DefTet |
|---|---|---|---|

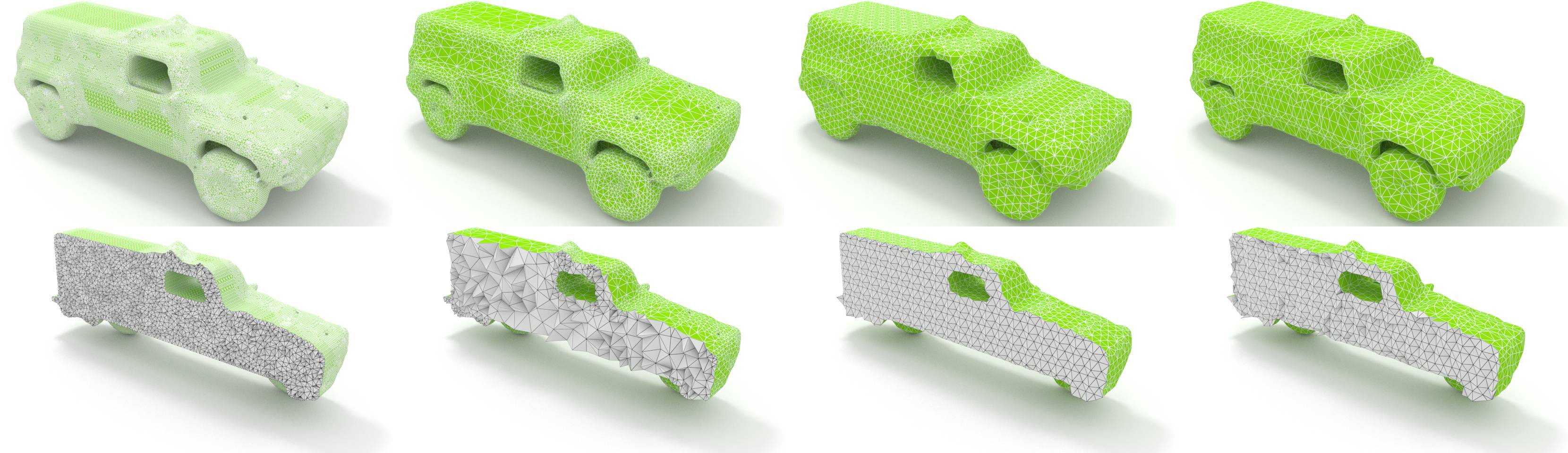

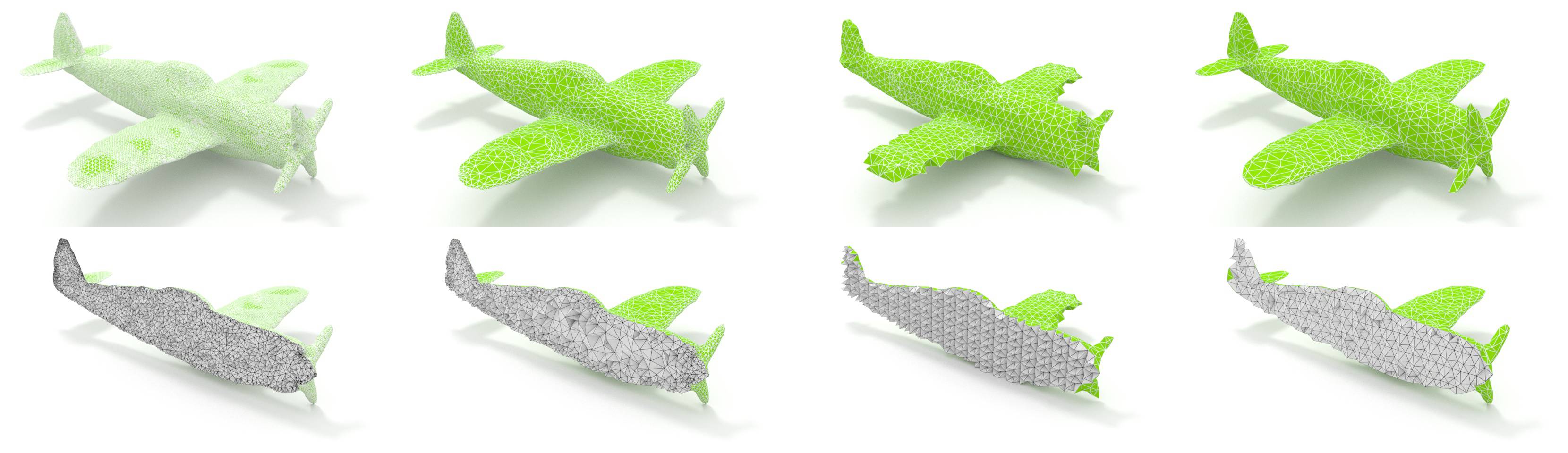

We compare DefTet with traditional tetrahedral meshing techniques from computer graphics: TetGen (1st column), TetWild (2nd column), Quartet (3rd column), and DefTet (4th column).

Optimization Procedure

We show the process of overfitting DefTet to a single shape by jointly optimizing occupancy and deformation, visualized in NVIDIA's Omniverse. We subdivide the tetrahedra of DefTet to obtain higher accuracy and finer geometric details.

We thank Bowen Chen for the visualization of DefTet in NVIDIA's Omniverse.
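The subdivision step mentioned above can be illustrated with a standard scheme that splits one tetrahedron into eight by inserting a vertex at the midpoint of each edge. This page does not say which subdivision rule DefTet uses, so the scheme below, the function names, and the choice of interior diagonal are all assumptions; treat it as a sketch of the general idea, not as the authors' procedure.

```python
def midpoint(a, b):
    """Midpoint of two 3D points given as tuples."""
    return tuple((x + y) / 2.0 for x, y in zip(a, b))


def subdivide_tet(v0, v1, v2, v3):
    """Split one tetrahedron into eight smaller ones via edge midpoints.

    Four children sit at the original corners. The remaining octahedron is cut
    into four children around the diagonal m02-m13 (an arbitrary choice here).
    """
    m01, m02, m03 = midpoint(v0, v1), midpoint(v0, v2), midpoint(v0, v3)
    m12, m13, m23 = midpoint(v1, v2), midpoint(v1, v3), midpoint(v2, v3)
    return [
        # Corner tetrahedra.
        (v0, m01, m02, m03),
        (m01, v1, m12, m13),
        (m02, m12, v2, m23),
        (m03, m13, m23, v3),
        # Interior octahedron, split around the diagonal m02-m13.
        (m02, m13, m01, m12),
        (m02, m13, m12, m23),
        (m02, m13, m23, m03),
        (m02, m13, m03, m01),
    ]


def tet_volume(a, b, c, d):
    """Unsigned volume: |det[b - a, c - a, d - a]| / 6."""
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    w = [d[i] - a[i] for i in range(3)]
    det = (u[0] * (v[1] * w[2] - v[2] * w[1])
           - u[1] * (v[0] * w[2] - v[2] * w[0])
           + u[2] * (v[0] * w[1] - v[1] * w[0]))
    return abs(det) / 6.0


if __name__ == "__main__":
    unit = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
    children = subdivide_tet(*unit)
    # The children should tile the parent, so their volumes sum to the parent's.
    print(len(children), sum(tet_volume(*t) for t in children), tet_volume(*unit))
```

Each child has one eighth of the parent's volume, so one round of subdivision doubles the resolution along every edge. Each child then gets its own occupancy value and its new vertices get their own offsets.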

Novel View Synthesis and Multiview 3D Reconstruction

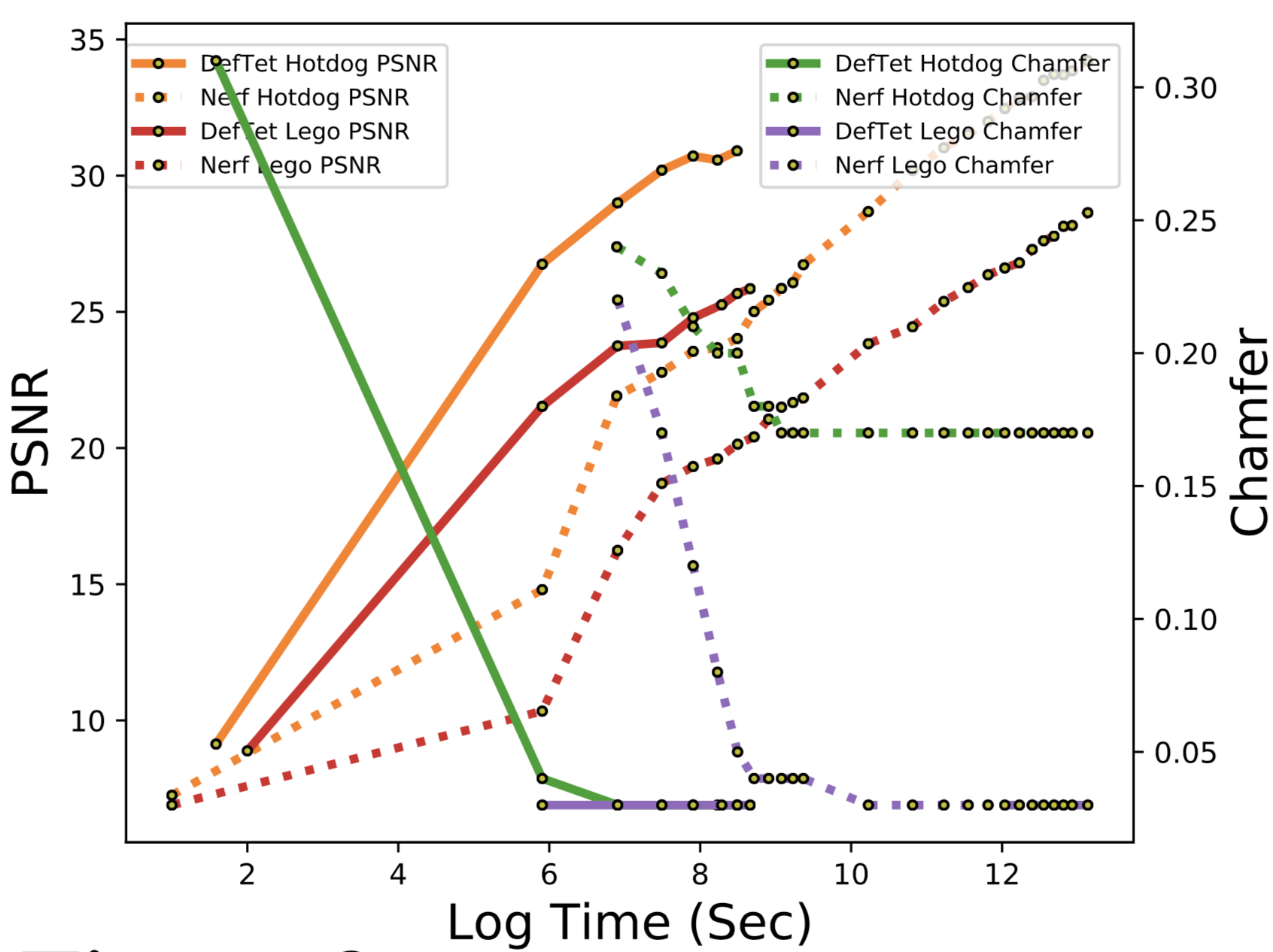

| Novel View Synthesis | Speed vs. performance |
|---|---|

| Physics-based Simulation |
|---|

DefTet synthesizes realistic novel views (first row). Because the output is a tetrahedral mesh, it can be used directly in physics-based simulation. The second row shows a simulation in which we manually define the Lamé parameters of a neo-Hookean material. DefTet also converges significantly faster (421 seconds on average) than NeRF (36,000 seconds, around 10 hours), as shown in the last column of the first row.
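For readers unfamiliar with the simulation setup, here is a small sketch of what defining Lamé parameters for a neo-Hookean material can look like. It uses the standard conversion from Young's modulus and Poisson's ratio, and one common compressible neo-Hookean energy density, Ψ(F) = μ/2 (tr(FᵀF) − 3) − μ ln J + λ/2 (ln J)², with J = det F. The page does not state which energy variant or parameter values were used, so the formula choice, the function names, and the example values are assumptions.

```python
import math


def lame_parameters(youngs_modulus, poisson_ratio):
    """Convert (E, nu) to the Lamé parameters (mu, lambda)."""
    mu = youngs_modulus / (2.0 * (1.0 + poisson_ratio))
    lam = (youngs_modulus * poisson_ratio
           / ((1.0 + poisson_ratio) * (1.0 - 2.0 * poisson_ratio)))
    return mu, lam


def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def neo_hookean_energy_density(F, mu, lam):
    """Energy density of one tetrahedron with deformation gradient F (3x3)."""
    J = det3(F)
    if J <= 0.0:
        raise ValueError("inverted or degenerate element: det(F) must be positive")
    # tr(F^T F) equals the squared Frobenius norm of F.
    trace_C = sum(F[i][j] ** 2 for i in range(3) for j in range(3))
    log_J = math.log(J)
    return 0.5 * mu * (trace_C - 3.0) - mu * log_J + 0.5 * lam * log_J ** 2


if __name__ == "__main__":
    # Hypothetical material values, chosen only for illustration.
    mu, lam = lame_parameters(youngs_modulus=1.0, poisson_ratio=0.25)
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    stretched = [[2.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    print(mu, lam)
    print(neo_hookean_energy_density(identity, mu, lam))
    print(neo_hookean_energy_density(stretched, mu, lam))
```

The energy is zero for an undeformed element and grows as the element is stretched or compressed. This kind of per-tetrahedron energy requires a volumetric mesh, which a tetrahedral mesh output provides without further conversion.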
