Neural Brushstroke Engine (NeuBE) includes a GAN model that learns to mimic many drawing media styles from unlabeled images. The resulting model can be directly controlled by user strokes, with the style code z corresponding to the style of the interactive brush (and not the final image). Together with a patch-based painting engine, NeuBE allows seamless drawing on a canvas of any size, in a wide variety of learned natural and novel AI brush styles. Our model can be trained on only about 200 images of drawing media, is shown to match the training styles well, and generalizes to unseen out-of-distribution styles. This invites novel applications, like text-based retrieval of brushes and matching brushes to existing art to allow interactive painting in that style. Our generator supports user control of stroke color for any brush style and compositing of strokes on a clear background. We also support automatic stylization of line drawings. Code, data and demo are coming soon.

NeuBE's continuous latent space of brushes allows text-based search over interactive tools for the first time. By leveraging the CLIP embedding space, our system can discover interactive brush styles very different from the training data.

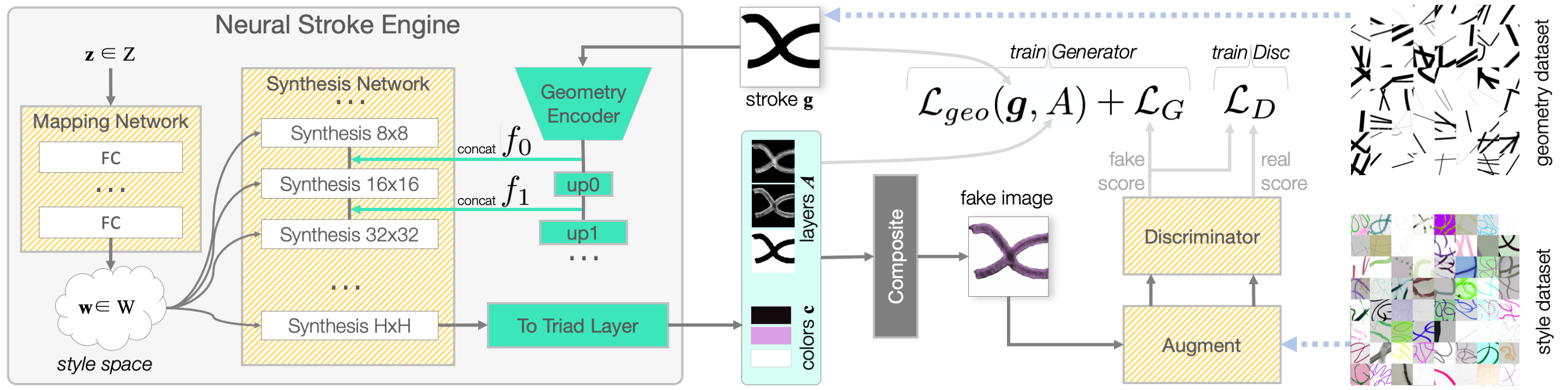

To approximate a distribution of interactive drawing styles, NeuBE is trained on a style dataset of unlabeled scribbles in many target media and an unpaired geometry dataset of plain black-on-white strokes, generated synthetically. NeuBE's patch-based generator extends the StyleGAN2 architecture (striped yellow blocks) to condition image generation on stroke geometry g. Represented as a binary image, g is passed through a pre-trained Geometry Encoder to produce spatial features that are concatenated with the StyleGAN features. Instead of outputting RGB, the final ToTriad layer of our generator produces a decomposed image representation that allows recoloring, compositing and formulating a geometric loss $L_{geo}$.

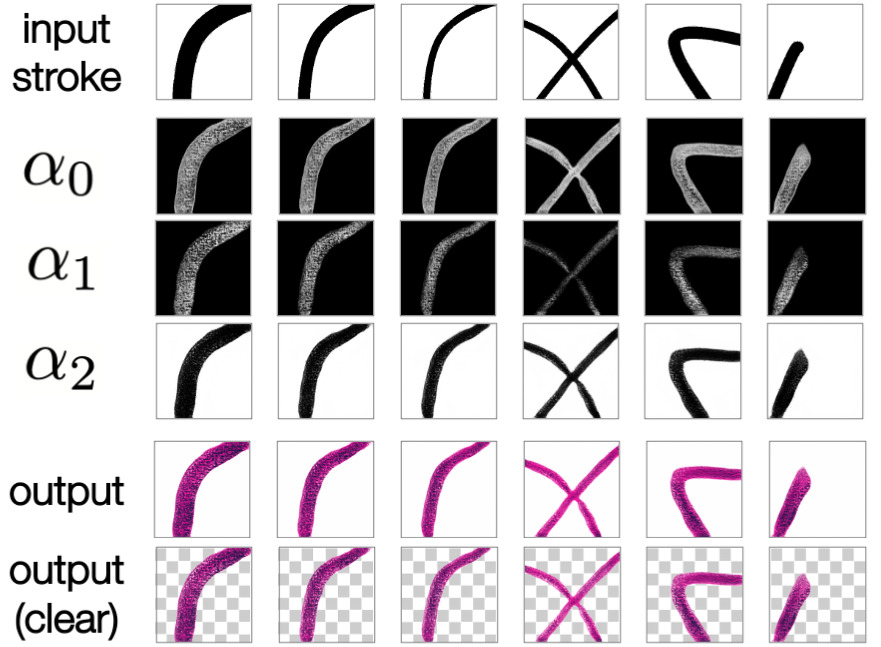

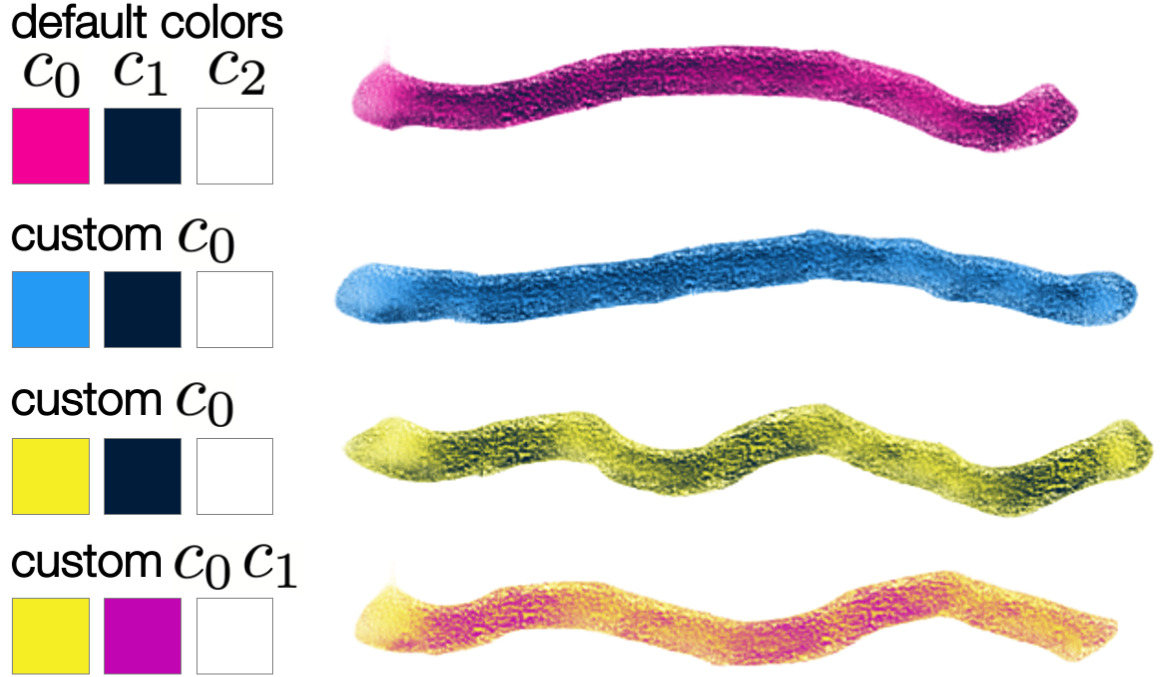

Instead of RGB images, the ToTriad layer outputs an Alpha-color (AC) parametrization: 3 colors and 3 spatial alpha maps that can be deterministically composited to produce the final image. This allows us to support user control of color for any brush style z, as well as geometric control and compositing of clear strokes.
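To make the idea of deterministic compositing concrete, here is a minimal sketch. The exact compositing formula is not given on this page, so the code assumes the simplest option: the 3 alpha maps are non-negative and sum to 1 at each pixel, and each output pixel is the alpha-weighted sum of the 3 colors. The function name `composite_ac` and the toy data are hypothetical, and a real implementation would use an array library rather than nested lists.

```python
from typing import List, Sequence, Tuple

Color = Tuple[float, float, float]   # RGB in [0, 1]
AlphaMap = List[List[float]]         # H x W weights in [0, 1]


def composite_ac(colors: Sequence[Color], alphas: Sequence[AlphaMap]) -> List[List[Color]]:
    """Composite colors and alpha maps into an RGB image.

    Assumption (not from the source): at every pixel the alpha maps are
    non-negative and sum to 1, so each pixel is a convex blend of the colors.
    """
    if len(colors) != len(alphas):
        raise ValueError("need one alpha map per color")
    height, width = len(alphas[0]), len(alphas[0][0])
    image: List[List[Color]] = []
    for y in range(height):
        row: List[Color] = []
        for x in range(width):
            # Weighted sum of the colors, one RGB channel at a time.
            pixel = tuple(
                sum(alpha[y][x] * color[c] for color, alpha in zip(colors, alphas))
                for c in range(3)
            )
            row.append(pixel)
        image.append(row)
    return image


# A 1 x 2 toy "stroke": pixel 0 is pure color 0, pixel 1 is half color 0, half color 1.
alphas = [[[1.0, 0.5]], [[0.0, 0.5]], [[0.0, 0.0]]]
red_brush = [(1.0, 0.0, 0.0), (1.0, 1.0, 1.0), (0.0, 0.0, 0.0)]
blue_brush = [(0.0, 0.0, 1.0), (1.0, 1.0, 1.0), (0.0, 0.0, 0.0)]

red_image = composite_ac(red_brush, alphas)
blue_image = composite_ac(blue_brush, alphas)  # same alpha maps, new color
```

Because color and coverage are stored separately, recoloring a stroke in this sketch only swaps an entry in the color list; the alpha maps are reused unchanged.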

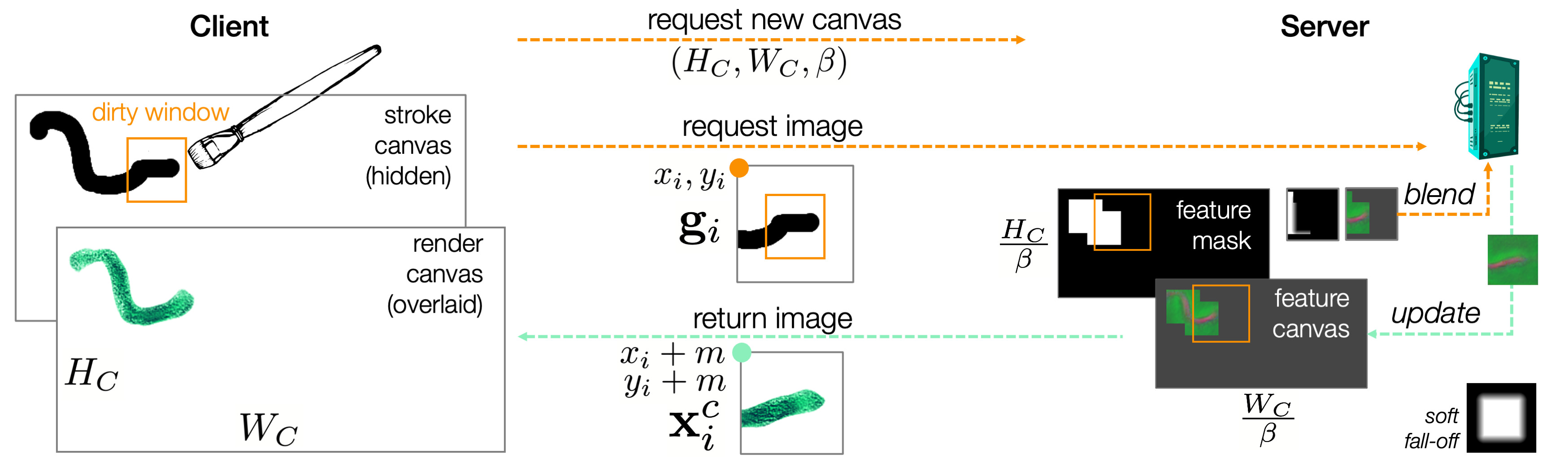

Together with a carefully designed painting engine, the generator backbone allows NeuBE to support interactive drawing in a wide variety of learned styles on a canvas of any size by generating at the patch level. Our demo is implemented as a client-server web application, with the generator running on a remote GPU server and the drawing interface operating in the browser on another machine or tablet.
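As a rough illustration of how a fixed-size generator can cover a canvas of any size, the sketch below computes the top-left corners of overlapping patches that tile a canvas. This is not the authors' painting engine: the names `patch_origins` and `tile_canvas`, the patch size and the stride are all hypothetical, and how NeuBE chooses patches or blends seams is not described here.

```python
from typing import List, Tuple


def patch_origins(length: int, patch: int, stride: int) -> List[int]:
    """Start offsets along one axis so patches of size `patch` cover [0, length)."""
    if patch <= 0 or stride <= 0 or stride > patch:
        raise ValueError("need 0 < stride <= patch")
    if length <= patch:
        return [0]  # a single patch suffices (the caller pads if the canvas is smaller)
    starts = list(range(0, length - patch, stride))
    starts.append(length - patch)  # last patch sits flush with the far edge
    return starts


def tile_canvas(width: int, height: int, patch: int = 128, stride: int = 96) -> List[Tuple[int, int]]:
    """Top-left (x, y) corners of patches covering a width x height canvas."""
    return [
        (x, y)
        for y in patch_origins(height, patch, stride)
        for x in patch_origins(width, patch, stride)
    ]


# Toy example: a 10 x 4 canvas covered by 4 x 4 patches with stride 3.
corners = tile_canvas(10, 4, patch=4, stride=3)
```

Each patch at one of these corners could then be passed to a generator independently, which is what makes the canvas size independent of the generator's output resolution in this sketch.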

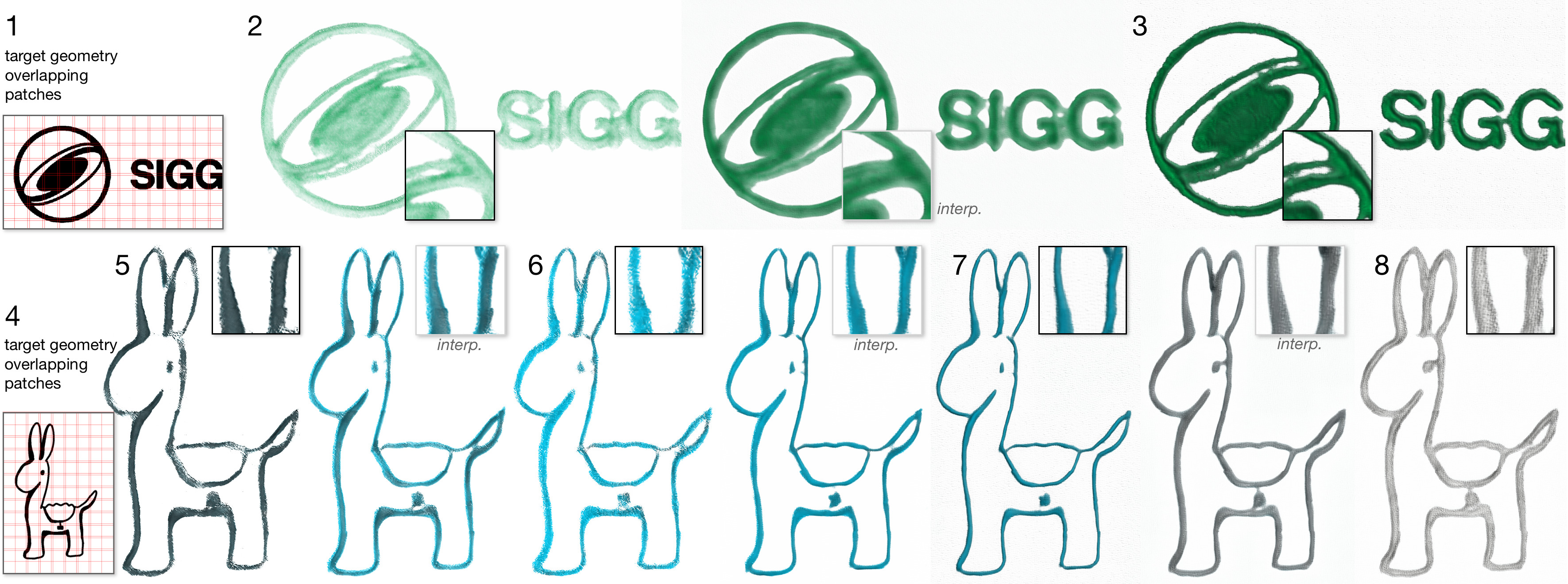

In addition to interactive drawing, NeuBE supports automatic stylization of line drawings in any of the learned styles by running the painting engine on the source broken up into patches, as shown below. Interpolation of styles for these stylizations is also possible (see styles labeled interp.), allowing smooth style transitions for any line drawing.

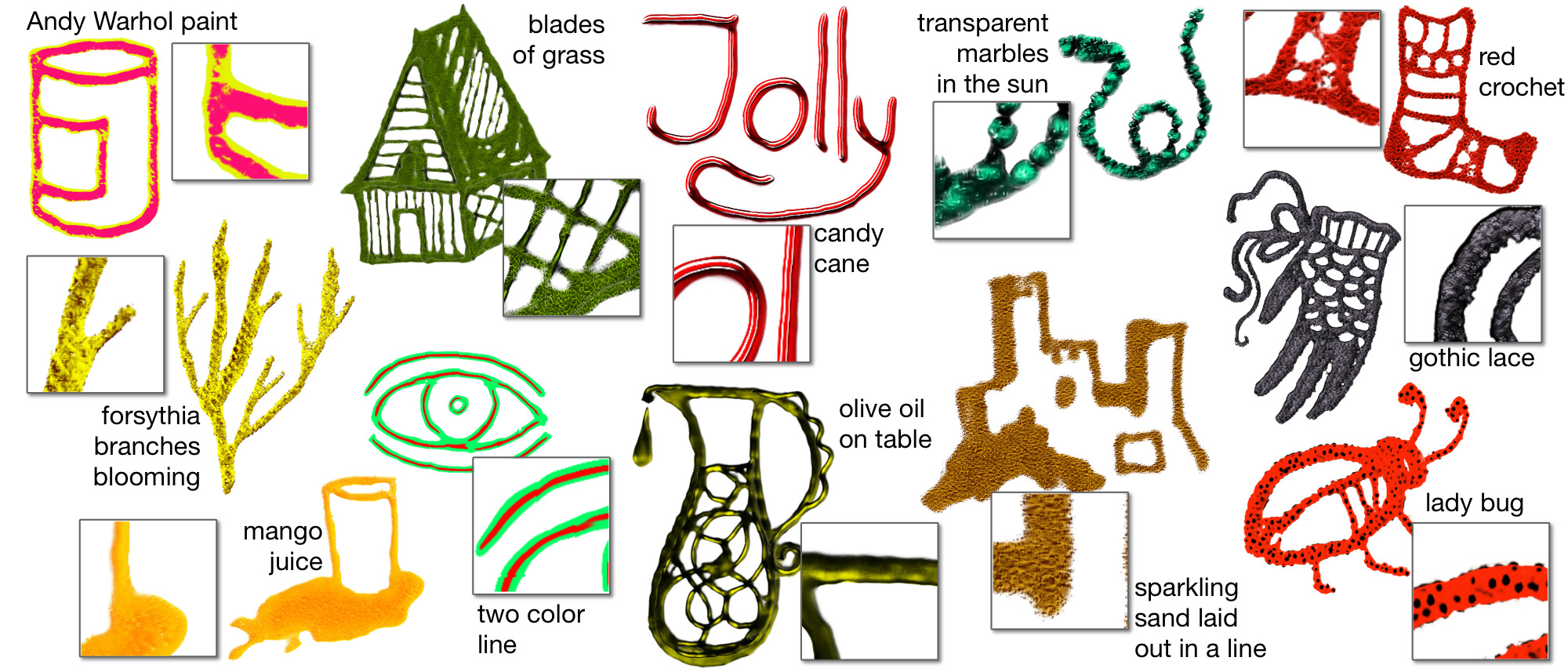
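The style interpolation mentioned above can be sketched as a blend between two style codes. The interpolation scheme is not stated on this page, so the code assumes plain linear interpolation between two latent vectors; `lerp_style` and `style_path` are hypothetical names and the two-dimensional codes are toy values.

```python
from typing import List, Sequence


def lerp_style(z_a: Sequence[float], z_b: Sequence[float], t: float) -> List[float]:
    """Linearly blend two style codes; t=0 gives z_a, t=1 gives z_b."""
    if len(z_a) != len(z_b):
        raise ValueError("style codes must have the same length")
    return [(1.0 - t) * a + t * b for a, b in zip(z_a, z_b)]


def style_path(z_a: Sequence[float], z_b: Sequence[float], steps: int) -> List[List[float]]:
    """Evenly spaced style codes from z_a to z_b, endpoints included."""
    if steps < 2:
        raise ValueError("need at least 2 steps to include both endpoints")
    return [lerp_style(z_a, z_b, i / (steps - 1)) for i in range(steps)]


# Toy 2-D style codes; real codes would come from the trained model's latent space.
path = style_path([0.0, 2.0], [1.0, 0.0], steps=3)
```

Assigning successive codes from such a path to successive patches or strokes would, under this assumption, give a gradual transition from one style to the other.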

Here are some random brush styles learned by NeuBE on our Style Dataset 1, comprised of 148 informally captured scribbles of natural media. All drawing is done interactively.

When embedded into the latent W+ space, training examples are accurately represented as interactive brushes. In addition, embedding styles from unseen datasets shows excellent generalization to all but the most challenging of styles.

Generalization of the latent space to unseen styles allows novel applications for brush search and discovery in the latent space of drawing tools. For example, we use CLIP to search for a brush, given text. Here are some sample discovered styles.

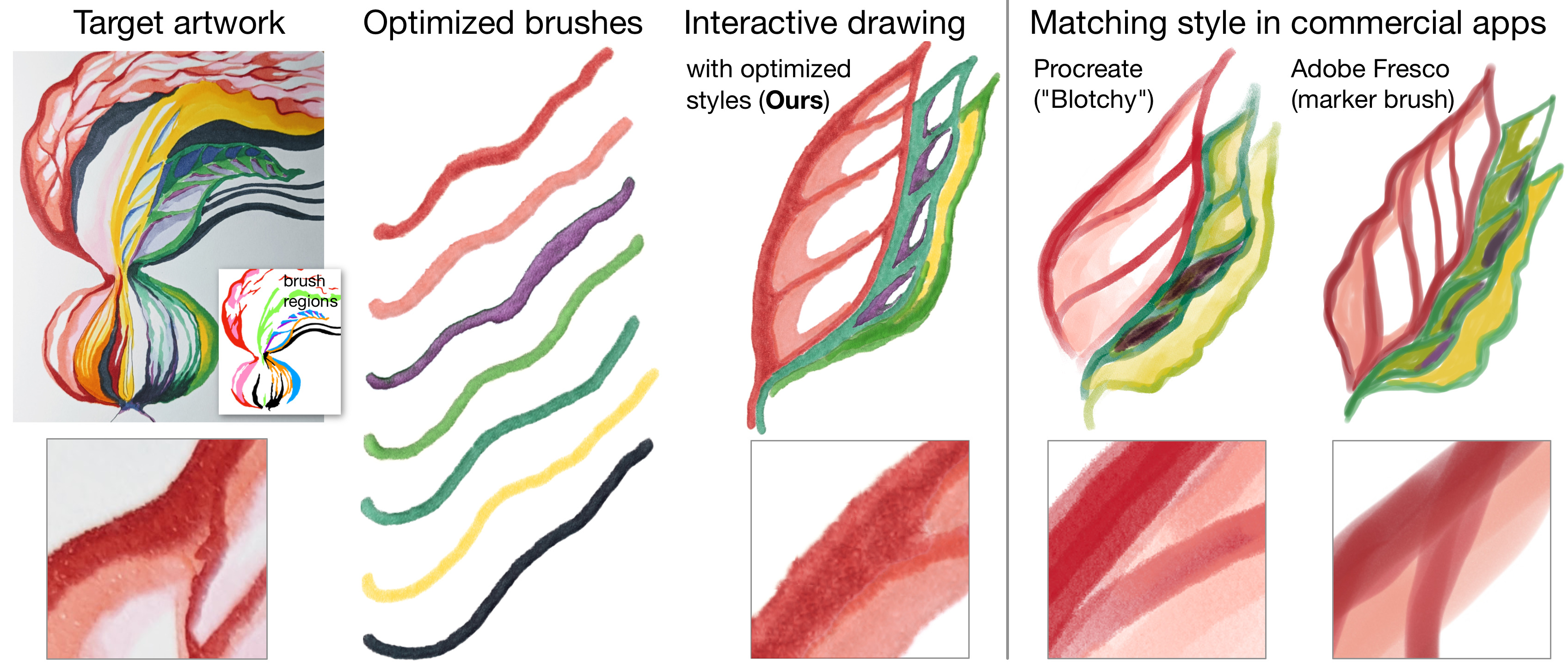
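One simple way to picture text-based brush search is ranking candidate brushes by cosine similarity between a text embedding and an embedding of each brush's rendered stroke. The sketch below shows only that ranking step, with hand-written toy vectors standing in for CLIP embeddings. It is an assumption for illustration, not the authors' procedure, which may instead optimize the latent code directly; `rank_brushes` and the brush names are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def rank_brushes(
    text_embedding: Sequence[float],
    brush_embeddings: Dict[str, Sequence[float]],
    top_k: int = 3,
) -> List[Tuple[str, float]]:
    """Return the top_k (brush name, similarity) pairs, best match first."""
    scored = [
        (name, cosine_similarity(text_embedding, emb))
        for name, emb in brush_embeddings.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Toy 2-D embeddings; real ones would come from a CLIP text and image encoder.
query = [1.0, 0.0]
candidates = {"brush_a": [1.0, 0.0], "brush_b": [0.0, 1.0], "brush_c": [1.0, 1.0]}
best = rank_brushes(query, candidates, top_k=2)
```

With a continuous latent space, the candidate set need not be fixed: new style codes can be sampled and scored the same way.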

We further probe optimizing the brush latent code of a pre-trained model to match an artwork. New art can then be interactively drawn by the user in the style of the original. Our preliminary results show that NeuBE's latent space can faithfully replicate textural details of styles that fail to be accurately matched by commercial digital drawing applications. We are excited to see further work in this area.

Drawing in our web-based user interface. Drawing is performed in the Safari browser on an iPad with an Apple Pencil, while the GAN generator runs on the server. See additional high-resolution drawings: CLIP ("burnt umber oil paint"), CLIP ("candy cane"), several embedded playdoh styles, single style with two-tone color control.

If building on our ideas, code or data, please cite:

@article{shugrina2022neube,
  title={Neural Brushstroke Engine: Learning a Latent Style Space of Interactive Drawing Tools},
  author={Shugrina, Maria and Li, Chin-Ying and Fidler, Sanja},
  journal={ACM Transactions on Graphics (TOG)},
  volume={41},
  number={6},
  year={2022},
  publisher={ACM New York, NY, USA}
}