Neural Geometric Level of Detail:

Real-time Rendering with Implicit 3D Shapes


Abstract

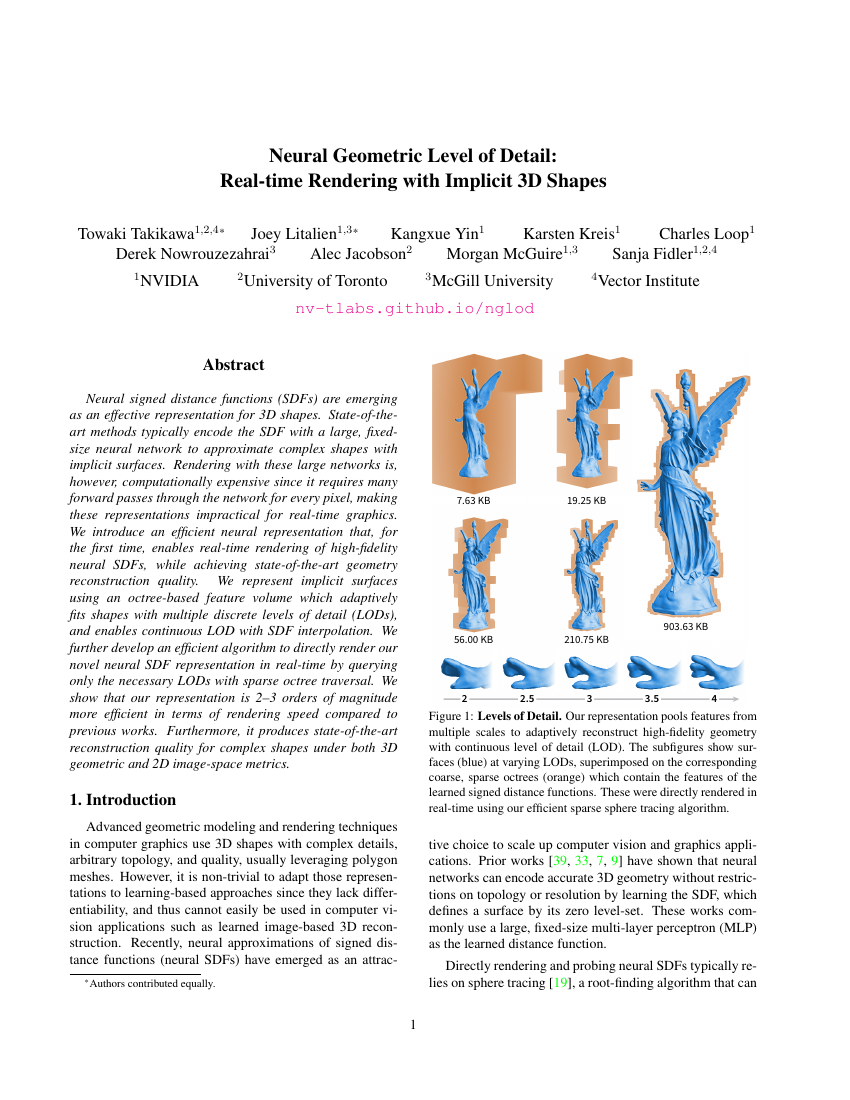

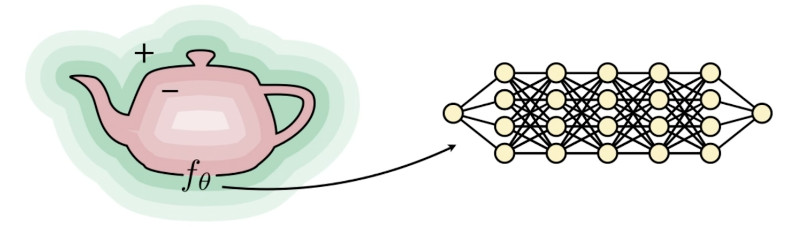

Neural signed distance functions (SDFs) are emerging as an effective representation for 3D shapes. SDFs encode 3D surfaces with a function of position that returns the closest distance to a surface. State-of-the-art methods typically encode the SDF with a large, fixed-size neural network to approximate complex shapes with implicit surfaces. Rendering these large networks is, however, computationally expensive since it requires many forward passes through the network for every pixel, making these representations impractical for real-time graphics applications.
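To make the per-pixel cost concrete, here is a minimal sphere-tracing sketch. It is an illustration only, not the renderer described on this page: `sphere_sdf` is an analytic stand-in for a neural SDF, and `sphere_trace` with its parameters (`max_steps`, `eps`, `t_max`) is a hypothetical helper. Each loop iteration evaluates the SDF once, which for a neural SDF would mean one forward pass through the network.

```python
import math

def sphere_sdf(p, center=(0.0, 0.0, 0.0), radius=1.0):
    """Signed distance to a sphere: negative inside, positive outside."""
    return math.dist(p, center) - radius

def sphere_trace(sdf, origin, direction, max_steps=64, eps=1e-4, t_max=100.0):
    """March along a unit-length ray, advancing by the SDF value at each step.

    Returns (t, evaluations); t is the hit distance, or None if the ray misses.
    """
    t = 0.0
    for step in range(1, max_steps + 1):
        p = tuple(o + t * d for o, d in zip(origin, direction))
        dist = sdf(p)  # one SDF query (one forward pass for a neural SDF)
        if dist < eps:
            return t, step
        t += dist
        if t > t_max:
            return None, step
    return None, max_steps

# A head-on ray converges quickly; an off-center ray needs more queries.
head_on = sphere_trace(sphere_sdf, (0.0, 0.0, -3.0), (0.0, 0.0, 1.0))
off_center = sphere_trace(sphere_sdf, (0.9, 0.0, -3.0), (0.0, 0.0, 1.0))
miss = sphere_trace(sphere_sdf, (5.0, 0.0, -3.0), (0.0, 0.0, 1.0))
```

Multiplying the number of queries per ray by the number of pixels gives a rough sense of why a large network per query is costly.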

Neural SDFs are typically encoded using large, fixed-size MLPs, which are expensive to render.

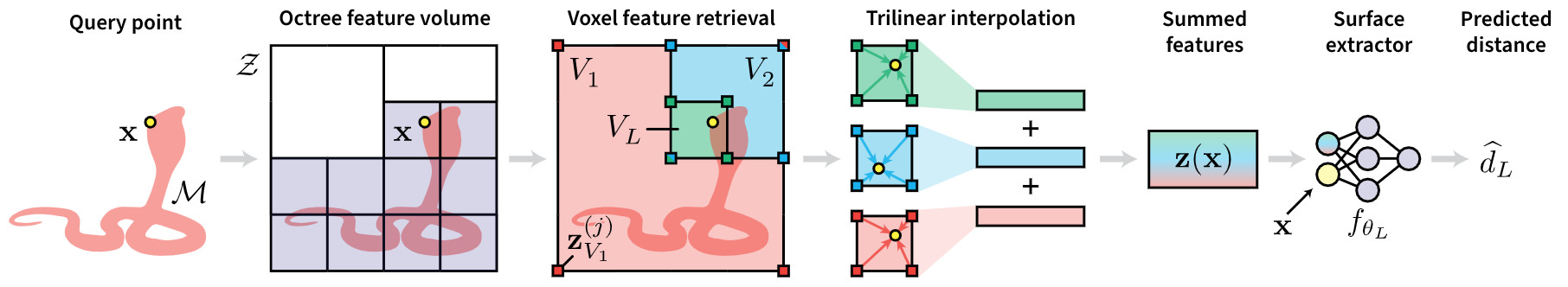

We introduce an efficient neural representation that, for the first time, enables real-time rendering of high-fidelity neural SDFs, while achieving state-of-the-art geometry reconstruction quality. We represent implicit surfaces using an octree-based feature volume which adaptively fits shapes with multiple discrete levels of detail (LODs), and enables continuous LOD with SDF interpolation. We further develop an efficient algorithm to directly render our novel neural SDF representation in real-time by querying only the necessary LODs with sparse octree traversal. We show that our representation is 2-3 orders of magnitude more efficient in terms of rendering speed compared to previous works. Furthermore, it produces state-of-the-art reconstruction quality for complex shapes under both 3D geometric and 2D image-space metrics.
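Continuous LOD via SDF interpolation can be sketched as a linear blend between the distances predicted at two adjacent discrete levels. The snippet below is a minimal sketch under that assumption; `blend_lod` and the list of per-level distances are hypothetical names introduced for illustration, and the distance values are made up.

```python
import math

def blend_lod(distances, lod):
    """Blend per-level distance predictions at a fractional level of detail.

    distances[i] is the signed distance predicted at discrete LOD i
    (hypothetical values here); lod may be fractional, e.g. 1.5.
    """
    top = len(distances) - 1
    lod = min(max(float(lod), 0.0), float(top))  # clamp to the valid range
    lower = int(math.floor(lod))
    upper = min(lower + 1, top)
    alpha = lod - lower                          # blend weight in [0, 1)
    return (1.0 - alpha) * distances[lower] + alpha * distances[upper]

per_level = [0.5, 0.3, 0.25]          # illustrative distances at LODs 0, 1, 2
halfway = blend_lod(per_level, 0.5)   # midway between LOD 0 and LOD 1
```

With a blend like this, the rendered surface can transition smoothly between levels instead of popping when the LOD changes.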

We encode shapes using a sparse voxel octree (SVO) based feature volume, where the levels of the tree correspond to LODs. This lets us use a very small MLP, which accelerates rendering by 2-3 orders of magnitude.
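The following is a minimal sketch of a multi-level feature lookup, under several stated assumptions: each level is stored as a dense grid of corner features rather than a sparse octree, features are trilinearly interpolated inside the containing voxel, and features are summed from the coarsest level up to the requested LOD. All names (`make_level`, `trilinear`, `lod_feature`) are hypothetical, and the small MLP that would decode the resulting feature into a distance is omitted.

```python
def make_level(res, feat_fn):
    """Build one dense level: features at the (res + 1)^3 voxel corners of [0, 1]^3.

    A dense grid stands in for one level of a sparse voxel octree (assumption).
    """
    grid = [[[feat_fn(i / res, j / res, k / res) for k in range(res + 1)]
             for j in range(res + 1)]
            for i in range(res + 1)]
    return res, grid

def trilinear(level, x):
    """Trilinearly interpolate the corner features of the voxel containing x."""
    res, grid = level
    base, frac = [], []
    for coord in x:
        s = min(max(coord, 0.0), 1.0) * res
        i = min(int(s), res - 1)      # keep i + 1 inside the grid
        base.append(i)
        frac.append(s - i)
    (i, j, k), (u, v, w) = base, frac
    dim = len(grid[0][0][0])
    out = [0.0] * dim
    for di, wu in ((0, 1.0 - u), (1, u)):
        for dj, wv in ((0, 1.0 - v), (1, v)):
            for dk, ww in ((0, 1.0 - w), (1, w)):
                corner = grid[i + di][j + dj][k + dk]
                for c in range(dim):
                    out[c] += wu * wv * ww * corner[c]
    return out

def lod_feature(levels, x, lod):
    """Sum interpolated features from level 0 up to and including `lod`."""
    total = None
    for level in levels[:lod + 1]:
        f = trilinear(level, x)
        total = f if total is None else [a + b for a, b in zip(total, f)]
    return total

# Toy features equal to the corner position, so interpolation is easy to check.
levels = [make_level(2 ** (l + 1), lambda x, y, z: [x, y, z]) for l in range(3)]
feature = lod_feature(levels, (0.3, 0.6, 0.9), lod=2)
```

Because most of the shape information lives in the interpolated features, the decoder that consumes `feature` can be much smaller than a single large network that must encode the whole shape.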

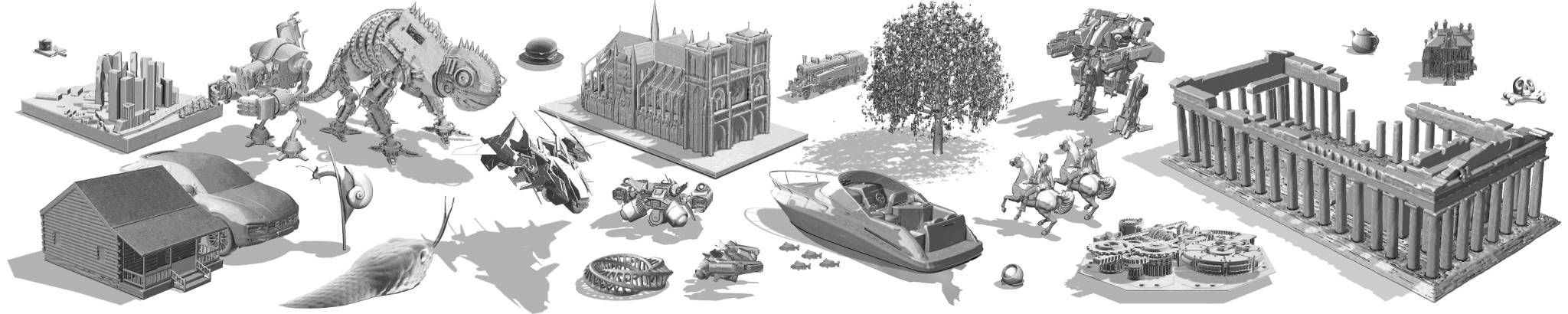

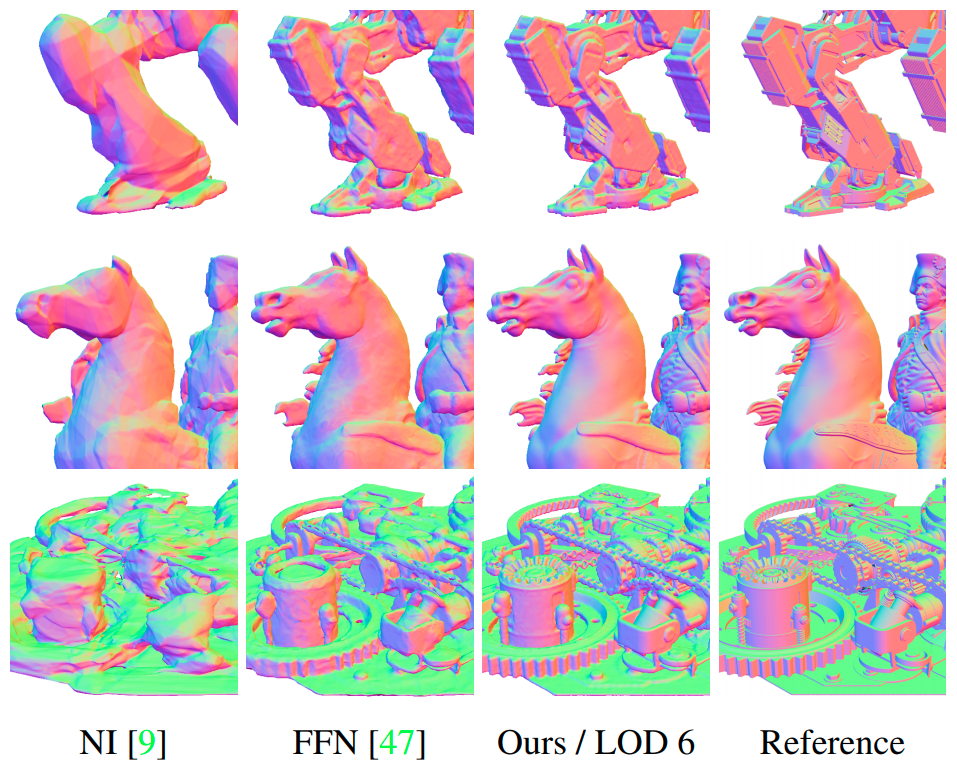

We qualitatively compare the mesh reconstructions. Only ours is able to recover fine details, with speeds 50× faster than FFN and comparable to NI. We render surface normals to highlight geometric details.

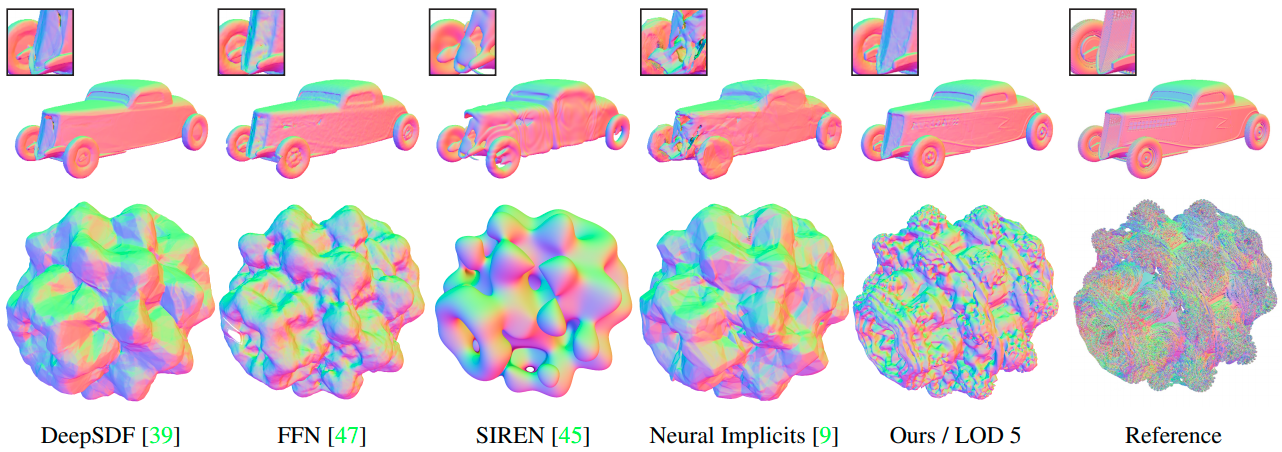

We test against two difficult analytic SDF examples from Shadertoy: the Oldcar, which contains a highly non-metric signed distance field, and the Mandelbulb, which is a recursive fractal structure that can only be expressed using implicit surfaces. Only our architecture can reasonably reconstruct these hard cases. We render surface normals to highlight geometric details.
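As a small illustration of the two ideas in this caption, the sketch below estimates surface normals from an implicit function by central differences and checks the gradient magnitude. It assumes that "non-metric" refers to a field whose values are not true Euclidean distances, so its gradient norm deviates from 1; `sdf_gradient`, `normal_and_norm`, and the two toy fields are hypothetical and unrelated to the Shadertoy scenes.

```python
import math

def sdf_gradient(sdf, p, h=1e-4):
    """Central-difference gradient of a scalar field at point p."""
    grad = []
    for axis in range(3):
        fwd, bwd = list(p), list(p)
        fwd[axis] += h
        bwd[axis] -= h
        grad.append((sdf(fwd) - sdf(bwd)) / (2.0 * h))
    return grad

def normal_and_norm(sdf, p):
    """Return (unit normal, gradient magnitude); the normal is the normalized gradient."""
    g = sdf_gradient(sdf, p)
    norm = math.sqrt(sum(c * c for c in g))
    if norm == 0.0:
        return g, 0.0  # gradient vanishes (e.g. at the sphere center)
    return [c / norm for c in g], norm

def sphere(p):
    """True signed distance to the unit sphere: gradient norm is 1 away from the center."""
    return math.sqrt(sum(c * c for c in p)) - 1.0

def scaled_sphere(p):
    """Same zero level set, but values are 3x the true distance (not a metric SDF)."""
    return 3.0 * sphere(p)

n_true, norm_true = normal_and_norm(sphere, (0.0, 0.0, 2.0))
n_scaled, norm_scaled = normal_and_norm(scaled_sphere, (0.0, 0.0, 2.0))
```

Both fields describe the same surface and yield the same normal direction, but only the first returns true distances, which is what makes fields of the second kind harder to fit and to sphere-trace safely.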

Additional reconstructions.

Neural Geometric Level of Detail

Towaki Takikawa*, Joey Litalien*, Kangxue Yin, Karsten Kreis, Charles Loop, Derek Nowrouzezahrai, Alec Jacobson, Morgan McGuire & Sanja Fidler

*Authors contributed equally.

Please send feedback and questions to Towaki Takikawa.

We would like to thank Jean-Francois Lafleche, Peter Shirley, Kevin Xie, Jonathan Granskog, Alex Evans, and Alex Bie at NVIDIA for interesting discussions throughout the project.

We also thank Peter Shirley, Alexander Majercik, Jacob Munkberg, David Luebke, Jonah Philion, and Jun Gao for their help with paper editing.

Results

Paper

Real-time Rendering with Implicit 3D Shapes

Citation

@inproceedings{takikawa2021nglod,
  title = {Neural Geometric Level of Detail: Real-time Rendering with Implicit {3D} Shapes},
  author = {Towaki Takikawa and
            Joey Litalien and
            Kangxue Yin and
            Karsten Kreis and
            Charles Loop and
            Derek Nowrouzezahrai and
            Alec Jacobson and
            Morgan McGuire and
            Sanja Fidler},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2021}
}

Acknowledgements