Abstract

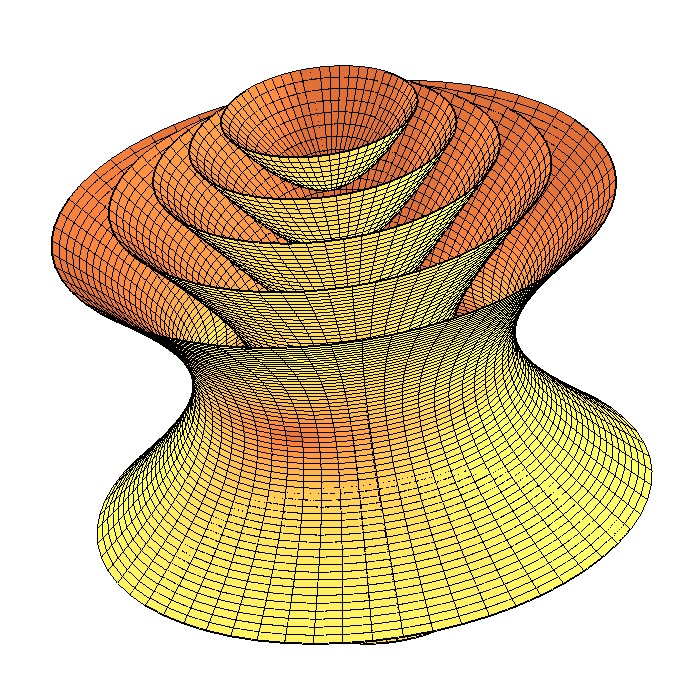

In machine learning, data is usually represented in a (flat) Euclidean space, where distances between points are measured along straight lines. Researchers have recently considered more exotic (non-Euclidean) Riemannian manifolds such as hyperbolic space, which is well suited to tree-like data. In this paper, we propose a representation living on a pseudo-Riemannian manifold of constant nonzero curvature. It is a generalization of hyperbolic and spherical geometries in which the nondegenerate metric tensor is not positive definite. We provide the necessary learning tools in this geometry and extend gradient-based optimization techniques to it. More specifically, we provide closed-form expressions for distances via geodesics and define a descent direction that guarantees the minimization of the objective function. We apply our novel framework to graph representations.