We present \(\mathcal{X}^3\) (pronounced XCube), a novel generative model for high-resolution sparse 3D voxel grids with arbitrary attributes. Our model can generate millions of voxels with a finest effective resolution of up to \(1024^3\) in a feed-forward fashion without time-consuming test-time optimization. To achieve this, we employ a hierarchical voxel latent diffusion model which generates progressively higher resolution grids in a coarse-to-fine manner using a custom framework built on the highly efficient VDB data structure. Apart from generating high-resolution objects, we demonstrate the effectiveness of XCube on large outdoor scenes at scales of 100m\(\times\)100m with a voxel size as small as 10cm. We observe clear qualitative and quantitative improvements over past approaches. In addition to unconditional generation, we show that our model can be used to solve a variety of tasks such as user-guided editing, scene completion from a single scan, and text-to-3D.
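As a quick sanity check on the scales quoted above, the arithmetic below shows why a 100 m scene at 10 cm voxels needs roughly a \(1024^3\) grid, and why storing that grid sparsely matters. The occupied-voxel count of 5 million is a hypothetical stand-in for "millions of voxels", not a figure reported here.

```python
# Back-of-the-envelope arithmetic for the resolutions quoted in the abstract.
# Integer centimetres avoid floating-point rounding.
scene_extent_cm = 100 * 100      # 100 m per side
voxel_size_cm = 10               # 10 cm voxels

voxels_per_side = scene_extent_cm // voxel_size_cm
print(voxels_per_side)           # 1000, which fits inside a 1024-wide grid

dense_cells = 1024 ** 3
print(f"{dense_cells:,}")        # 1,073,741,824 cells if stored densely

# Hypothetical occupied count, standing in for "millions of voxels".
occupied = 5_000_000
occupied_fraction = occupied / dense_cells
print(f"{occupied_fraction:.2%}")  # 0.47% of the dense grid
```

Under this assumption, a sparse structure only has to store and process a fraction of a percent of the cells a dense grid would.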

A sparse voxel hierarchy is a sequence of coarse-to-fine 3D sparse voxel grids such that every fine voxel is contained within a coarser voxel.
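The containment property can be checked directly on integer voxel indices. The sketch below assumes each level is exactly twice as fine as the one above it and that all levels share an origin; neither detail is specified here, and the function names are hypothetical.

```python
from typing import List, Set, Tuple

Voxel = Tuple[int, int, int]

def parent(v: Voxel, factor: int = 2) -> Voxel:
    # Floor division maps a fine voxel index to the coarse voxel containing it
    # (Python's // floors, so this is also correct for negative coordinates).
    return (v[0] // factor, v[1] // factor, v[2] // factor)

def is_sparse_voxel_hierarchy(levels: List[Set[Voxel]], factor: int = 2) -> bool:
    """levels[0] is the coarsest grid; each later grid is `factor` times finer."""
    for coarse, fine in zip(levels, levels[1:]):
        if any(parent(v, factor) not in coarse for v in fine):
            return False
    return True

coarse = {(0, 0, 0), (-1, 0, 0)}
fine = {(0, 1, 1), (1, 0, 0), (-1, 0, 1)}
print(is_sparse_voxel_hierarchy([coarse, fine]))                 # True
print(is_sparse_voxel_hierarchy([coarse, fine | {(4, 0, 0)}]))   # False
```

The second call fails because voxel `(4, 0, 0)` lies in coarse voxel `(2, 0, 0)`, which is not part of the coarser grid.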

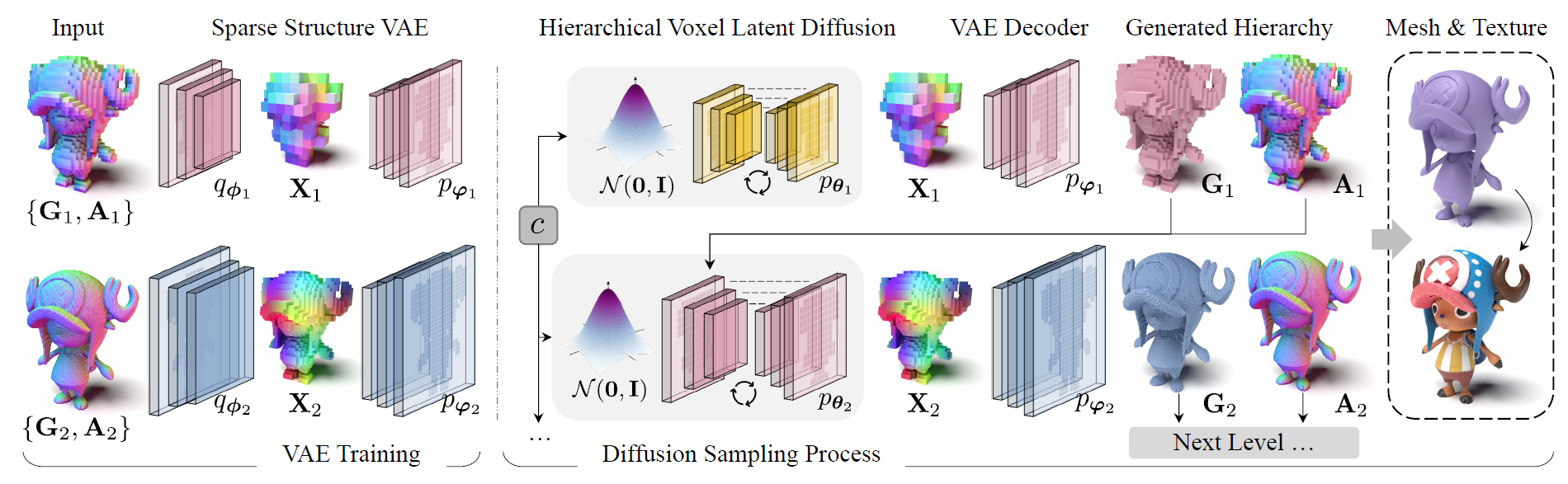

Our method trains a hierarchy of latent diffusion models over a hierarchy of sparse voxel grids \(\mathcal{G} = \{G_1, ..., G_L\}\). We first train a sparse structure VAE that learns a compact latent representation for each level of the hierarchy. We then train a latent diffusion model to generate each level of the hierarchy conditioned on the coarser level above it. At inference time, we simply run each diffusion model in a cascaded fashion from coarse to fine to generate novel shapes and scenes. Because the decoder of the sparse structure VAE is also used at inference time, our generated high-resolution voxel grids additionally carry attributes such as normals and semantics, which can be used in downstream applications.
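The cascaded inference loop described above can be sketched structurally as follows. This is not the XCube implementation: each level's latent diffusion model and VAE decoder is replaced by a hypothetical random stand-in (`toy_conditional_sampler`) that only proposes fine voxels inside the coarse grid it is conditioned on, the coarsest level is a fixed placeholder, a 2x refinement per level is assumed, and attribute decoding (normals, semantics) is omitted.

```python
import random
from typing import Callable, List, Set, Tuple

Voxel = Tuple[int, int, int]
Grid = Set[Voxel]

def children(v: Voxel) -> List[Voxel]:
    """The 8 fine voxels inside coarse voxel v, assuming 2x refinement."""
    x, y, z = v
    return [(2 * x + dx, 2 * y + dy, 2 * z + dz)
            for dx in (0, 1) for dy in (0, 1) for dz in (0, 1)]

def toy_conditional_sampler(rng: random.Random) -> Callable[[Grid], Grid]:
    """Hypothetical stand-in for one level's diffusion model plus VAE decoder.

    It only proposes fine voxels inside the coarse grid it is conditioned on.
    """
    def sample(coarse: Grid) -> Grid:
        fine: Grid = set()
        for v in sorted(coarse):  # sorted so random draws are reproducible
            kids = [c for c in children(v) if rng.random() < 0.5]
            fine.update(kids or children(v)[:1])  # keep at least one child
        return fine
    return sample

def cascaded_generate(sample_coarsest: Callable[[], Grid],
                      conditional_samplers: List[Callable[[Grid], Grid]]) -> List[Grid]:
    """Generate G_1 first, then each finer level conditioned on the one above."""
    levels = [sample_coarsest()]
    for sample in conditional_samplers:
        levels.append(sample(levels[-1]))
    return levels

rng = random.Random(0)
levels = cascaded_generate(lambda: {(0, 0, 0), (1, 0, 0)},
                           [toy_conditional_sampler(rng) for _ in range(2)])
print([len(g) for g in levels])  # voxel count per level, coarse to fine
```

The point of the design is that each level only ever has to reason about voxels inside the previous, coarser grid, so every output of the cascade satisfies the containment property of a sparse voxel hierarchy by construction.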

Our method is capable of generating intricate geometry and thin structures.

We generate high-resolution shapes from text. Textures are generated by a separate model as a post-processing step.

Unconditional scene generation with semantics trained on autonomous driving data.

Trained on a small synthetic dataset, our model highlights the level of detail and spatial resolution XCube can achieve.

Conditioning our model on single LiDAR scans and accumulating the results to reconstruct large drives.

@inproceedings{ren2024xcube,
title={XCube: Large-Scale 3D Generative Modeling using Sparse Voxel Hierarchies},
author={Ren, Xuanchi and Huang, Jiahui and Zeng, Xiaohui and Museth, Ken and Fidler, Sanja and Williams, Francis},
booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
year={2024}
}

The authors acknowledge James Lucas and Jun Gao for their help proofreading the paper and for their feedback during the project. We also thank Jonah Philion for generously sharing his compute quota allowing us to train our model before the deadline.