DreamGen

Unlocking Generalization in Robot Learning through Video World Models

We introduce DreamGen, a 4-stage pipeline for generating neural trajectories, i.e., synthetic robot data generated from video world models. This work is the first in the literature to enable zero-shot behavior generalization and zero-shot environment generalization: we enable a humanoid robot to perform 22 new behaviors in both seen and unseen environments while requiring teleoperation data from only a single pick-and-place task in one environment. Through DreamGen, we shift the paradigm of robot learning from scaling human teleoperation data to scaling GPU compute through world models.

DreamGen consists of 4 steps:

- We first fine-tune video world models (image-to-video diffusion models) on data from a target robot to learn the dynamics of that robot embodiment.

- We prompt the models with initial frames and language instructions, generating robot videos that include not only in-domain behaviors but also novel behaviors in novel environments.

- We extract pseudo robot actions via a latent action model or an inverse dynamics model (IDM).

- We use these videos labeled with pseudo actions, which we call neural trajectories, for downstream visuomotor policy learning.
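The four steps above can be sketched as a data-generation loop. This is a minimal illustration under stated assumptions, not DreamGen's implementation: `world_model` and `idm` are hypothetical callables standing in for the fine-tuned image-to-video model and the inverse dynamics model, frames and actions are reduced to tuples of floats, and the IDM is assumed to predict one action from each pair of consecutive frames.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

# Hypothetical stand-ins: real frames would be images, real actions robot commands.
Frame = Tuple[float, ...]
Action = Tuple[float, ...]


@dataclass
class NeuralTrajectory:
    instruction: str
    frames: List[Frame]
    actions: List[Action]


def label_with_idm(
    frames: List[Frame], idm: Callable[[Frame, Frame], Action]
) -> List[Action]:
    """Step 3 (assumed form): one pseudo action per pair of consecutive frames."""
    return [idm(frames[t], frames[t + 1]) for t in range(len(frames) - 1)]


def generate_neural_trajectories(
    world_model: Callable[[Frame, str], List[Frame]],
    idm: Callable[[Frame, Frame], Action],
    prompts: Sequence[Tuple[Frame, str]],
) -> List[NeuralTrajectory]:
    """Steps 2-3; `world_model` is assumed to be already fine-tuned (step 1)."""
    trajectories = []
    for initial_frame, instruction in prompts:
        # Step 2: generate a video from an initial frame and a language instruction.
        frames = world_model(initial_frame, instruction)
        # Step 3: label the generated video with pseudo actions.
        actions = label_with_idm(frames, idm)
        trajectories.append(NeuralTrajectory(instruction, frames, actions))
    # Step 4 (not shown): train a visuomotor policy on the returned trajectories.
    return trajectories


# Toy usage: a "world model" that moves a 1-D state by +1 per frame,
# and an "IDM" that recovers the action as the frame difference.
def toy_world_model(frame: Frame, instruction: str) -> List[Frame]:
    return [(frame[0] + i,) for i in range(4)]


def toy_idm(current: Frame, nxt: Frame) -> Action:
    return tuple(b - a for a, b in zip(current, nxt))


trajs = generate_neural_trajectories(toy_world_model, toy_idm, [((0.0,), "pick the cup")])
print(trajs[0].actions)
```

Passing the models in as callables keeps the loop independent of any particular video model or IDM architecture; a latent action model could be swapped in for `idm` the same way.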

With DreamGen, we enable a humanoid robot to perform 22 new verbs in 10 new environments. In the following subsections, we show videos of the generated neural trajectories alongside the execution of a visuomotor policy trained solely on neural trajectories (50 per task) for learning (1) new verbs in the lab, (2) seen verbs in new scenes, and (3) new verbs in new scenes. Note that both the video world model and the IDM were trained only on pick-and-place teleoperation data from a single environment.

#1. Behavior Generalization:

Videos: neural trajectory and real-robot execution for each new behavior.

#2. Environment Generalization:

Videos: neural trajectory and real-robot execution for each new environment task.

#3. Behavior + Environment Generalization:

Videos: neural trajectory and real-robot execution for each new behavior in a new environment.

Performance Comparison

The chart below compares GR00T N1 and GR00T N1 w/ DreamGen across different generalization conditions.

DreamGen for Data Augmentation in Contact-Rich Tasks

While prior work shows that synthetic robot data can be generated through simulation, these approaches still suffer from the sim2real gap and struggle to generate training data for contact-rich tasks. We show that DreamGen enables data augmentation for contact-rich tasks such as manipulating deformable objects (folding) and tool use (hammering), going directly from real to real (real2real) starting from the initial frame. The same pipeline can also be applied to other robotic systems, including a single-arm robot (Franka) and a $100 robot arm (SO-100), as well as to setups with multiple camera views (e.g., wrist cameras). Below are neural trajectories from DreamGen along with the downstream policy co-trained on real-world and neural trajectories.
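Co-training on real-world and neural trajectories can be pictured as drawing each training batch from both pools. The sketch below is a hypothetical illustration: the page does not say how the two sources are mixed, so the function `cotraining_batch`, its fixed per-batch `neural_fraction`, and sampling with replacement are all assumptions, not DreamGen's recipe.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def cotraining_batch(
    real: Sequence[T],
    neural: Sequence[T],
    batch_size: int,
    neural_fraction: float,
    rng: random.Random,
) -> List[Tuple[str, T]]:
    """Sample one batch mixing real-world and neural trajectories.

    `neural_fraction` (hypothetical) is the share of each batch drawn from
    neural trajectories; both pools are sampled with replacement.
    """
    if not 0.0 <= neural_fraction <= 1.0:
        raise ValueError("neural_fraction must be in [0, 1]")
    n_neural = round(batch_size * neural_fraction)
    n_real = batch_size - n_neural
    # Tag each sample with its source so losses or metrics can be split later.
    batch = [("real", x) for x in rng.choices(real, k=n_real)]
    batch += [("neural", x) for x in rng.choices(neural, k=n_neural)]
    rng.shuffle(batch)  # avoid a fixed real-then-neural order within the batch
    return batch


rng = random.Random(0)
batch = cotraining_batch(
    ["r1", "r2"], ["n1", "n2", "n3"], batch_size=8, neural_fraction=0.25, rng=rng
)
print(batch)
```

A fixed ratio keeps scarce real-world data represented in every batch even when neural trajectories vastly outnumber it; a different schedule (for example, annealing the ratio) would fit the same interface.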

Videos: neural trajectories and real-robot execution.

The graphs below show the performance gains from DreamGen across different robotic platforms (GR1, Franka, SO-100) and visuomotor robot policies (DP, π0, GR00T N1), highlighting the flexibility of DreamGen.

Data Scaling

We analyze whether increasing the number of neural trajectories leads to better performance by measuring policy performance on RoboCasa in simulation (sample neural trajectories and policy evaluations are shown above). We vary the total number of neural trajectories from 0 to 240k across different amounts of ground-truth data (low, medium, high). We explore both latent actions (LAPA) and IDM actions as pseudo actions. We observe that both IDM and LAPA actions boost performance in all data regimes. We also observe a log-linear relationship between the total number of neural trajectories and downstream robot policy performance, establishing a promising new axis for scaling robot training data.
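To make "log-linear" concrete: policy performance is modeled as a + b·ln(N), where N is the number of neural trajectories. The sketch below fits a and b by ordinary least squares; the function name `fit_log_linear` and the synthetic points in the usage lines are illustrative only and are not DreamGen's measurements.

```python
import math
from typing import Sequence, Tuple


def fit_log_linear(
    num_trajectories: Sequence[float], success_rate: Sequence[float]
) -> Tuple[float, float]:
    """Least-squares fit of success = a + b * ln(N); returns (a, b).

    N must be positive: the N = 0 point (no neural trajectories) has no
    logarithm and would have to be treated separately as a baseline.
    """
    if len(num_trajectories) != len(success_rate) or len(num_trajectories) < 2:
        raise ValueError("need at least two paired points")
    xs = [math.log(n) for n in num_trajectories]
    x_mean = sum(xs) / len(xs)
    y_mean = sum(success_rate) / len(success_rate)
    sxx = sum((x - x_mean) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("need at least two distinct values of N")
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, success_rate))
    b = sxy / sxx  # gain in success rate per unit increase of ln(N)
    a = y_mean - b * x_mean
    return a, b


# Synthetic points generated exactly from a = 0.1, b = 0.05 (not real results).
ns = [1_000, 10_000, 100_000]
ys = [0.1 + 0.05 * math.log(n) for n in ns]
a, b = fit_log_linear(ns, ys)
print(a, b)
```

Under this model, every 10× increase in the number of neural trajectories adds roughly b·ln(10) ≈ 2.3·b to the success rate, which is why the trend appears as a straight line on a log-scaled x-axis.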

DreamGen Bench

Select a subset of the benchmark to see different model rollouts of the same instruction:

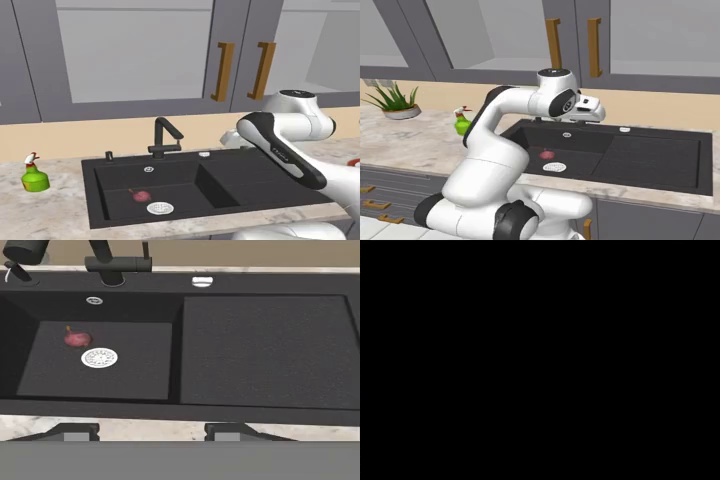

Pick the onion from the sink and place it on the plate located on the counter

We introduce DreamGen Bench, a world modeling benchmark that aims to quantify how well existing video generative models adapt to a specific robot embodiment. We measure two key metrics: instruction following (whether the generated video strictly adheres to the given instruction) and physics following (the physical plausibility of the generated video). We evaluate 4 video world models (Cosmos, WAN 2.1, Hunyuan, CogVideoX) on 4 setups (RoboCasa, GR1 Object, GR1 Behavior, GR1 Environment). We observe a positive correlation between DreamGen Bench scores and downstream task scores.
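The page does not state which correlation measure relates benchmark scores to downstream task scores. As one plausible choice, the sketch below computes the Pearson coefficient between two equal-length score lists, for example one (DreamGen Bench score, downstream success rate) pair per video world model; the function name `pearson_correlation` is hypothetical.

```python
import math
from typing import Sequence


def pearson_correlation(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired scores")
    x_mean = sum(xs) / len(xs)
    y_mean = sum(ys) / len(ys)
    # Covariance and variances, all unnormalized (the 1/n factors cancel).
    cov = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    var_x = sum((x - x_mean) ** 2 for x in xs)
    var_y = sum((y - y_mean) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / math.sqrt(var_x * var_y)
```

A value near +1 indicates that models scoring higher on the benchmark also yield higher downstream success. Python 3.10+ also provides `statistics.correlation` for the same quantity; with only a handful of models, a rank-based measure such as Spearman's would be a reasonable alternative.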