Why Do Robots Need to Think Ahead?

Imagine you're reaching for your coffee mug on a crowded desk. Without thinking about it, your brain predicts how your hand will move, what obstacles it might encounter, and how the mug will feel when grasped. This ability to anticipate future states - what we call "world modeling" - is something we humans do naturally.

For robots, this same ability is crucial. A robot that can predict the consequences of its actions will make better decisions than one that simply reacts to the current moment. When a robot can "imagine" what will happen after it moves, it gains a powerful advantage in complex tasks like picking up objects, opening drawers, or manipulating tools.

Explicit World Modeling is Challenging, But Is It Necessary?

However, teaching robots to predict the future has been incredibly expensive computationally until now. Existing approaches such as UniPi, GR1 & GR2 train the robot policy model to predict entire future video / image frames pixel-by-pixel - like asking someone to paint a detailed portrait just to decide where to place their hand. This approach requires massive generative models that are slow and computationally intensive.

Is it really necessary to predict the future pixel by pixel? For the concrete task of "pick up the water bottle and place it in the yellow container", the core visual information is the position, orientation, and geometry of water bottle and the yellow container. Yet, the pixel-by-pixel prediction also reconstructs irrelevant details, such as a person passing by in the background. Instead, learning a policy-oriented world model is more efficient: we can shift from predicting pixels to predicting embeddings.

Introducing FLARE: A Simple, Lightweight Solution for Joint Policy and Latent World Modeling

This is where our approach, Future LAtent REpresentation Alignment (FLARE), comes in. Instead of forcing the model to predict entire visual scenes, we teach them to predict compact, meaningful representations of future states.

FLARE works by adding a few additional learnable "future tokens" to standard vision-language-action (VLA) models. These tokens are trained to align with the future robot observation embedding as an auxiliary loss alongside the regular action flowmatching loss. In this way, we encourage the denoising policy network to internally reason about the future latent state while maintaining its action prediction capability.

The beauty of FLARE is its simplicity - it requires only minor modifications to existing architectures and adds minimal overhead to both training and inference, yet delivers substantial performance improvements. During inference, no future vision-language embedding needs to be calculated, making the overhead especially minimal at policy deployment time.

A compact yet action aware embedding model

To make FLARE even more powerful, we developed a specialized embedding model that captures exactly what matters for robot control. Our action aware embedding model compresses the robot's vision language observations into a compact representation that's explicitly optimized for predicting actions.

This embedding model is pretrained on diverse robotic datasets spanning different robot embodiments, tasks, and environments, with data mixture visualized in the pie chart below. The result is representation that is compact but understands the essential features of a scene that matter for robot control, filtering out distractions and focusing on actionable information.

Empirical Results of FLARE on Multitask Imitation Learning

Empirically, FLARE achieves state-of-the-art performance on two challenging multitask robotic manipulation imitation learning benchmarks. Specifically, across both benchmarks—one featuring a single robotic arm and the other a humanoid robot—FLARE consistently outperforms baseline methods that rely on explicit world modeling or conventional policy learning approaches.

Beyond simulation benchmarks, we also evaluate FLARE on four real-world GR-1 humanoid manipulation tasks. With 100 trajectories per task, FLARE achieves an average success rate of 95.1%. Below, we showcase the rollout videos of the FLARE trained policy at 1x playback speed.

Learning from Human Egocentric Videos

FLARE can also be naturally extended to trajectories without action annotations, such as human egocentric demonstrations collected by a head-mounted GoPro. This setting is particularly attractive, as collecting human demonstrations is substantially more cost-effective and efficient than teleoperating a robot to execute the same tasks.

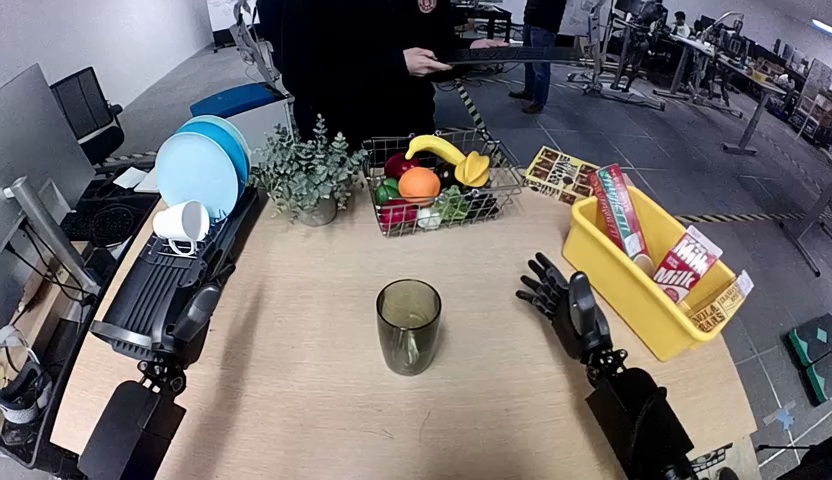

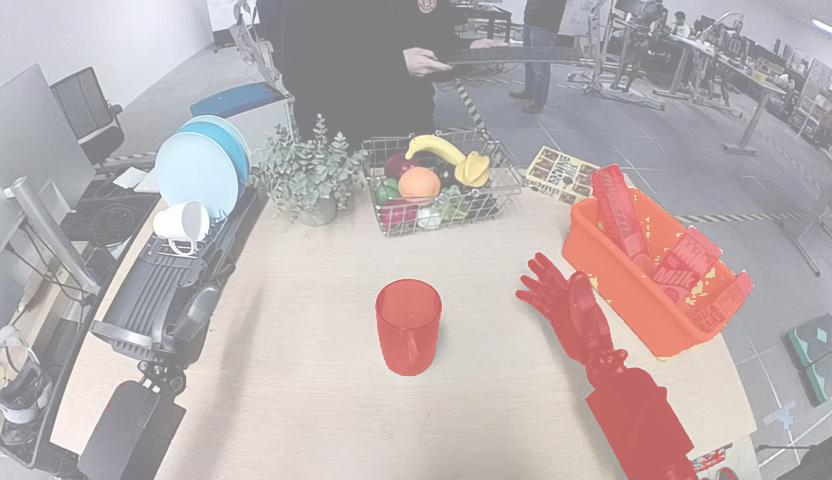

Human Demonstration

Robot Teleoperation Trajectory

However, learning from human videos presents unique challenges. Camera angles differ significantly between a head-mounted GoPro and a robot's perspective. The embodiment gap means humans and robots have different physical capabilities and constraints. Above we show a pair of human egocentric video and a humanoid robot demonstration trajectory performing the similar task.

For our experiments, we chose five objects with novel shapes that demanded special grasping techniques. We collected abundant human demonstrations (150 per object) using a simple head-mounted GoPro, but only a handful (up to 10) of robot demonstrations. We then trained FLARE on this data mixture - blending our limited robot demos, our GR1 in-house pretraining dataset, and the abundant human videos. For robot demos, we used both action flow-matching and future alignment objectives, but for human videos (which lack action labels), we relied solely on the future alignment loss to capture the underlying dynamics.

As shown in the barplot above, with only 1 teleoperated demonstration per object, FLARE trained with 1 shot achieves 37.5% success. Adding human egocentric videos boosts this to 60% with one demo and 80% with 10 demos per object. Below, we show rollout videos of the FLARE trained policy with 1 demo per object performing on five different objects.