Abstract

Modern deep learning-based video portrait generators render synthetic talking-head videos with impressive levels of photorealism, ushering in new user experiences such as videoconferencing with limited-bandwidth connectivity. Their safe adoption, however, requires a mechanism to verify if the rendered video is trustworthy. For instance, in videoconferencing we must identify cases when a synthetic video portrait uses the appearance of an individual without their consent. We term this task ''avatar fingerprinting''. We propose to tackle it by leveraging the observation that each person emotes in unique ways and has characteristic facial motion signatures. These signatures can be directly linked to the person ''driving'' a synthetic talking-head video. We learn an embedding in which the motion signatures derived from videos driven by one individual are clustered together, and pushed away from those of others, regardless of the facial appearance in the synthetic video. This embedding can serve as a tool to help verify authorized use of a synthetic talking-head video. Avatar fingerprinting algorithms will be critical as talking head generators become more ubiquitous, and yet no large scale datasets exist for this new task. Therefore, we contribute a large dataset of people delivering scripted and improvised short monologues, accompanied by synthetic videos in which we render videos of one person using the facial appearance of another. Since our dataset contains human subjects' facial data, we have taken many steps to ensure proper use and governance, including: IRB approval, informed consent prior to data capture, removing subject identity information, pre-specifying the subject matter that can be discussed in the videos, allowing subjects the freedom to revoke our access to their provided data at any point in future (and stipulating that interested third parties maintain current contact information with us so we can convey these changes to them). Lastly, we acknowledge the societal importance of introducing guardrails for the use of talking-head generation technology, and note that we present this work as a step towards trustworthy use of such technologies.

Links

The NVFAIR dataset

We propose the NVIDIA FAcIal Reenactment dataset: the largest collection of facial reenactment videos till date, featuring both self- and cross-reenactments (exchaustively sampled) across 161 unique subjects in the dataset, and a diverse set of subjects captured in a variety of settings. We highlight some data-capture details below and on a dedicated website here.

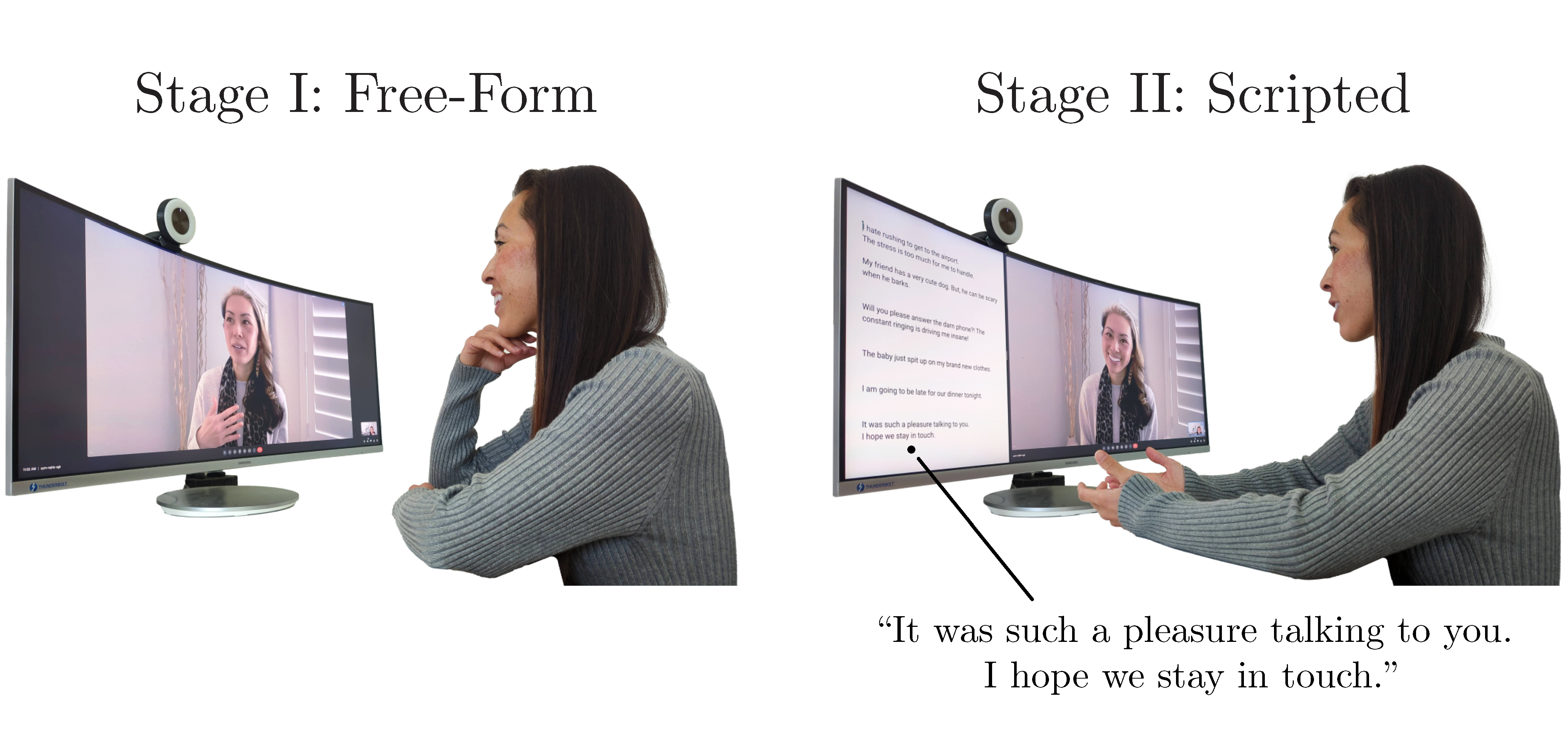

We begin the data capture process by asking 46 subjects to engage in recorded video calls in pairs. Relevant per-subject data is clipped out of these calls and made available as standalone files. This forms the original/real component of our dataset (Figure 1). We ask subjects to interact over recorded video calls, instead of asking them to simply record themselves, since video calls allow for a more natural interaction. Each video call proceeds in two stages. In the first stage, subjects speak on 7 common everyday topics in a free-form monologue when prompted by their recording partner (and the speaker and prompter switch roles for each topic). Next, subjects memorize and recite a predetermined set of scripted monologues to their partners in a manner most natural to them. In Figure 2, we show a subset of the real data.

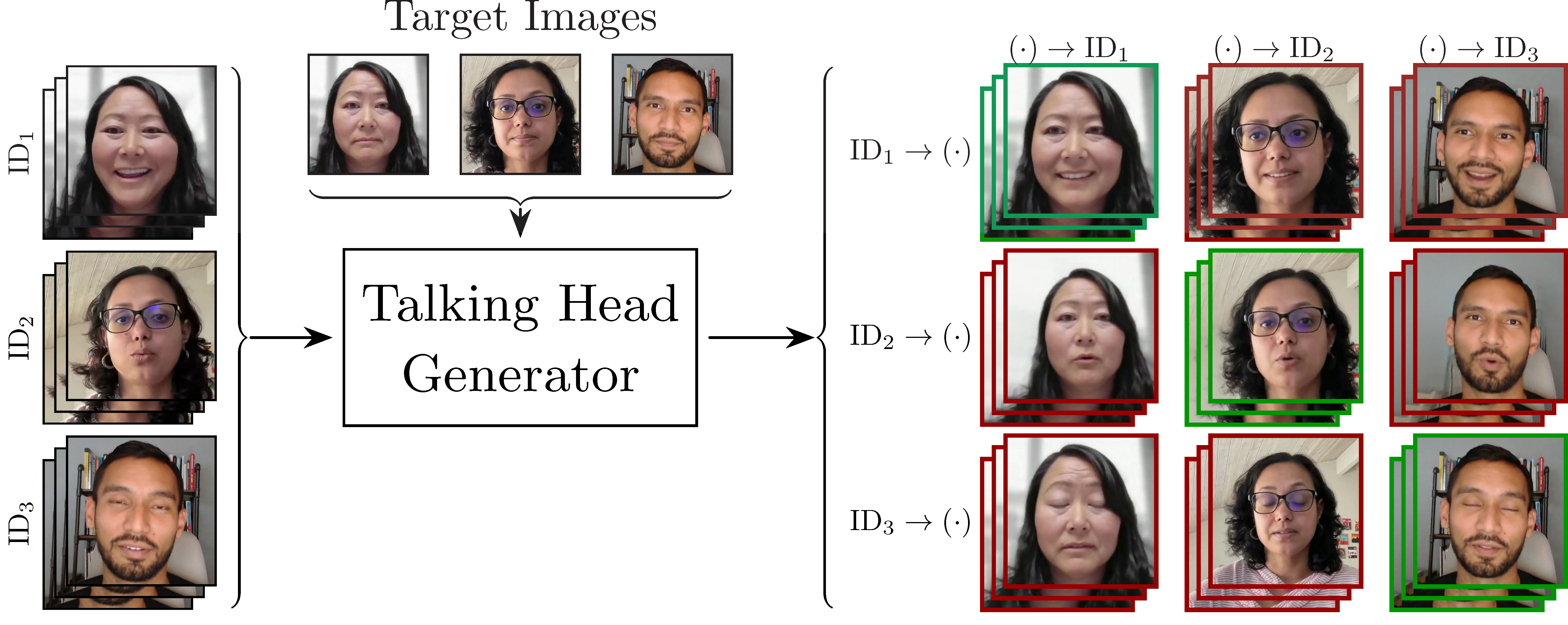

Our dataset also contains a synthetic component (Figure 3): talking-head videos generated using three facial reenactment methods: 1) face-vid2vid, 2) LIA(currently released for test-set only), and 3) TPS (currently released for test-set only). To generate these synthetic videos, we pool the data of 46 subjects from our video call-based data capture with those from the RAVDESS and CREMA-D datasets, leading to a total of 161 unique identities in the synthetic part of our dataset. For each synthetic talking-head video, a neutral facial image of an identity is driven by expressions from their own videos -- termed ''self-reenactment'' -- and the videos of all other identities -- termed ''cross-reenactment''. In a self-reenactment, the identity shown in the synthetic video (''target'' identity) matches the identity driving the video (''driving'' identity). In contrast, the target and driving identities are not the same in a cross-reenactment -- a case which could potentially indicate unauthorized use of the target identity. By recording scripted and free-form monologues for the original data of the 46 subjects, and providing synthetically-generated self and cross-reenactments for all identities (including those from RAVDESS and CREMA-D), we combine many unique properties in our dataset. This makes our dataset well-suited for training and evaluating avatar fingerprinting models and it can also be beneficial for other related tasks such as detection of synthetically-generated content.

Method

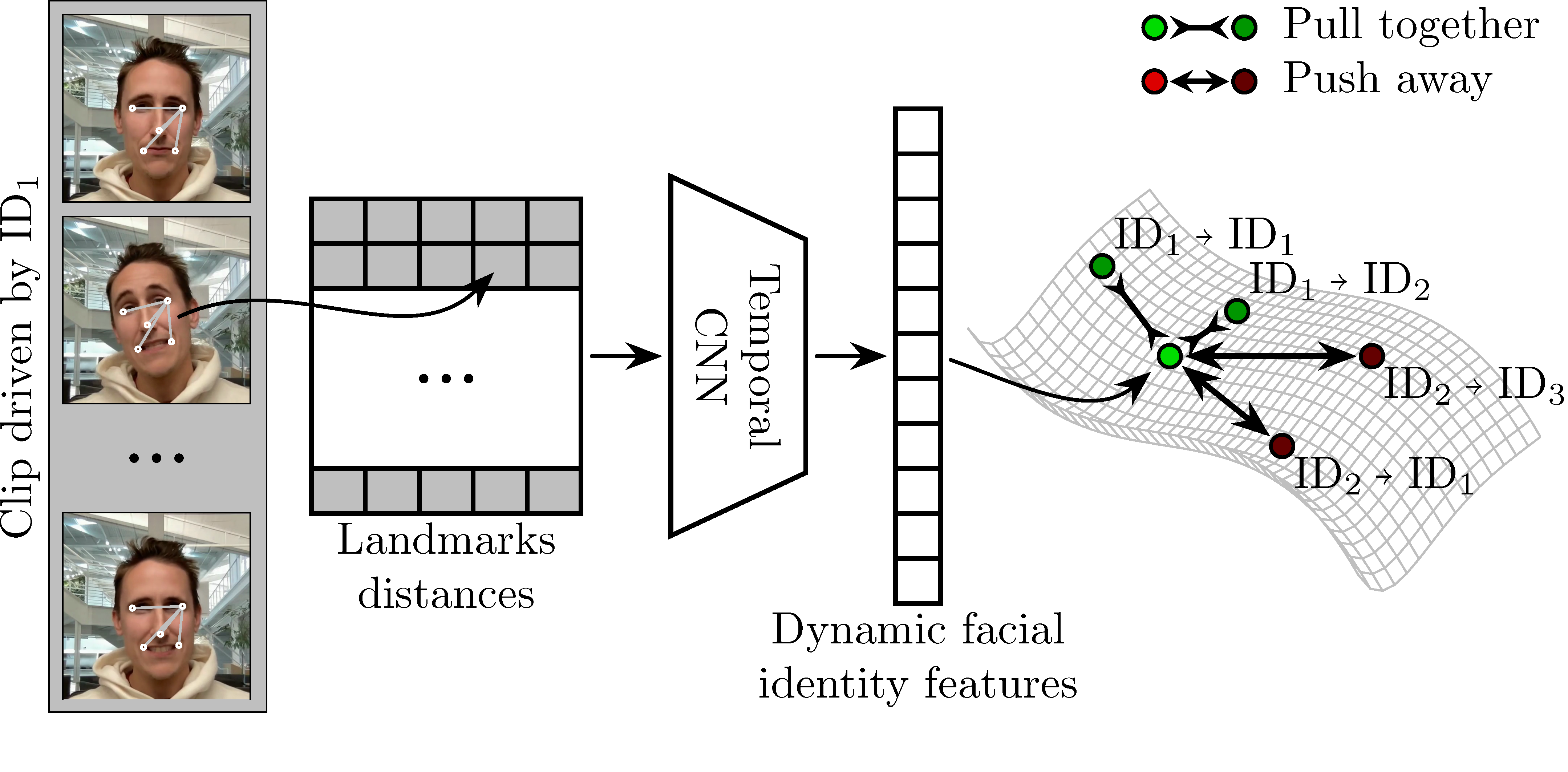

We also propose a baseline method for avatar fingerprinting. Given an input clip, we rely on per-frame facial landmarks to derive the input features to a temporal-processing convolutional network. Here, we are only showing a subset of the 126 landmarks that we use. To abstract away from underlying shape while still capturing facial dynamics, we compute normalized pairwise landmark distances, which are concatenated across frames to obtain the final input feature for the network. We want to cluster these features based on the driving identity, regardless of the target identity. To achieve the desired clustering we propose a dynamic identity embedding contrastive loss. With our loss formulation, a clip driven by a given identity (say ID1 in the case below) would be pulled towards other clips driven by the same identity, while being pushed away from the clips driven by another identity (say ID4 in the case below).

Video Results

Here we evaluate whether our method predicts embedding vectors that lie close together when the synthetic video clips have the same driving identity. We first choose a reference identity: for example, ID1 in the first case shown above. The two leftmost videos for this case show the synthetic video clips driven by ID1. The two rightmost clips have ID1 as the target for other drivers. We report the average Euclidean distance, d, between the dynamic facial identity embedding vectors of each of these four clips and a set of reference clips containing self-reenactments of ID1. As is clear from the values of d, when a clip is driven by ID1, it lies closer to the other videos driven by ID1. On the other hand, when ID1 is a target for other drivers, their embedding vectors are far from those of the clips driven by ID1. A similar observation holds for ID5, and ID7. We analyze this further in the paper with additional AUC-based metrics.

Additional Considerations

We acknowledge the societal importance of introducing guardrails when it comes to the use of talking-head generation technology. We present this work as a step towards trustworthy use of such technologies. Nevertheless, our work could be misconstrued as having solved the problem and inadvertently accelerate the unhindered adoption of talking head technology. We do not advocate for this. Instead we emphasize that this is only the first work on this topic and underscore the importance of further research in this area. Since our dataset contains human subjects' facial data, we have taken many steps to ensure proper use and governance: IRB approval, informed consent prior to data capture, removing subject identity information, pre-specifying the subject matter that can be discussed in the videos, allowing subjects the freedom to revoke our access to their provided data at any point in future (and stipulating that interested third parties maintain current contact information with us so we can convey these changes to them).

Acknowledgments

We would like to thank the participants for contributing their facial audio-visual recordings for our dataset, and Desiree Luong, Woody Luong, and Josh Holland for their help with Figure 1. We thank David Taubenheim for the voiceover in the demo video for our work, and Abhishek Badki for his help with the training infrastructure. We acknowledge Joohwan Kim, Rachel Brown, Anjul Patney, Ruth Rosenholtz, Ben Boudaoud, Josef Spjut, Saori Kaji, Nikki Pope, Daniel Rohrer, Rochelle Pereira, Rajat Vikram Singh, Candice Gerstner, Alex Qi, and Kai Pong for discussions and feedback on the security aspects, data capture and generation steps, informed consent form, photo release form, and agreements for data governance and third-party data sharing. We base this website off of the StyleGAN3 website template. Koki Nagano, Ekta Prashnani, and David Luebke were partially supported by DARPA’s Semantic Forensics (SemaFor) contract (HR0011-20-3-0005). This research was funded, in part, by DARPA’s Semantic Forensics (SemaFor) contract HR0011-20-3-0005. The views and conclusions contained in this document are those of the authors and should not be interpreted as representing the official policies, either expressed or implied, of the U.S. Government. Distribution Statement “A” (Approved for Public Release, Distribution Unlimited).

Citing our dataset or method

@article{prashnani2024avatar,

title={Avatar Fingerprinting for Authorized Use of Synthetic Talking-Head Videos},

author={Ekta Prashnani and Koki Nagano and Shalini De Mello and David Luebke and Orazio Gallo},

booktitle={Proceedings of European Conference on Computer Vision (ECCV)},

year={2024}

}