We present a complete system for real-time rendering of scenes with complex appearance previously reserved for offline use. This is achieved with a combination of algorithmic and system-level innovations.

Our appearance model utilizes learned hierarchical textures that are interpreted using neural decoders, which produce reflectance values and importance-sampled directions. To best utilize the modeling capacity of the decoders, we equip them with two graphics priors. The first prior, a transformation of directions into learned shading frames, facilitates accurate reconstruction of mesoscale effects. The second prior, a microfacet sampling distribution, allows the neural decoder to perform importance sampling efficiently. The resulting appearance model supports anisotropic sampling and level-of-detail rendering, and allows baking deeply layered material graphs into a compact unified neural representation.
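To illustrate the first prior, the following is a minimal sketch of what transforming a direction into a shading frame amounts to. It is not the decoder itself: in the model described above the frames are learned and extracted by the network, whereas here the `normal` and `tangent` vectors are simply assumed to be given, and the helper names (`make_frame`, `to_frame`) are hypothetical.

```python
import math

def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def make_frame(normal, tangent):
    """Build an orthonormal frame (t, b, n) with Gram-Schmidt.

    Assumption: `normal` and `tangent` stand in for quantities that the
    neural decoder would predict; they must not be parallel.
    """
    n = normalize(normal)
    d = dot(tangent, n)
    # Remove the tangent's component along n, then renormalize.
    t = normalize(tuple(tc - d * nc for tc, nc in zip(tangent, n)))
    b = cross(n, t)  # completes a right-handed frame
    return t, b, n

def to_frame(frame, w):
    """Express direction w in the local coordinates of the frame."""
    t, b, n = frame
    return (dot(w, t), dot(w, b), dot(w, n))
```

A direction aligned with the frame's normal maps to the local z axis, so a small MLP that receives the transformed directions sees a tilted surface detail the same way it would see a flat one.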

By exposing hardware-accelerated tensor operations to ray tracing shaders, we show that it is possible to inline and execute the neural decoders efficiently inside a real-time path tracer. We analyze scalability with an increasing number of neural materials and propose to improve performance using code optimized for coherent and divergent execution. Our neural material shaders can be over an order of magnitude faster than non-neural layered materials. This opens the door to using film-quality visuals in real-time applications such as games and live previews.

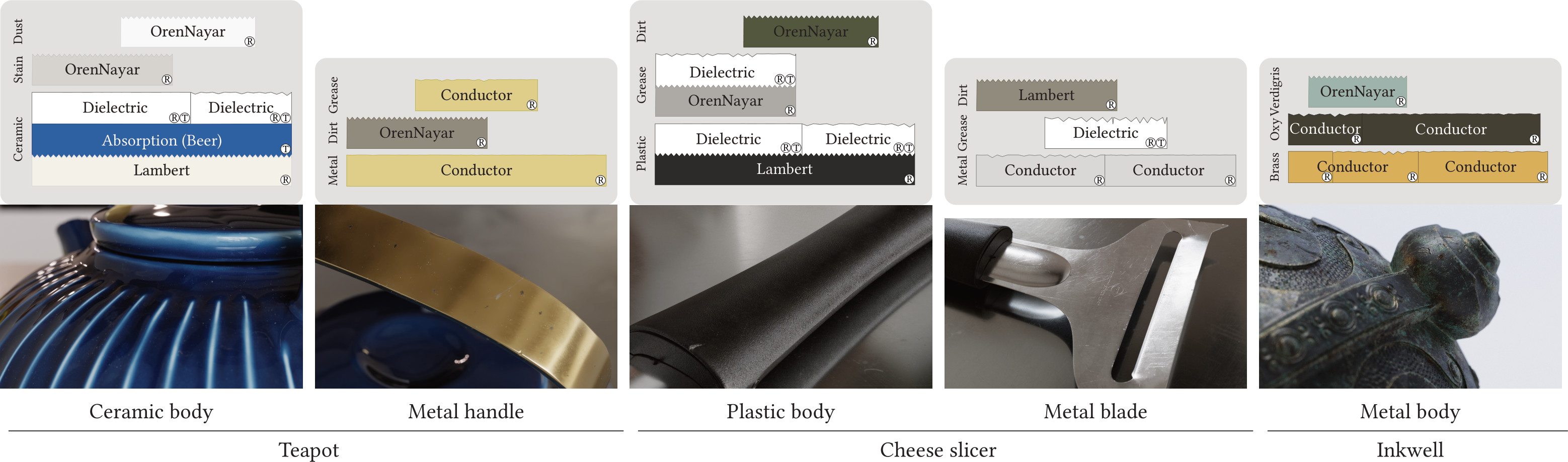

We show rendered images of five materials that we approximate with neural models for representing the BRDF and importance sampling. The illustrations show the stack of scattering layers that makes up each material.

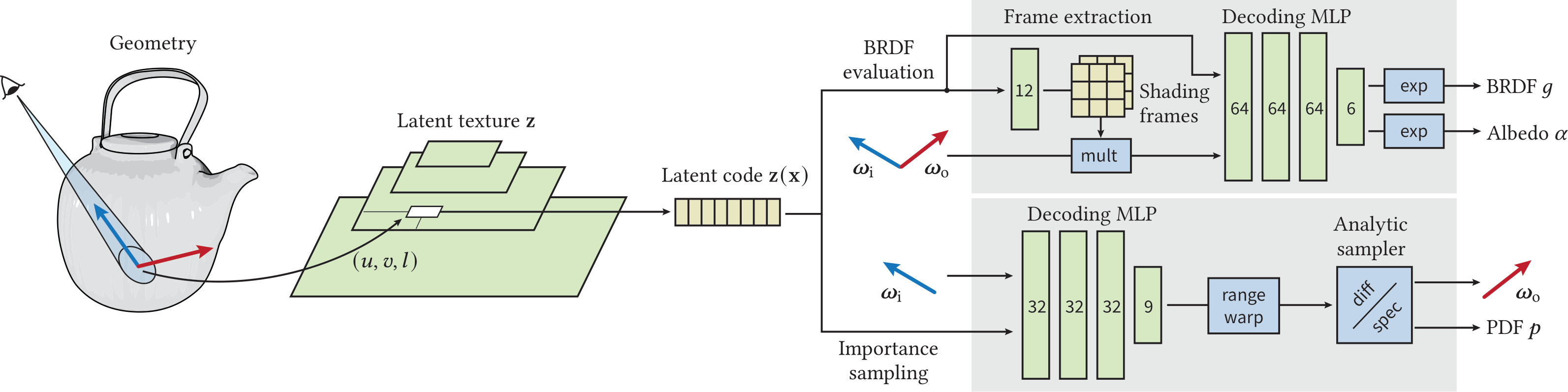

We use our neural BRDFs in a renderer as follows: for each ray that hits a surface with a neural BRDF, we perform the standard texture-coordinate and MIP level computation, and query the latent texture of the neural material. Then we input the latent code into one or two neural decoders, depending on the needs of the rendering algorithm. The BRDF decoder (top box) first extracts two shading frames from the latent code, transforms the incident and outgoing directions into each of them, and passes the transformed directions and the latent code to an MLP that predicts the BRDF value (and optionally the directional albedo). The importance sampler (bottom box) extracts parameters of an analytical, two-lobe distribution, which is then sampled for an outgoing direction and/or evaluated for its PDF.
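The sampling step in the bottom box can be sketched as follows. This is a deliberately simplified stand-in, not the paper's sampler: it assumes the two lobes are a cosine-weighted diffuse lobe and an isotropic, centered GGX specular lobe, and that the parameters `alpha` (roughness) and `w_spec` (specular selection probability) have already been extracted from the latent code. All function and parameter names are hypothetical, and directions are expressed in a local frame whose z axis is the normal.

```python
import math
import random

def ggx_d(cos_h, alpha):
    """Isotropic GGX normal distribution D(h), with cos_h = h.z."""
    a2 = alpha * alpha
    denom = cos_h * cos_h * (a2 - 1.0) + 1.0
    return a2 / (math.pi * denom * denom)

def pdf_two_lobe(wi, wo, alpha, w_spec):
    """Mixture PDF of wo (solid-angle measure) for a given wi."""
    if wi[2] <= 0.0 or wo[2] <= 0.0:
        return 0.0
    pdf_diff = wo[2] / math.pi  # cosine-weighted hemisphere
    h = [a + b for a, b in zip(wi, wo)]
    n = math.sqrt(sum(c * c for c in h))
    h = [c / n for c in h]  # half vector
    wo_dot_h = sum(a * b for a, b in zip(wo, h))
    # Half vectors are sampled with density D(h) * h.z; dividing by
    # 4 * (wo . h) converts that density to one over wo.
    pdf_spec = ggx_d(h[2], alpha) * h[2] / (4.0 * wo_dot_h)
    return w_spec * pdf_spec + (1.0 - w_spec) * pdf_diff

def sample_two_lobe(wi, alpha, w_spec, u):
    """Draw wo from the mixture using three uniform numbers u in [0, 1).

    Returns (wo, pdf), or None if the sample falls below the surface.
    """
    phi = 2.0 * math.pi * u[2]
    if u[0] < w_spec:
        # Specular lobe: sample a half vector, then mirror wi about it.
        a2 = alpha * alpha
        cos2 = (1.0 - u[1]) / (1.0 + (a2 - 1.0) * u[1])
        cos_t = math.sqrt(cos2)
        sin_t = math.sqrt(max(0.0, 1.0 - cos2))
        h = (sin_t * math.cos(phi), sin_t * math.sin(phi), cos_t)
        wi_dot_h = sum(a * b for a, b in zip(wi, h))
        wo = tuple(2.0 * wi_dot_h * hc - wc for hc, wc in zip(h, wi))
    else:
        # Diffuse lobe: cosine-weighted hemisphere sampling.
        r = math.sqrt(u[1])
        wo = (r * math.cos(phi), r * math.sin(phi),
              math.sqrt(max(0.0, 1.0 - u[1])))
    pdf = pdf_two_lobe(wi, wo, alpha, w_spec)
    return (wo, pdf) if pdf > 0.0 else None
```

Returning the full mixture PDF regardless of which lobe produced the sample keeps the estimator consistent when a path tracer divides the BRDF value by the PDF. The same `pdf_two_lobe` function can be called on its own when only the PDF is needed, for example for multiple importance sampling.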

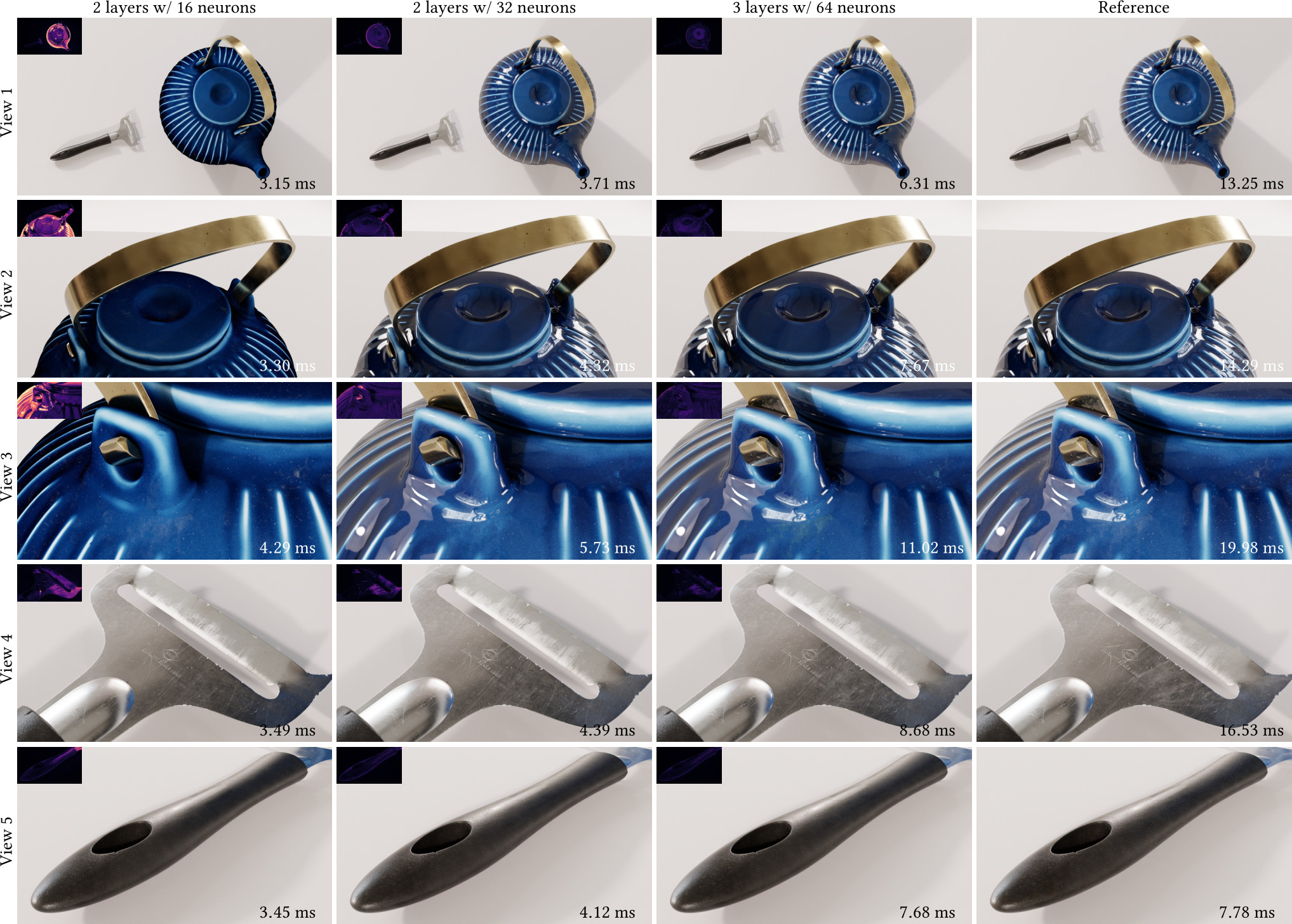

A scene with four materials that we approximate using the proposed neural BRDF. The first three columns correspond to different configurations of the BRDF decoder, from the fastest to the most accurate. FLIP error images are in the corners; timings quantify the cost of rendering a 1 SPP image of the scene. We show images with 8192 samples to suppress path tracing noise.

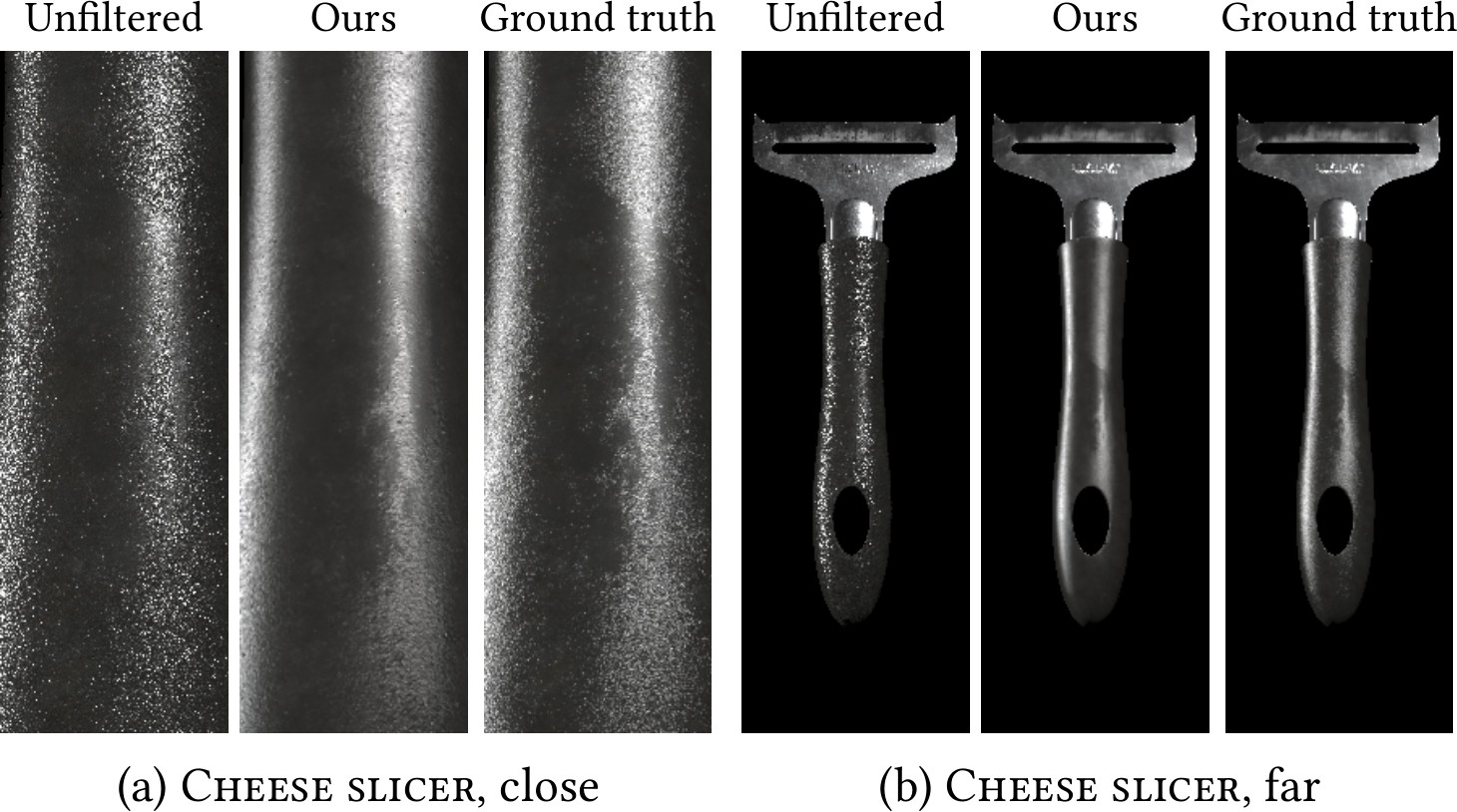

Highly detailed materials alias significantly when rendered without supersampling (left columns, unfiltered). Supersampling averages high-frequency glints and produces a filtered material, but at a sample cost that is impractical for real-time rendering (right columns, ground truth at 512 SPP). Our neural material can render filtered materials without aliasing at any distance, without supersampling (middle columns, ours).
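As a rough illustration of how a level-of-detail lookup can replace supersampling, the sketch below selects a continuous MIP level from a pixel footprint and blends latent codes from the two nearest levels of a latent pyramid. The data layout (a list of 2D grids of latent vectors), the nearest-texel lookup within a level, and the function names are assumptions made for this example. In the model described above the per-level latents are learned, so the filtered appearance comes from training rather than from the blend shown here.

```python
import math

def mip_level(footprint_texels, num_levels):
    """Continuous MIP level for a pixel footprint in level-0 texels."""
    level = math.log2(max(footprint_texels, 1.0))
    return min(level, num_levels - 1.0)

def fetch_latent(pyramid, u, v, level):
    """Blend latent codes from the two MIP levels that bracket `level`.

    pyramid[k][y][x] is a latent vector (a list of floats); u and v are
    texture coordinates in [0, 1].
    """
    lo = int(math.floor(level))
    hi = min(lo + 1, len(pyramid) - 1)
    t = level - lo  # blend weight toward the coarser level

    def texel(tex):
        h, w = len(tex), len(tex[0])
        x = min(int(u * w), w - 1)
        y = min(int(v * h), h - 1)
        return tex[y][x]

    a, b = texel(pyramid[lo]), texel(pyramid[hi])
    return [(1.0 - t) * ca + t * cb for ca, cb in zip(a, b)]
```

A surface seen from far away covers many texels per pixel, so `mip_level` returns a coarse level and the decoder receives a latent code that already represents the averaged appearance of that footprint.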

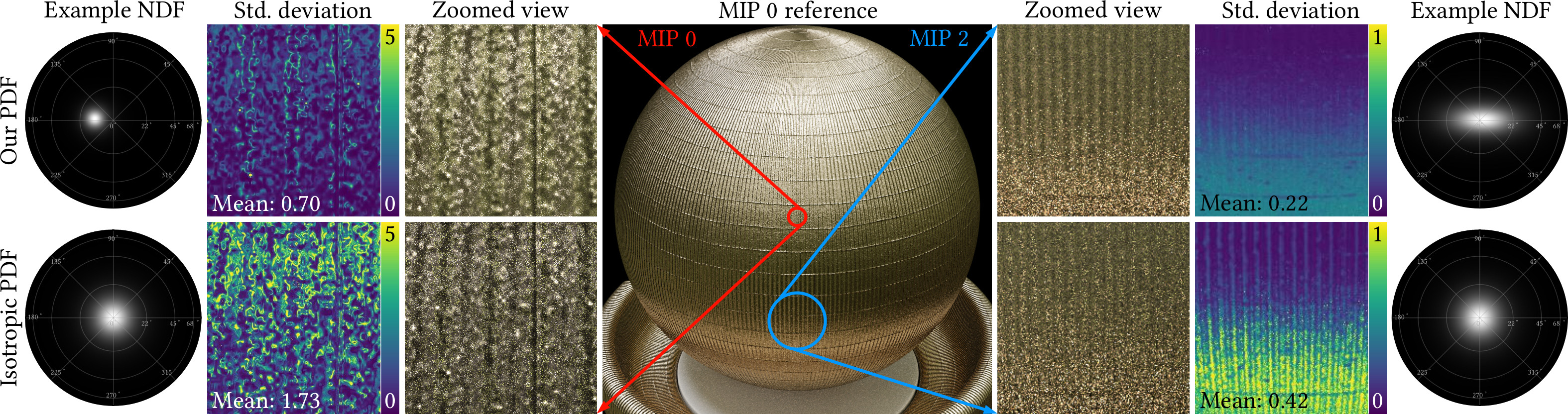

The importance sampler (top row) reduces noise levels compared to a simpler variant only supporting isotropic specular reflections (bottom row), in the spirit of Sztrajman et al. [2021] and Fan et al. [2022]. Left: Fine details of a normal map are captured using a non-centered microfacet NDF. Right: At coarser MIP levels, the filtered distribution is strongly anisotropic. The zoomed views are rendered using 4 SPP. False-color images show the pixel-wise standard deviation and the mean across the entire inset.
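The noise metric in this caption can be computed along the following lines. This is a sketch under assumptions: it takes several independent renders of the same inset as nested lists of scalar pixel values (for example luminance) and uses the population standard deviation; which estimator and color handling were used for the figure is not stated here. The function name `noise_map` is hypothetical.

```python
import statistics

def noise_map(renders):
    """Per-pixel standard deviation across independent renders of an inset.

    renders[k][y][x] is the scalar value of pixel (x, y) in render k.
    Returns the standard-deviation image and its mean over all pixels.
    """
    height, width = len(renders[0]), len(renders[0][0])
    std = [
        [statistics.pstdev([r[y][x] for r in renders]) for x in range(width)]
        for y in range(height)
    ]
    mean_std = statistics.fmean([s for row in std for s in row])
    return std, mean_std
```

The standard-deviation image corresponds to what a false-color map visualizes, and the single mean value summarizes the noise level of the whole inset so that two samplers can be compared with one number.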

We want to thank Toni Bratincevic, Davide Di Giannantonio Potente, and Kevin Margo for their help creating the reference objects, Yong He for evolving the Slang language to support this project, Craig Kolb for his help with the 3D asset importer, Justin Holewinski and Patrick Neill for low-level compiler and GPU driver support, and Karthik Vaidyanathan for providing the TensorCore support in Slang. We also thank Eugene d’Eon, Steve Marschner, Thomas Müller, Marco Salvi, and Bart Wronski for their valuable input. The material test blob in Figure 14 was created by Robin Marin and released under CC BY 3.0 (https://creativecommons.org/licenses/by/3.0/).