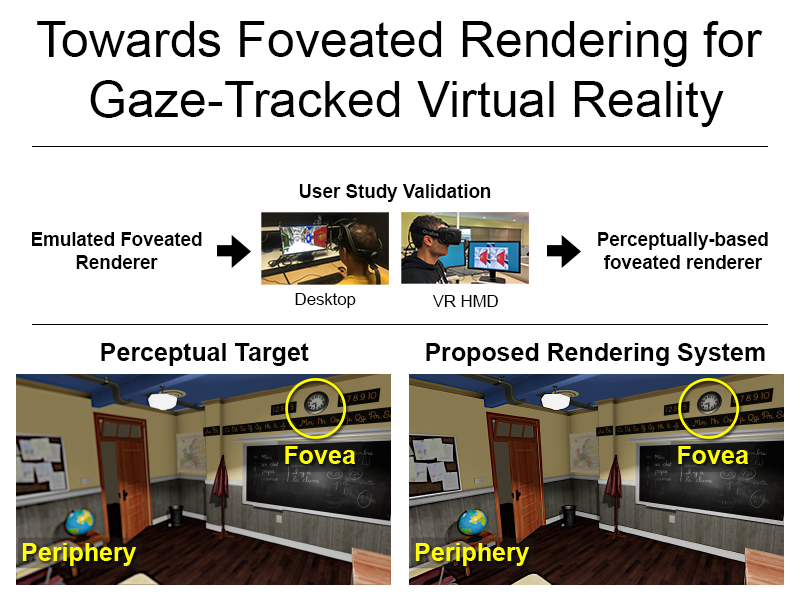

Towards Foveated Rendering for Gaze-Tracked Virtual Reality

Foveated rendering synthesizes images with progressively less detail outside the eye fixation region, potentially unlocking significant speedups for wide field-of-view displays, such as head-mounted displays, where target framerates and resolutions are increasing faster than the performance of traditional real-time renderers. To study and improve potential gains, we designed a foveated rendering user study to evaluate the perceptual abilities of human peripheral vision when viewing today's displays. We determined that filtering peripheral regions reduces contrast, inducing a sense of tunnel vision. When we applied a postprocess contrast enhancement, subjects tolerated up to 2x larger blur radius before detecting differences from a non-foveated ground truth. After verifying these insights on both desktop and head-mounted displays augmented with high-speed gaze-tracking, we designed a perceptual target image to strive for when engineering a production foveated renderer. Given our perceptual target, we designed a practical foveated rendering system that reduces the number of shades by up to 70% and allows coarsened shading up to 30 degrees closer to the fovea than Guenter et al. [2012] without introducing perceivable aliasing or blur. We filter both pre- and post-shading to address aliasing from undersampling in the periphery, introduce a novel multiresolution- and saccade-aware temporal antialiasing algorithm, and use contrast enhancement to help recover peripheral details that are resolvable by our eye but degraded by filtering. We validate our system by performing another user study. Frequency analysis shows our system closely matches our perceptual target. Measurements of temporal stability show we obtain quality similar to temporally filtered non-foveated renderings.
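The abstract describes coarsening shading as distance from the fixation point grows, which is where the savings in number of shades come from. The snippet below is a minimal, hypothetical sketch of that idea only, not the system described here. It assumes a constant pixels-per-degree ratio (a small-angle approximation), two made-up eccentricity thresholds (5° and 20°), and 4x4-pixel tiles. It maps each tile to a shading rate by its eccentricity and counts shades relative to one shade per pixel. The helper names (`eccentricity_deg`, `shading_rate`, `count_shades`, `shade_savings`) are illustrative.

```python
import math


def eccentricity_deg(px, py, gaze_x, gaze_y, pixels_per_degree):
    """Approximate angular distance (degrees) of a screen point from the gaze point.

    Assumes a constant pixels-per-degree ratio across the display, which is
    only a small-angle approximation for wide field-of-view optics.
    """
    return math.hypot(px - gaze_x, py - gaze_y) / pixels_per_degree


def shading_rate(ecc_deg, inner_deg=5.0, outer_deg=20.0):
    """Hypothetical three-zone shading rate chosen by eccentricity.

    1 = one shade per pixel, 2 = one shade per 2x2 pixels, 4 = one per 4x4.
    The thresholds are illustrative assumptions, not values from the paper.
    """
    if ecc_deg < inner_deg:
        return 1
    if ecc_deg < outer_deg:
        return 2
    return 4


def count_shades(width, height, gaze_x, gaze_y, pixels_per_degree, tile=4):
    """Count shading samples when each tile uses the rate at its center."""
    if width % tile or height % tile:
        raise ValueError("width and height must be multiples of the tile size")
    total = 0
    for ty in range(0, height, tile):
        for tx in range(0, width, tile):
            # Evaluate eccentricity once per tile, at the tile center.
            cx, cy = tx + tile / 2, ty + tile / 2
            rate = shading_rate(
                eccentricity_deg(cx, cy, gaze_x, gaze_y, pixels_per_degree)
            )
            total += (tile // rate) ** 2
    return total


def shade_savings(width, height, gaze_x, gaze_y, pixels_per_degree, tile=4):
    """Fraction of shades saved compared with one shade per pixel."""
    shades = count_shades(width, height, gaze_x, gaze_y, pixels_per_degree, tile)
    return 1.0 - shades / (width * height)


if __name__ == "__main__":
    # Hypothetical 1920x1080 view with the gaze at the center, about 20 px/degree.
    print(f"{shade_savings(1920, 1080, 960, 540, 20.0):.1%} fewer shades")
```

Evaluating the rate once per tile mirrors how tile-based variable-rate shading hardware usually works, but the actual savings depend on the display's pixels-per-degree and on thresholds that, per the abstract, must be chosen so that no aliasing or blur becomes perceivable.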

Copyright

Copyright by the Association for Computing Machinery, Inc. Permission to make digital or hard copies of part or all of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for components of this work owned by others than ACM must be honored. Abstracting with credit is permitted. To copy otherwise, to republish, to post on servers, or to redistribute to lists, requires prior specific permission and/or a fee. Request permissions from Publications Dept, ACM Inc., fax +1 (212) 869-0481, or permissions@acm.org. The definitive version of this paper can be found at ACM's Digital Library http://www.acm.org/dl/.