Our group tackles challenges in AI-powered autonomous vehicles (AVs) through a multi-pronged research agenda that collectively aims to lay the foundation for the next generation of AV autonomy.

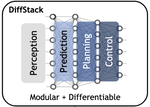

Next-Generation AV Architectures: While modular architectures offer strong reusability and interpretability, they suffer from integration challenges and information bottlenecks between modules. Accordingly, we are investigating tools and methods for designing more tightly integrated autonomy stacks along the following axes:

- System-level: differentiable, semi-modular, and end-to-end stacks.

- Representation-level: leveraging neural and prior-augmented representations (standard-definition maps, map priors, NeRF priors, etc.).

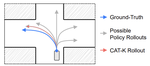

- Component-level: investigating new methods for joint prediction/planning, online mapping, neural reconstruction, and policy planning, among others.

AV Foundation Models: Large models trained on broad, general-purpose data encode a wealth of knowledge. Harnessing this breadth of knowledge for autonomous driving may help AVs generalize to new domains and handle rare scenarios gracefully. To this end, our group investigates vision-language foundation models, vision-language-action modeling, and scene understanding in the wild.

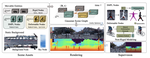

Simulation: Simulation is essential for accelerating AV development. To build more realistic and controllable simulation environments, our group pursues three directions: behavior/traffic modeling to produce realistic, interactive agent motion; language-based simulation generation to specify and edit simulated scenarios from text; and neural simulators to reconstruct logged scenes and simulate them along with their sensor data.

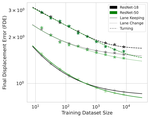

AI Safety for AVs: Data-driven methods based on machine learning (ML) have enabled capabilities beyond what their traditional, non-learning-based counterparts could achieve. However, ML-based algorithms can behave unpredictably or erratically. To enable ML models to be confidently integrated into the decision-making loop of an autonomous vehicle, we research tools and methods that provide both offline and online algorithmic assurances for the components of a learning-enabled autonomy stack. These include uncertainty quantification and calibration, online monitoring of AI components, rule reasoning in neural architectures, and validation (safety KPIs, evaluation, etc.).

In pursuit of this research agenda, we draw on and combine expertise from several fields, including optimal control, decision-making, reinforcement learning, machine learning, deep learning, robotics, computer vision, and formal methods.

Our research efforts are enriched by close collaborations with NVIDIA’s AV product teams.