SIDOD: A Synthetic Image Dataset for 3D Object Pose Recognition with Distractors

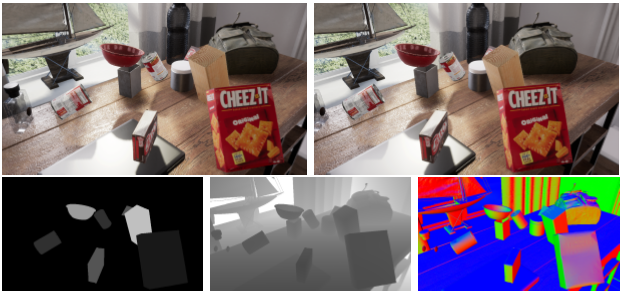

We present a new image dataset generated by the NVIDIA Deep Learning Data Synthesizer intended for use in object detection, pose estimation, and tracking applications. This dataset contains 144k stereo image pairs generated from 18 camera view points of three photorealistic virtual environments with up to 10 objects (chosen randomly from the 21 object models of the YCB dataset) and flying distractors. Object and camera pose, scene lighting, and quantity of objects and distractors were randomized. Each provided view includes RGB, depth, segmentation, and surface normal images. We describe our approach for domain randomization and provide insight into the decisions that produced the dataset.

Publication Date

Published in

External Links

Uploaded Files

Copyright

This material is posted here with permission of the IEEE. Internal or personal use of this material is permitted. However, permission to reprint/republish this material for advertising or promotional purposes or for creating new collective works for resale or redistribution must be obtained from the IEEE by writing to pubs-permissions@ieee.org.