Automated Synthetic-to-Real Generalization

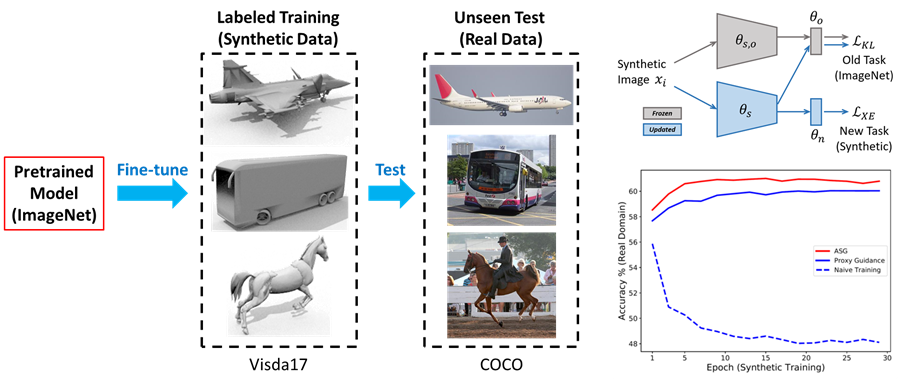

Models trained on synthetic images often generalize poorly to real data. By convention, these models are initialized from ImageNet pre-trained representations. Yet the role of ImageNet knowledge is seldom discussed, even though common practices implicitly rely on it to maintain generalization ability. One example is the careful hand-tuning of early stopping and layer-wise learning rates, which has been shown to improve synthetic-to-real generalization but is laborious and heuristic. In this work, we explicitly encourage the synthetically trained model to maintain representations similar to those of the ImageNet pre-trained model, and we propose a learning-to-optimize (L2O) strategy to automate the selection of layer-wise learning rates. We demonstrate that the proposed framework significantly improves synthetic-to-real generalization without seeing or training on real data, while also benefiting downstream tasks such as domain adaptation. Code is available at: https://github.com/NVlabs/ASG.
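
The two ingredients named above can be illustrated with a minimal, dependency-free sketch. It is not the released implementation: the choice of KL divergence as the similarity penalty, the `weight` parameter, and all function names (`combined_loss`, `layerwise_sgd_step`) are assumptions made for illustration only. The per-layer rates are passed in by hand here, whereas the proposed framework selects them automatically with an L2O strategy.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def combined_loss(task_loss, model_logits, frozen_logits, weight=1.0):
    """Task loss plus a penalty for drifting from the frozen pre-trained model.

    Assumption: the penalty is a KL divergence between the two models'
    output distributions; `weight` is a hypothetical trade-off coefficient.
    """
    p_frozen = softmax(frozen_logits)
    p_model = softmax(model_logits)
    return task_loss + weight * kl_divergence(p_frozen, p_model)


def layerwise_sgd_step(params, grads, layer_lrs):
    """Plain SGD update in which every named layer has its own learning rate."""
    return {
        name: [w - layer_lrs[name] * g for w, g in zip(params[name], grads[name])]
        for name in params
    }


# Usage: the early layer is frozen (rate 0.0) while the late layer is updated.
params = {"early": [1.0], "late": [1.0]}
grads = {"early": [1.0], "late": [1.0]}
updated = layerwise_sgd_step(params, grads, {"early": 0.0, "late": 0.5})
```

When the trained model's outputs match those of the frozen model, the penalty vanishes and `combined_loss` reduces to the task loss; the further the outputs drift, the larger the penalty becomes.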