Interactive Character Control with Auto-Regressive Motion Diffusion Models

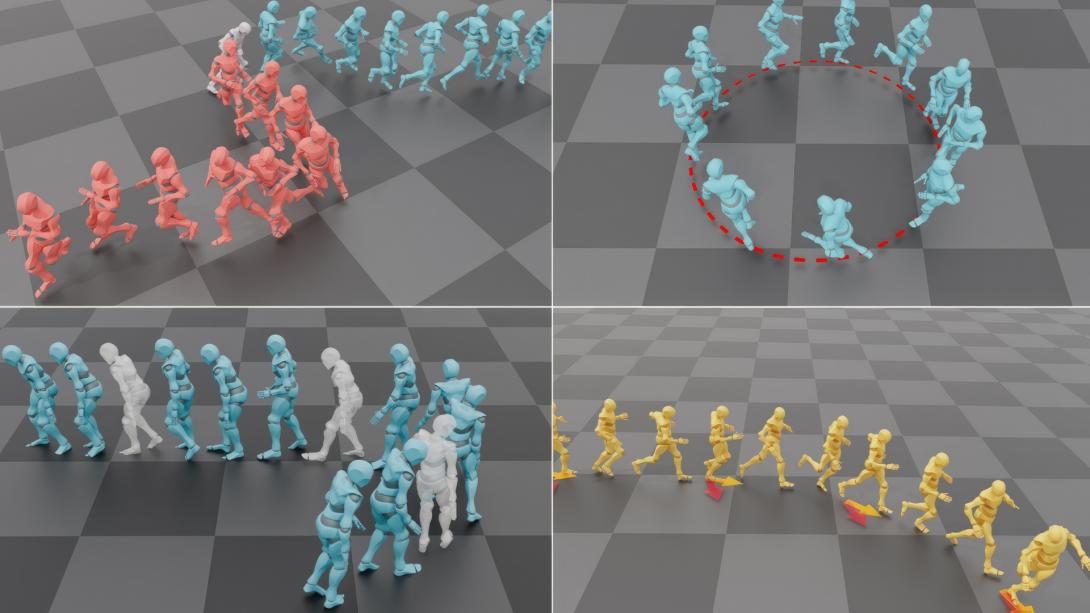

Real-time character control is an essential component for interactive experiences, with a broad range of applications, including but not limited to physics simulations, video games, and virtual reality. The success of diffusion models for image synthesis has led to recent works exploring the use of these models for motion synthesis. However, the majority of these motion diffusion models are primarily designed for offline applications, where space-time models are used to synthesize an entire sequence of frames simultaneously with a pre-specified length. To enable real-time motion synthesis with diffusion model that allows time-varying controls, we propose A-MDM (Auto-regressive Motion Diffusion Model). Our conditional diffusion model takes an initial pose as input, and auto-regressively generates successive motion frames conditioned on the previous frame. Despite its streamlined network architecture, which uses simple MLPs, our framework is capable of generating diverse and high-fidelity motion sequences of arbitrary lengths. Furthermore, we introduce a suite of techniques for incorporating interactive controls into A-MDM, such as task-oriented sampling, in-painting, and hierarchical reinforcement learning. These techniques enable pre-trained A-MDMs to be efficiently adapted for a variety of new downstream tasks. We conduct a comprehensive suite of experiments to demonstrate the effectiveness of A-MDM, and compare its performance against state-of-the-art auto-regressive methods.