Towards Neural Scaling Laws for Time Series Foundation Models

Scaling laws offer valuable insights into the design of time series foundation models (TSFMs). However, previous research has largely focused on the scaling laws

of TSFMs for in-distribution (ID) data, leaving their out-of-distribution (OOD)

scaling behavior and the influence of model architectures less explored. In this

work, we examine two common TSFM architectures—encoder-only and decoderonly Transformers—and investigate their scaling behavior on both ID and OOD

data. These models are trained and evaluated across varying parameter counts,

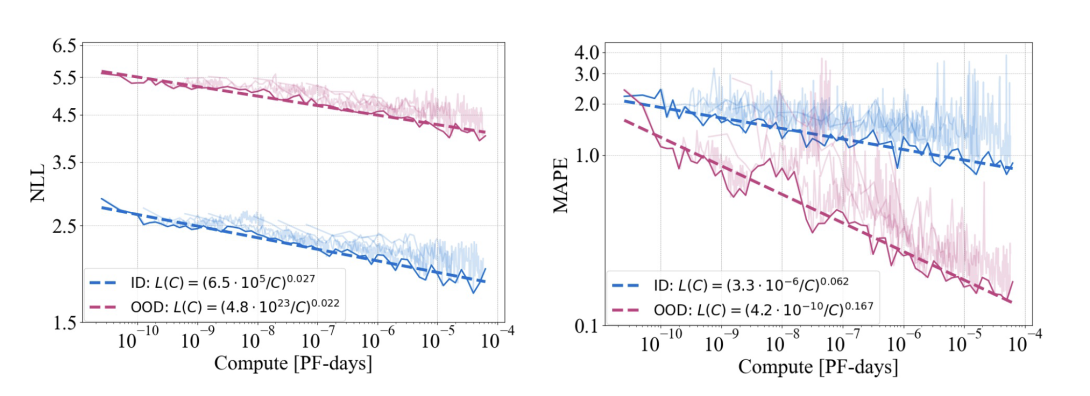

compute budgets, and dataset sizes. Our experiments reveal that the negative loglikelihood of TSFMs exhibits similar scaling behavior in both OOD and ID settings. We further compare the scaling properties across different architectures, incorporating two state-of-the-art TSFMs as case studies, showing that model architecture plays a significant role in scaling. The encoder-only Transformers demonstrate better scalability than the decoder-only Transformers in ID data, while the

architectural enhancements in the two advanced TSFMs primarily improve ID

performance but reduce OOD scalability. While scaling up TSFMs is expected to

drive performance breakthroughs, the lack of a comprehensive understanding of

TSFM scaling laws has hindered the development of a robust framework to guide

model scaling. We fill this gap in this work by synthesizing our findings and providing practical guidelines for designing and scaling larger TSFMs with enhanced

model capabilities.