Learning Linear Transformations for Fast Image and Video Style Transfer

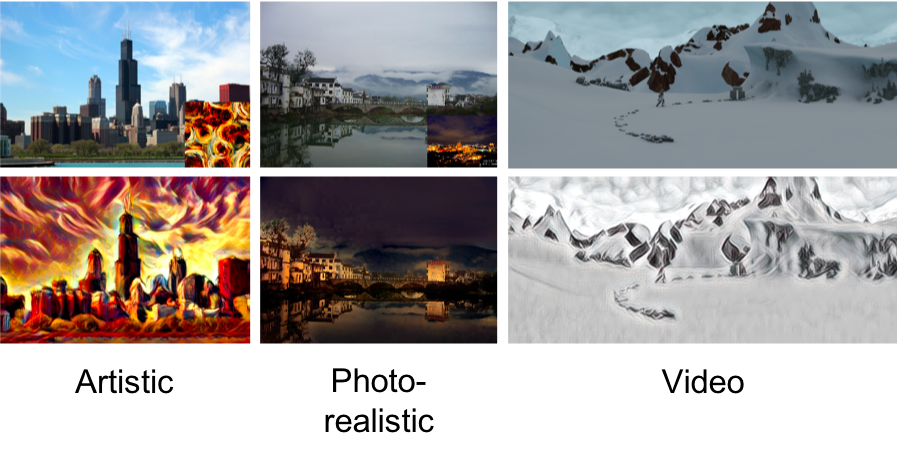

Given an arbitrary pair of images, a universal style transfer method extracts the feel of a reference image and synthesizes an output that keeps the look of a content image. Recent algorithms based on second-order statistics, however, are either computationally expensive or prone to generating artifacts because they trade image quality against run-time performance. In this work, we present an approach for universal style transfer that learns the transformation matrix in a data-driven fashion. Our algorithm is efficient yet flexible enough to transfer different levels of style with the same auto-encoder network. In addition, we propose a linear propagation module that enables a feed-forward network for photo-realistic style transfer. We demonstrate the effectiveness of our approach on three tasks: artistic, photo-realistic, and video style transfer, with comparisons to state-of-the-art methods.
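To make the idea of a linear feature transformation concrete, the following is a minimal NumPy sketch rather than the authors' implementation. It assumes that encoder features are arrays of shape `(C, H, W)`, that the content features are mean-centered before the matrix is applied, and that the per-channel style mean is added back afterwards. The function name `apply_linear_transform` and the identity matrix used for `T` are hypothetical placeholders; in the approach described above, the matrix would be produced by a learned network.

```python
import numpy as np

def apply_linear_transform(content_feat, style_feat, T):
    """Apply a C x C matrix T to content features (illustrative sketch).

    content_feat: array of shape (C, H, W), assumed encoder output for the content image
    style_feat:   array of shape (C, H', W'), assumed encoder output for the style image
    T:            array of shape (C, C); a placeholder for a learned matrix
    """
    c, h, w = content_feat.shape
    fc = content_feat.reshape(c, -1)   # flatten spatial dimensions: (C, H*W)
    fs = style_feat.reshape(c, -1)     # (C, H'*W')

    # Assumption: center the content features channel-wise before transforming.
    fc_centered = fc - fc.mean(axis=1, keepdims=True)

    # A single matrix multiplication performs the transfer in feature space;
    # the per-channel style mean is then added back (also an assumption).
    out = T @ fc_centered + fs.mean(axis=1, keepdims=True)
    return out.reshape(c, h, w)

# Toy usage with random features and an identity matrix standing in for T.
rng = np.random.default_rng(0)
content = rng.standard_normal((8, 4, 4))
style = rng.standard_normal((8, 5, 5))
T = np.eye(8)
out = apply_linear_transform(content, style, T)
```

Because the transfer reduces to one matrix multiplication per frame, a sketch like this suggests why such an approach can be fast; the quality of the result depends entirely on how `T` is obtained, which this example does not model.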

Copyright

This material is posted here with permission of the IEEE. Internal or personal use of this material is permitted. However, permission to reprint/republish this material for advertising or promotional purposes or for creating new collective works for resale or redistribution must be obtained from the IEEE by writing to pubs-permissions@ieee.org.