Sim-to-Real for Robotic Tactile Sensing via Physics-Based Simulation and Learned Latent Projections

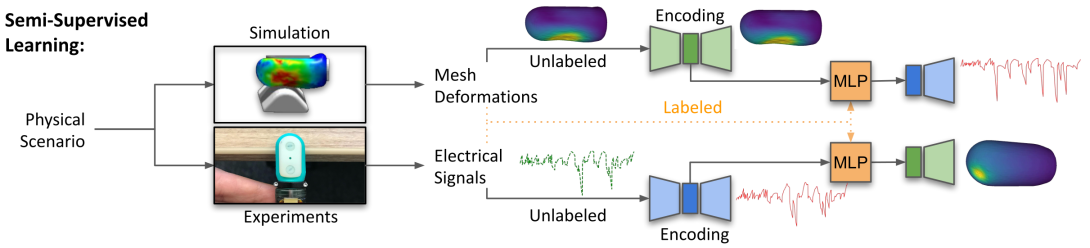

Tactile sensing is critical for robotic grasping and manipulation of objects under visual occlusion. However, in contrast to simulations of robot arms and cameras, current simulations of tactile sensors have limited accuracy, speed, and utility. In this work, we develop an efficient 3D finite element method (FEM) model of the SynTouch BioTac sensor using an open-access, GPU-based robotics simulator. Our simulations closely reproduce results from an experimentally-validated model in an industry-standard, CPU-based simulator, but at 75x the speed. We then learn latent representations for simulated BioTac deformations and real-world electrical output through self-supervision, as well as projections between the latent spaces using a small supervised dataset. Using these learned latent projections, we accurately synthesize real-world BioTac electrical output and estimate contact patches, both for unseen contact interactions. This work contributes an efficient, freely-accessible FEM model of the BioTac and comprises one of the first efforts to combine self-supervision, cross-modal transfer, and sim-to-real transfer for tactile sensors.

Publication Date

Research Area

External Links

Copyright

This material is posted here with permission of the IEEE. Internal or personal use of this material is permitted. However, permission to reprint/republish this material for advertising or promotional purposes or for creating new collective works for resale or redistribution must be obtained from the IEEE by writing to pubs-permissions@ieee.org.