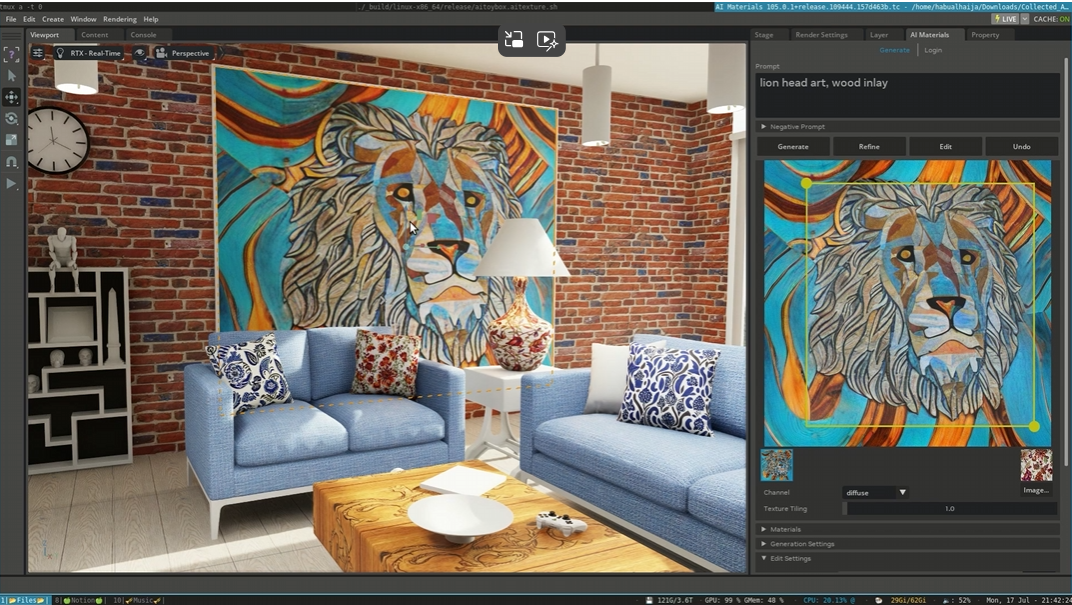

Interactive AI Material Generation and Editing in NVIDIA Omniverse

We present an AI-based tool for interactive material generation within the NVIDIA Omniverse environment. Our approach leverages a state-of-the-art latent diffusion model, with several modifications that adapt it to the task of material generation. Specifically, we employ circular-padded convolution layers in place of standard convolution layers. This adaptation produces seamlessly tiling textures, because circular padding makes opposite image edges blend into each other. Moreover, we extend the model by training additional decoders to generate further material properties such as surface normals, roughness, and ambient occlusion. Each decoder uses the same latent tensor generated by the denoising U-Net to produce a specific material channel. Finally, to enhance real-time performance and user interactivity, we optimize the model with NVIDIA TensorRT, which improves inference speed and yields an efficient and responsive tool.
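
The effect of circular padding can be illustrated with a minimal, dependency-free sketch. This is not the authors' implementation: the function names `circular_conv2d` and `roll` are hypothetical, and the code operates on a single-channel image stored as nested lists. In PyTorch, the comparable behavior is selected with `padding_mode="circular"` on `torch.nn.Conv2d`. The sketch shows that a convolution with wrap-around padding treats the image as a torus, so shifting the input across the border only shifts the output; no position is special, which is why the tile edges show no seam.

```python
def circular_conv2d(image, kernel):
    """Cross-correlate a 2D image with an odd-sized kernel using wrap-around padding.

    Illustrative only: single channel, stride 1, output has the input's size.
    """
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    ph, pw = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    # Modulo indexing wraps reads past one border to the opposite edge.
                    acc += kernel[i][j] * image[(y + i - ph) % h][(x + j - pw) % w]
            out[y][x] = acc
    return out


def roll(image, dy, dx):
    """Circularly shift an image by dy rows and dx columns."""
    h, w = len(image), len(image[0])
    return [[image[(y - dy) % h][(x - dx) % w] for x in range(w)] for y in range(h)]


if __name__ == "__main__":
    image = [[(3 * y + 5 * x) % 7 for x in range(6)] for y in range(4)]
    box = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]

    # Shifting before or after the convolution gives the same result,
    # so the border is treated like any interior location.
    shifted_then_filtered = circular_conv2d(roll(image, 1, 2), box)
    filtered_then_shifted = roll(circular_conv2d(image, box), 1, 2)
    print(shifted_then_filtered == filtered_then_shifted)
```

With zero padding, by contrast, border pixels would see fewer valid neighbors than interior pixels, and the two orders of operation above would disagree near the edges.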

Copyright

Copyright by the Association for Computing Machinery, Inc. Permission to make digital or hard copies of part or all of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for components of this work owned by others than ACM must be honored. Abstracting with credit is permitted. To copy otherwise, to republish, to post on servers, or to redistribute to lists, requires prior specific permission and/or a fee. Request permissions from Publications Dept, ACM Inc., fax +1 (212) 869-0481, or permissions@acm.org. The definitive version of this paper can be found at ACM's Digital Library http://www.acm.org/dl/.