Interpretable Trajectory Prediction for Autonomous Vehicles via Counterfactual Responsibility

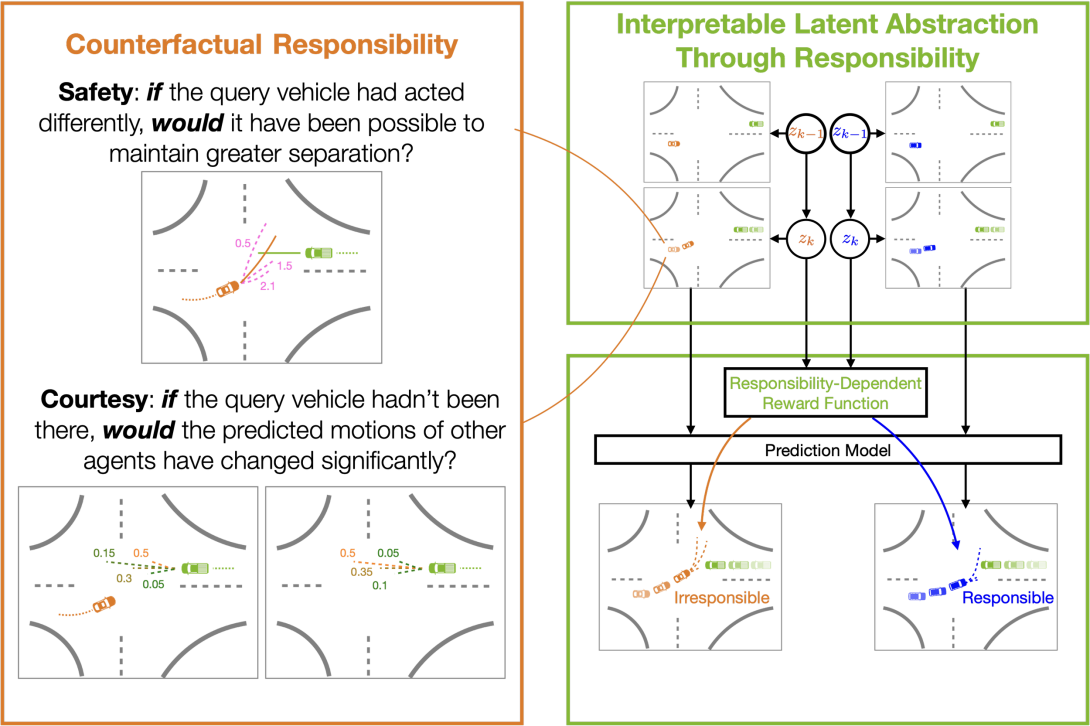

The ability to anticipate surrounding agents’ behaviors is critical for the safe and seamless operation of autonomous vehicles (AVs). While phenomenological methods have successfully predicted future trajectories from scene context, these predictions lack interpretability. Ontological approaches, on the other hand, assume an underlying structure that describes the interaction dynamics or the agents’ internal decision processes, but they often scale poorly or fail to reflect diverse human behaviors. This work proposes an interpretability framework for a phenomenological method through responsibility evaluations. We formulate responsibility as a measure of how much an agent takes into account the welfare of other agents, evaluated through counterfactual reasoning. The framework additionally abstracts the computed responsibility sequences into discrete responsibility levels and grounds these latent levels in a trajectory prediction model. The proposed responsibility-based interpretability framework is modular and can be easily integrated into a wide range of prediction models. To demonstrate the added interpretability the framework provides, we adapt an existing AV prediction model and perform a simulation study on the real-world nuScenes traffic dataset. Experimental results show that incorporating the responsibility signal enables offline ex-post traffic analysis and yields interpretable yet accurate online trajectory predictions.

Copyright

This material is posted here with permission of the IEEE. Internal or personal use of this material is permitted. However, permission to reprint/republish this material for advertising or promotional purposes or for creating new collective works for resale or redistribution must be obtained from the IEEE by writing to pubs-permissions@ieee.org.