Page Placement Strategies for GPUs within Heterogeneous Memory Systems

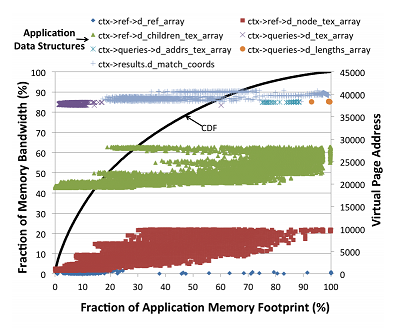

Systems from smartphones to supercomputers are increasingly heterogeneous, being composed of both CPUs and GPUs. To maximize cost and energy efficiency, these systems will increasingly use globally-addressable heterogeneous memory systems, making choices about memory page placement critical to performance. In this work we show that current page placement policies are not sufficient to maximize GPU performance in these heterogeneous memory systems. We propose two new page placement policies that improve GPU performance: one application agnostic and one using application profile information. Our application agnostic policy, bandwidth-aware (BW-AWARE) placement, maximizes GPU throughput by balancing page placement across the memories based on the aggregate memory bandwidth available in a system. Our simulation-based results show that BW-AWARE placement outperforms the existing Linux INTERLEAVE and LOCAL policies by 35% and 18% on average for GPU compute workloads. We build upon BW-AWARE placement by developing a compiler-based profiling mechanism that provides programmers with information about GPU application data structure access patterns. Combining this information with simple program-annotated hints about memory placement, our hint-based page placement approach performs within 90% of oracular page placement on average, largely mitigating the need for costly dynamic page tracking and migration.

Publication Date

Research Area

External Links

Uploaded Files

Copyright

Copyright by the Association for Computing Machinery, Inc. Permission to make digital or hard copies of part or all of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for components of this work owned by others than ACM must be honored. Abstracting with credit is permitted. To copy otherwise, to republish, to post on servers, or to redistribute to lists, requires prior specific permission and/or a fee. Request permissions from Publications Dept, ACM Inc., fax +1 (212) 869-0481, or permissions@acm.org. The definitive version of this paper can be found at ACM's Digital Library http://www.acm.org/dl/.