Towards Selecting Robust Hand Gestures for Automotive Interfaces

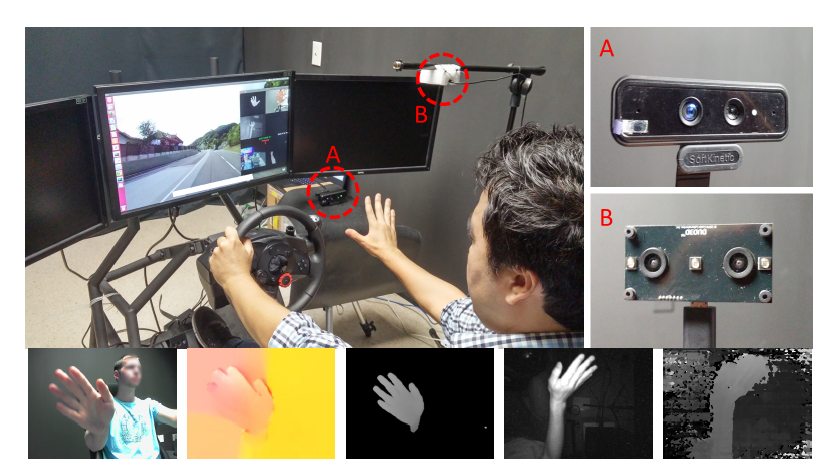

Driver distraction is a serious threat to automotive safety, and the visual-manual interfaces in cars are one source of it. Touchless, hand gesture-based user interfaces can help reduce driver distraction and improve safety and comfort. The choice of hand gestures is central to the success and widespread adoption of such interfaces. In this work, we evaluate the recognition accuracy of 25 different gestures, both for state-of-the-art computer vision-based gesture recognition algorithms and for human observers. We show that some gestures are consistently recognized more accurately than others by both vision-based algorithms and humans. We further identify similarities in the hand gesture recognition abilities of vision-based systems and humans. Lastly, by merging pairs of gestures with high misclassification rates, we propose ten robust hand gestures for automotive interfaces, which vision-based algorithms classify with high and equal accuracy.
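The merging step mentioned at the end of the abstract can be illustrated with a small sketch. The abstract does not give the actual procedure, so everything here is an assumption: the greedy strategy, the symmetric confusion-rate criterion, the function name `merge_confused_classes`, and the toy gesture labels and counts, which are invented for illustration and are not results from the paper.

```python
def merge_confused_classes(confusion, labels, target):
    """Greedily merge the two classes that are confused with each other most.

    confusion[i][j] counts samples of true class i predicted as class j.
    Assumed strategy for illustration only, not the paper's method.
    """
    # Work on copies so the caller's data is left untouched.
    matrix = [list(row) for row in confusion]
    groups = [[label] for label in labels]

    while len(groups) > target:
        # Row totals give the number of samples per (possibly merged) class.
        totals = [sum(row) for row in matrix]
        best_pair, best_rate = None, -1.0
        for i in range(len(groups)):
            for j in range(i + 1, len(groups)):
                denom = totals[i] + totals[j]
                # Symmetric confusion rate between classes i and j.
                rate = (matrix[i][j] + matrix[j][i]) / denom if denom else 0.0
                if rate > best_rate:
                    best_pair, best_rate = (i, j), rate
        i, j = best_pair
        # Fold row j into row i, then column j into column i.
        matrix[i] = [a + b for a, b in zip(matrix[i], matrix[j])]
        del matrix[j]
        for row in matrix:
            row[i] += row[j]
            del row[j]
        groups[i] += groups[j]
        del groups[j]
    return groups, matrix


# Hypothetical toy data: four made-up gestures, 20 samples each.
labels = ["swipe_left", "swipe_right", "tap", "click"]
confusion = [
    [18, 1, 1, 0],
    [2, 17, 0, 1],
    [0, 1, 12, 7],
    [1, 0, 6, 13],
]

groups, merged = merge_confused_classes(confusion, labels, target=3)
accuracy = sum(merged[k][k] for k in range(len(merged))) / sum(map(sum, merged))
print(groups)    # "tap" and "click" end up in one group
print(accuracy)
```

In this toy case the two gestures most often mistaken for each other are collapsed into a single command, which raises overall accuracy from 0.75 to 0.9125 at the cost of a smaller gesture vocabulary. The same idea, applied repeatedly, would reduce a 25-gesture set to a smaller one.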

Copyright

This material is posted here with permission of the IEEE. Internal or personal use of this material is permitted. However, permission to reprint/republish this material for advertising or promotional purposes or for creating new collective works for resale or redistribution must be obtained from the IEEE by writing to pubs-permissions@ieee.org.