Consistent Video Filtering for Camera Arrays

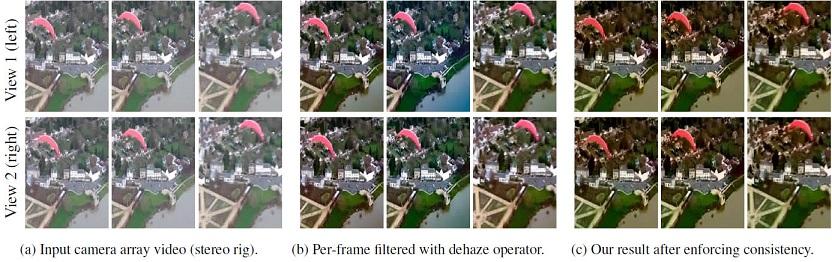

Visual formats have advanced beyond single-view images and videos: 3D movies are commonplace, researchers have developed multi-view navigation systems, and VR is helping to push light field cameras to the mass market. However, editing tools for these media are still nascent, and even simple filtering operations like color correction or stylization are problematic: naively applying image filters per frame or per view rarely produces satisfying results because of temporal and spatial inconsistencies. Our method preserves and stabilizes filter effects while remaining agnostic to the inner workings of the filter. It captures filter effects in the gradient domain, then uses input frame gradients as a reference to impose temporal and spatial consistency. Our least-squares formulation adds minimal overhead compared to naive per-frame, per-view processing. Further, when filter cost is high, we introduce a filter transfer strategy that reduces the number of per-frame filtering computations by an order of magnitude, with only a small reduction in visual quality. We demonstrate our algorithm on several camera array formats, including stereo videos, light fields, and wide-baseline arrays.
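To make the gradient-domain idea concrete, the following is a minimal toy sketch and not the formulation from the paper. It assumes a single pixel tracked over time with a fixed correspondence (no optical-flow warping and no cross-view matching), and it balances fidelity to the naively filtered values against agreement with the temporal gradients of the input. The function name `stabilize_pixel_track` and the weights `w_data` and `w_temp` are hypothetical, chosen only for illustration.

```python
import numpy as np


def stabilize_pixel_track(inp, filt, w_data=1.0, w_temp=10.0):
    """Toy 1-D least-squares stabilization of one pixel track over time.

    Illustrative assumption, not the paper's energy. Minimizes
        w_data * sum_t (out[t] - filt[t])**2
      + w_temp * sum_t ((out[t+1] - out[t]) - (inp[t+1] - inp[t]))**2
    so the output stays close to the filtered values while its temporal
    gradients follow those of the unfiltered input.
    """
    inp = np.asarray(inp, dtype=float)
    filt = np.asarray(filt, dtype=float)
    n = inp.size
    # Forward-difference operator D, shape (n-1, n): (D @ x)[t] = x[t+1] - x[t].
    D = np.diff(np.eye(n), axis=0)
    # Normal equations of the quadratic energy above.
    A = w_data * np.eye(n) + w_temp * (D.T @ D)
    b = w_data * filt + w_temp * (D.T @ (D @ inp))
    return np.linalg.solve(A, b)


# A static input pixel whose naively filtered value flickers from frame to frame.
inp = np.full(8, 0.5)
filt = 0.8 + 0.1 * np.array([1, -1, 1, -1, 1, -1, 1, -1])
out = stabilize_pixel_track(inp, filt)
print("flicker before:", filt.std(), "after:", out.std())
```

In this toy setting the input gradients are zero, so the solve damps the frame-to-frame flicker while keeping the mean of the filtered values. A dense solve is used only for brevity; a real system over full frames and views would need a sparse solver and explicit correspondences.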

Copyright

Permission to make digital or hard copies of part or all of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for components of this work owned by others than Eurographics must be honored.