NVGaze: An Anatomically-Informed Dataset for Low-Latency, Near-Eye Gaze Estimation

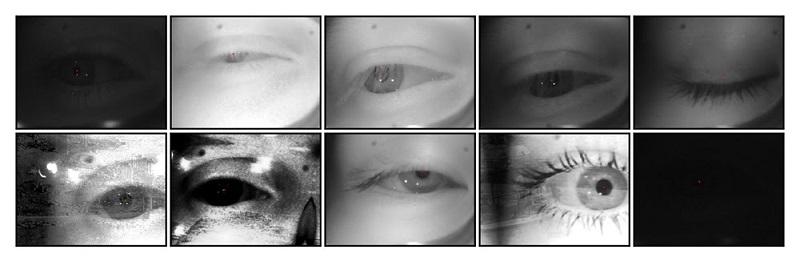

The quality, diversity, and size of the training dataset are critical factors for learning-based gaze estimators. We create two datasets satisfying these criteria for near-eye gaze estimation under infrared illumination: a synthetic dataset using anatomically-informed eye and face models with variations in face shape, gaze direction, pupil and iris, skin tone, and external conditions (two million images at 1280x960), and a real-world dataset collected with 35 subjects (2.5 million images at 640x480). Using our datasets, we train a neural network for gaze estimation, achieving an accuracy of 2.06 (+/- 0.44) degrees across a wide 30 x 40 degree field of view on real subjects excluded from training, and a best-case accuracy of 0.5 degrees (across the same field of view) when explicitly trained for one real subject. We also train a variant of our network to perform pupil estimation, showing higher robustness than previous methods. Our network requires fewer convolutional layers than previous networks, achieving sub-millisecond latency.

Copyright

Copyright by the Association for Computing Machinery, Inc. Permission to make digital or hard copies of part or all of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for components of this work owned by others than ACM must be honored. Abstracting with credit is permitted. To copy otherwise, to republish, to post on servers, or to redistribute to lists, requires prior specific permission and/or a fee. Request permissions from Publications Dept, ACM Inc., fax +1 (212) 869-0481, or permissions@acm.org. The definitive version of this paper can be found at ACM's Digital Library http://www.acm.org/dl/.