Reinforcement Learning through Asynchronous Advantage Actor-Critic on a GPU

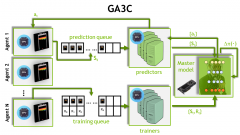

We introduce a hybrid CPU/GPU version of the Asynchronous Advantage Actor-Critic (A3C) algorithm, currently the state-of-the-art method in reinforcement learning for various gaming tasks. We analyze its computational characteristics and focus on the aspects that are critical for exploiting the GPU's computational power. We also propose a system of queues and a dynamic scheduling strategy that may be useful for other asynchronous algorithms as well. Our hybrid CPU/GPU version of A3C, based on TensorFlow, achieves a significant speedup compared to a CPU implementation; we make it publicly available to other researchers at https://github.com/NVlabs/GA3C.
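The queue-based design summarized above can be illustrated with a minimal sketch. It assumes a simplified setup: many agent threads push states onto a shared prediction queue, a single predictor thread drains that queue into batches so that one (here simulated) GPU forward pass serves many agents, and agents push experiences onto a training queue that a trainer consumes in batches. All names (`batched_policy`, `PredictorServer`, `agent`, `trainer`, `run_demo`) are hypothetical, the "model" is a toy stand-in rather than TensorFlow, and the dynamic scheduling strategy is not modeled; this is not the GA3C implementation itself.

```python
import queue
import random
import threading


def batched_policy(states):
    """Hypothetical stand-in for one batched GPU forward pass."""
    # Toy policy: action = state mod 3. A real model would run on the GPU here.
    return [s % 3 for s in states]


class PredictorServer:
    """Drains the prediction queue into batches and replies to each agent."""

    def __init__(self, max_batch=8):
        self.prediction_q = queue.Queue()
        self.max_batch = max_batch
        self.batch_sizes = []  # recorded to show how many requests each call served

    def run(self, stop_event):
        while not stop_event.is_set():
            try:
                first = self.prediction_q.get(timeout=0.05)
            except queue.Empty:
                continue
            batch = [first]
            # Grab whatever else is already waiting, up to max_batch requests.
            while len(batch) < self.max_batch:
                try:
                    batch.append(self.prediction_q.get_nowait())
                except queue.Empty:
                    break
            actions = batched_policy([state for _, state in batch])
            self.batch_sizes.append(len(batch))
            for (reply_q, _), action in zip(batch, actions):
                reply_q.put(action)


def agent(agent_id, steps, prediction_q, training_q):
    """Toy agent: requests an action per step, then emits an experience."""
    rng = random.Random(agent_id)
    reply_q = queue.Queue(maxsize=1)
    for _ in range(steps):
        state = rng.randrange(100)  # stand-in for an emulator observation
        prediction_q.put((reply_q, state))
        action = reply_q.get()  # block until the predictor answers
        reward = 1.0 if action == 0 else 0.0  # toy reward
        training_q.put((state, action, reward))


def trainer(training_q, train_batch, batches_out):
    """Collects experiences into batches; a real trainer would update the model."""
    batch = []
    while True:
        item = training_q.get()
        if item is None:  # sentinel: no more experiences
            break
        batch.append(item)
        if len(batch) == train_batch:
            batches_out.append(len(batch))  # one gradient update would go here
            batch = []
    if batch:
        batches_out.append(len(batch))


def run_demo(n_agents=16, steps=20, max_batch=8, train_batch=32):
    server = PredictorServer(max_batch)
    training_q = queue.Queue()
    training_batches = []
    stop = threading.Event()
    pred_thread = threading.Thread(target=server.run, args=(stop,))
    train_thread = threading.Thread(
        target=trainer, args=(training_q, train_batch, training_batches))
    pred_thread.start()
    train_thread.start()
    agents = [threading.Thread(target=agent,
                               args=(i, steps, server.prediction_q, training_q))
              for i in range(n_agents)]
    for t in agents:
        t.start()
    for t in agents:
        t.join()
    stop.set()
    pred_thread.join()
    training_q.put(None)
    train_thread.join()
    return {"experiences": sum(training_batches),
            "prediction_batches": server.batch_sizes,
            "training_batches": training_batches}
```

Calling `run_demo()` runs 16 agents for 20 steps each; the returned `prediction_batches` list shows how many agent requests each simulated forward pass served. The `max_batch` parameter trades per-agent latency against throughput: larger batches make better use of a GPU but make agents wait longer for their actions.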