Efficient Transformer Inference with Statically Structured Sparse Attention

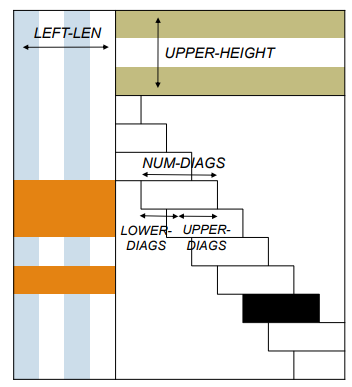

Self-attention matrices of Transformers are often highly sparse because the relevant context of each token is typically limited to just a few other tokens in the sequence. To reduce the computational burden of self-attention during Transformer inference, we propose static, structured, sparse attention masks that split attention matrices into dense regions, skipping computations outside these regions while reducing computations inside them. To support the proposed mask structure, we design an entropy-aware finetuning algorithm that naturally encourages attention sparsity while maximizing task accuracy. Furthermore, we extend a typical dense deep learning accelerator to efficiently exploit our structured sparsity pattern. Compared to a dense baseline, we achieve a 56.6% reduction in energy consumption and a 58.9% performance improvement, with <1% accuracy loss and 2.6% area overhead.
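To make the idea of a static mask built from dense regions concrete, here is a minimal pure-Python sketch. It assumes (hypothetically; the abstract does not specify the layout) that the dense regions are square blocks on the diagonal, described as half-open rectangles. Scores outside every region are simply never computed, which is equivalent to masking them with -inf before the softmax. The helpers `block_diagonal_regions`, `sparse_attention`, and `mean_attention_entropy` are illustrative names, and the entropy term is only a plausible stand-in for an entropy-aware regularizer, not the paper's actual finetuning objective.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]
# A dense region is a half-open rectangle (row_start, row_end, col_start, col_end).
Region = Tuple[int, int, int, int]


def block_diagonal_regions(seq_len: int, block: int) -> List[Region]:
    """Hypothetical static layout: square dense blocks on the diagonal."""
    return [(s, min(s + block, seq_len), s, min(s + block, seq_len))
            for s in range(0, seq_len, block)]


def sparse_attention(q: Matrix, k: Matrix, v: Matrix,
                     regions: List[Region]) -> Tuple[Matrix, Matrix, int]:
    """Scaled dot-product attention evaluated only inside the dense regions.

    Scores outside every region are never computed (same result as masking
    them with -inf). Assumes every query row is covered by at least one
    region. Returns (output, per-row attention probabilities, scores computed).
    """
    n, d = len(q), len(q[0])
    scale = 1.0 / math.sqrt(d)
    # Static mask: the key columns each query row may attend to.
    cols_per_row: List[List[int]] = [[] for _ in range(n)]
    for r0, r1, c0, c1 in regions:
        for i in range(r0, r1):
            cols_per_row[i].extend(range(c0, c1))

    out: Matrix = []
    probs_per_row: Matrix = []
    for i, cols in enumerate(cols_per_row):
        scores = [scale * sum(q[i][t] * k[j][t] for t in range(d)) for j in cols]
        m = max(scores)  # subtract the row max for numerical stability
        w = [math.exp(s - m) for s in scores]
        z = sum(w)
        probs = [x / z for x in w]
        probs_per_row.append(probs)
        out.append([sum(p * v[j][t] for p, j in zip(probs, cols))
                    for t in range(len(v[0]))])
    computed = sum(len(cols) for cols in cols_per_row)
    return out, probs_per_row, computed


def mean_attention_entropy(probs_per_row: Matrix) -> float:
    """Mean Shannon entropy (nats) over attention rows. A finetuning loss
    could add lambda * this value to favor peaked, sparse attention
    (hypothetical regularizer, not the paper's exact objective)."""
    ents = [-sum(p * math.log(p) for p in row if p > 0) for row in probs_per_row]
    return sum(ents) / len(ents)


# Usage: 8 tokens, two 4x4 dense blocks. Zero queries give uniform attention
# inside each block, so each output is the mean of that block's values.
n, d = 8, 2
q = [[0.0] * d for _ in range(n)]
k = [[float(i), 1.0] for i in range(n)]
v = [[float(i)] for i in range(n)]
out, probs, computed = sparse_attention(q, k, v, block_diagonal_regions(n, 4))
print(out[0], out[5], computed, computed / (n * n))
```

Running it prints `[1.5] [5.5] 32 0.5`: only 32 of the 64 scores are evaluated, so half of the attention matrix is skipped. Because the region list is fixed ahead of time rather than derived from the data, hardware can schedule the dense blocks statically, which is the property the abstract relies on.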

Copyright

This material is posted here with permission of the IEEE. Internal or personal use of this material is permitted. However, permission to reprint/republish this material for advertising or promotional purposes or for creating new collective works for resale or redistribution must be obtained from the IEEE by writing to pubs-permissions@ieee.org.