MAGNet: A Modular Accelerator Generator for Neural Networks

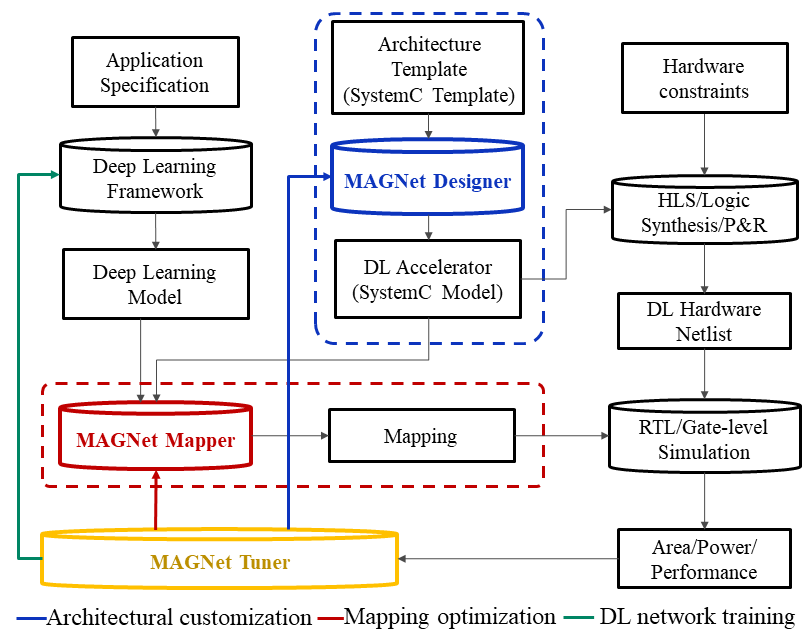

Deep neural networks have been adopted in a wide range of application domains, leading to high demand for inference accelerators. However, the high cost of ASIC hardware design makes it challenging to build custom accelerators for different targets. To lower design cost, we propose MAGNet, a modular accelerator generator for neural networks. MAGNet takes as input a target application consisting of one or more neural networks, along with hardware constraints, and produces synthesizable RTL for a neural network accelerator ASIC as well as valid mappings for running the target networks on the generated hardware. MAGNet consists of three key components: (i) MAGNet Designer, a highly configurable architectural template designed in C++ and synthesizable by high-level synthesis tools, which supports a wide range of design-time parameters such as data formats, memory hierarchies, and dataflows; (ii) MAGNet Mapper, an automated framework for exploring different software mappings for executing a neural network on the generated hardware; and (iii) MAGNet Tuner, a design space exploration framework encompassing the designer, the mapper, and a deep learning framework to enable fast design space exploration and co-optimization of architecture and application. We demonstrate the utility of MAGNet by designing an inference accelerator optimized for image classification using three different neural networks: AlexNet, ResNet, and DriveNet. MAGNet-generated hardware is highly efficient and leverages a novel multi-level dataflow to achieve 40 fJ/op and 2.8 TOPS/mm^2 in a 16nm technology node for the ResNet-50 benchmark with <1% accuracy loss on the ImageNet dataset.
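The division of labor between the mapper and the tuner can be illustrated with a toy sketch. Everything in it is hypothetical and is not taken from MAGNet: the names `HwConfig`, `Mapping`, `toy_cost`, `best_mapping`, and `tune`, the two hardware parameters, the made-up cost formula, and the exhaustive sweep, which stands in for whatever search strategy the actual tuner uses. It only shows the nesting: an outer loop over hardware configurations, and for each one an inner search over mappings that are valid on that hardware.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class HwConfig:
    num_pes: int      # number of processing elements (hypothetical parameter)
    buffer_kib: int   # per-PE buffer capacity (hypothetical parameter)


@dataclass(frozen=True)
class Mapping:
    tile: int         # tile size along one loop dimension (hypothetical)


def toy_cost(hw, mapping, layer_size):
    """Made-up cost in arbitrary units, NOT MAGNet's model.

    Returns None when the mapping is invalid for this hardware.
    """
    if mapping.tile > hw.buffer_kib:  # the tile must fit in the buffer
        return None
    passes = -(-layer_size // (mapping.tile * hw.num_pes))  # ceiling division
    return passes * (mapping.tile + hw.num_pes)


def best_mapping(hw, layer_size, tiles):
    """Mapper role: pick the cheapest valid mapping for one layer."""
    scored = [(toy_cost(hw, Mapping(t), layer_size), Mapping(t)) for t in tiles]
    valid = [(cost, m) for cost, m in scored if cost is not None]
    return min(valid, key=lambda cm: cm[0]) if valid else None


def tune(layer_sizes, pe_options, buffer_options, tiles):
    """Tuner role: sweep hardware configurations, keep the lowest total cost."""
    best = None
    for num_pes, buf in product(pe_options, buffer_options):
        hw = HwConfig(num_pes, buf)
        results = [best_mapping(hw, size, tiles) for size in layer_sizes]
        if any(r is None for r in results):
            continue  # some layer has no valid mapping on this hardware
        total = sum(cost for cost, _ in results)
        if best is None or total < best[0]:
            best = (total, hw, [m for _, m in results])
    return best
```

Calling `tune([64, 128], [4, 8], [8, 16], [4, 8, 16])` returns a tuple of total cost, chosen hardware configuration, and one mapping per layer, or `None` when no configuration can map every layer.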

Copyright

This material is posted here with permission of the IEEE. Internal or personal use of this material is permitted. However, permission to reprint/republish this material for advertising or promotional purposes or for creating new collective works for resale or redistribution must be obtained from the IEEE by writing to pubs-permissions@ieee.org.