A 0.11 pJ/Op, 0.32-128 TOPS, Scalable Multi-Chip-Module-based Deep Neural Network Accelerator Designed with a High-Productivity VLSI Methodology

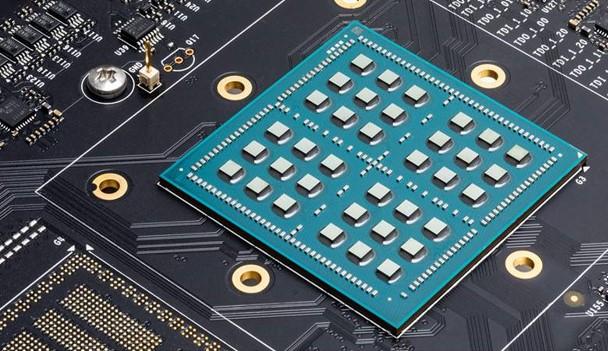

This work presents a scalable deep neural network (DNN) inference accelerator consisting of 36 small chips connected in a mesh network on a multi-chip module (MCM). The accelerator scales flexibly, enabling efficient inference on a wide range of DNNs, from mobile to data center domains. The testchip was implemented with a novel high-productivity VLSI methodology: it was designed entirely in C++ using High-Level Synthesis (HLS) tools and leveraged an agile VLSI design flow. The 6 mm² chip was implemented in 16nm technology and achieves 1.29 TOPS/mm², 0.11 pJ/op energy efficiency, and 4 TOPS (8-bit integer) peak performance on a single chip. The 36-chip MCM reaches 128 TOPS peak performance and 2,615 images/s on ResNet-50 inference.
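To make the multi-chip mesh concrete, the sketch below models how data travels between chips in a 36-chip mesh. The abstract only states that the chips form a mesh network. Everything else here is an assumption made for illustration: the 6 × 6 grid arrangement, the use of dimension-ordered (X-then-Y) routing, and the helper names `xy_route`, `hops`, and `average_hops`, which are hypothetical and do not describe the accelerator's actual network-on-package.

```python
from itertools import product

# Assumption (not stated in the text): the 36 chips sit in a 6 x 6 grid,
# and packets use dimension-ordered routing (move along X, then along Y).
ROWS, COLS = 6, 6


def xy_route(src, dst):
    """Hypothetical helper: list the (row, col) chips visited from src to dst,
    moving along the column index (X) first, then the row index (Y)."""
    r, c = src
    path = [(r, c)]
    step = 1 if dst[1] > c else -1
    while c != dst[1]:  # X dimension first
        c += step
        path.append((r, c))
    step = 1 if dst[0] > r else -1
    while r != dst[0]:  # then Y dimension
        r += step
        path.append((r, c))
    return path


def hops(src, dst):
    """Number of chip-to-chip links a packet crosses (path length minus one)."""
    return len(xy_route(src, dst)) - 1


def average_hops(rows=ROWS, cols=COLS):
    """Mean hop count over all ordered pairs of distinct chips in the grid."""
    chips = list(product(range(rows), range(cols)))
    pairs = [(a, b) for a in chips for b in chips if a != b]
    return sum(hops(a, b) for a, b in pairs) / len(pairs)


if __name__ == "__main__":
    print(xy_route((0, 0), (2, 3)))  # X first, then Y
    print(hops((0, 0), (5, 5)))      # corner to corner: 10 hops
    print(average_hops())            # 4.0 for a 6 x 6 grid
```

Under these assumptions, a packet crosses 4 links on average and at most 10 links (corner to corner). This is one reason workload placement matters when a layer is spread across many chips in a mesh: communicating with distant chips costs more links than communicating with neighbors.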
